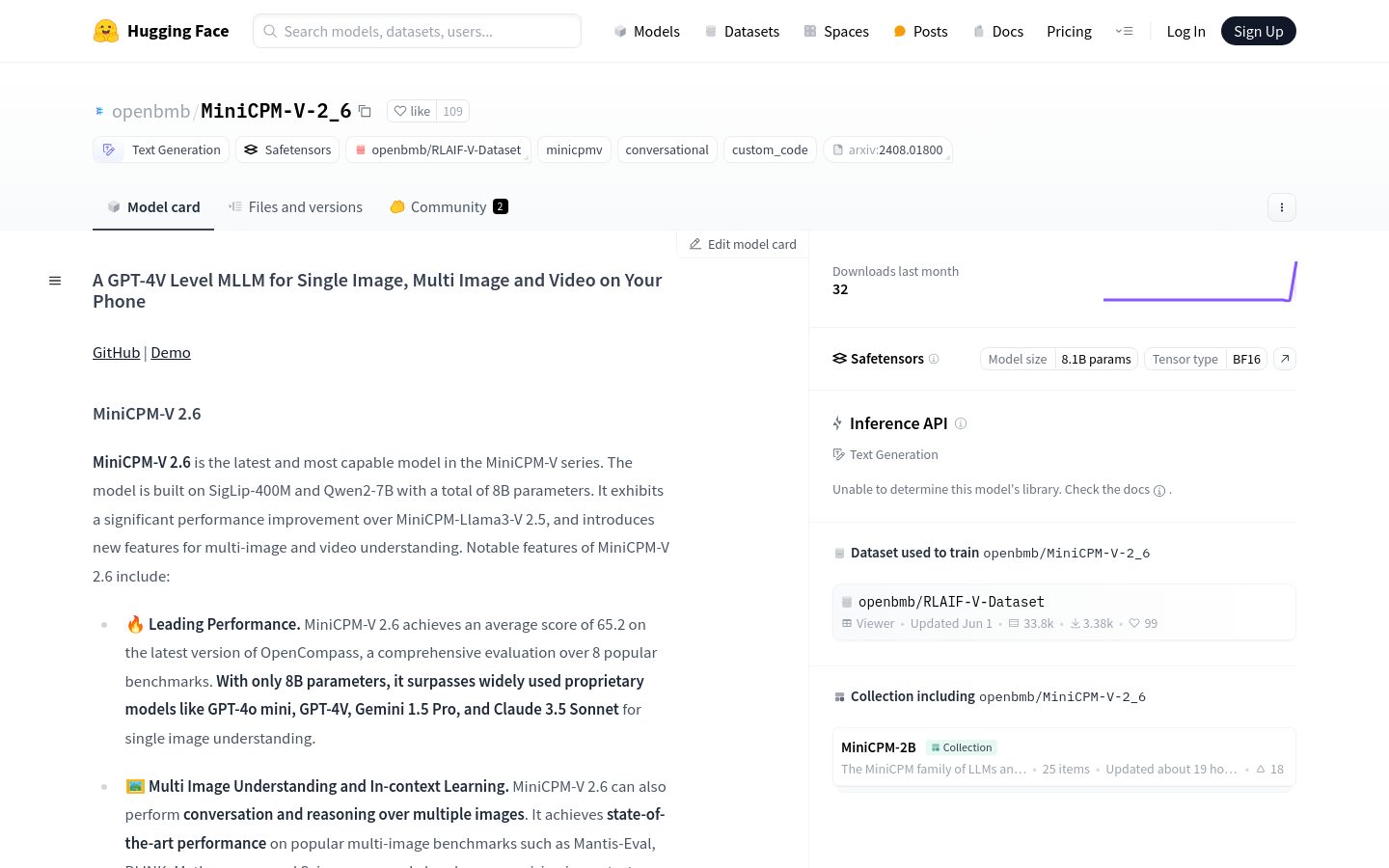

MiniCPM-V 2.6

Overview:

MiniCPM-V 2.6 is a multimodal large language model with 8 billion parameters. It delivers leading performance in single-image understanding, multi-image understanding, and video comprehension. The model scores an average of 65.2 on OpenCompass, a comprehensive evaluation spanning eight popular benchmarks, surpassing widely used proprietary models. It also has robust OCR capabilities, supports multiple languages, and runs efficiently enough for real-time video understanding on devices such as the iPad.

Target Users:

The model is aimed at researchers and developers who need high-performance solutions for image and video understanding, multilingual processing, and OCR.

Use Cases

Researchers use MiniCPM-V 2.6 for image recognition and classification tasks.

Developers leverage the model for real-time video subtitle generation and content analysis.

Businesses adopt the model to optimize image and video processing capabilities in their products.

Features

Achieves an average score of 65.2 on OpenCompass, a comprehensive evaluation covering eight popular benchmarks.

Supports multi-image understanding and in-context learning, with strong performance on both.

Accepts video input, supporting conversation about the video and producing detailed captions.

Strong OCR capabilities, handling images up to 1.8 million pixels.

Builds on the RLAIF-V and VisCPM techniques, giving reliable behavior and low hallucination rates.

Encodes images into significantly fewer visual tokens than most models, which improves inference speed and reduces power consumption.

How to Use

Load the MiniCPM-V 2.6 model using the Hugging Face Transformers library.

Prepare the input data, which can be a single image, multiple images, or a video file.

Input questions or commands via the model's chat function and receive responses.

If processing a video, use the encode_video function from the model's example code to sample frames from the video before passing them to the model.

Utilize the model's multilingual capabilities for analyzing image or video content in different languages.

Fine-tune the model as needed to adapt it for specific application scenarios or tasks.
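The steps above can be sketched in Python as follows. This is a minimal sketch, not the official example: the repository name openbmb/MiniCPM-V-2_6, the chat(image=None, msgs=..., tokenizer=...) call shape, and the message layout are assumptions based on the published model card and may change between releases. The helpers build_msgs, sample_frame_indices, load_model, and ask are hypothetical names introduced here for illustration. sample_frame_indices only mimics the kind of even frame sampling a function like encode_video performs; it does not decode video.

```python
from typing import Any, Dict, List, Sequence

# Assumed Hugging Face repository name; check the model card before use.
MODEL_ID = "openbmb/MiniCPM-V-2_6"


def build_msgs(images: Sequence[Any], question: str) -> List[Dict[str, Any]]:
    """Pack images (or sampled video frames) and a question into one user turn."""
    return [{"role": "user", "content": [*images, question]}]


def sample_frame_indices(total_frames: int, max_frames: int) -> List[int]:
    """Pick at most max_frames evenly spaced frame indices (hypothetical helper)."""
    if total_frames <= 0 or max_frames <= 0:
        return []
    if total_frames <= max_frames:
        return list(range(total_frames))
    gap = total_frames / max_frames
    # Take the frame at the middle of each equally sized segment.
    return [int(i * gap + gap / 2) for i in range(max_frames)]


def load_model(model_id: str = MODEL_ID):
    """Load model and tokenizer; heavy imports stay local to this function."""
    import torch
    from transformers import AutoModel, AutoTokenizer

    # trust_remote_code is needed because the chat logic ships with the model repo.
    model = AutoModel.from_pretrained(
        model_id, trust_remote_code=True, torch_dtype=torch.bfloat16
    )
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    return model.eval(), tokenizer


def ask(model, tokenizer, images: Sequence[Any], question: str) -> str:
    """Send one question about the given images through the model's chat function."""
    # Assumed call shape: images travel inside msgs, so image is passed as None.
    return model.chat(image=None, msgs=build_msgs(images, question), tokenizer=tokenizer)
```

A typical session would call load_model() once, move the model to a GPU if one is available, open images with PIL (for example Image.open(path).convert("RGB")), and pass them to ask together with a question in any supported language. For video, the sampled frames take the place of the image list.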
