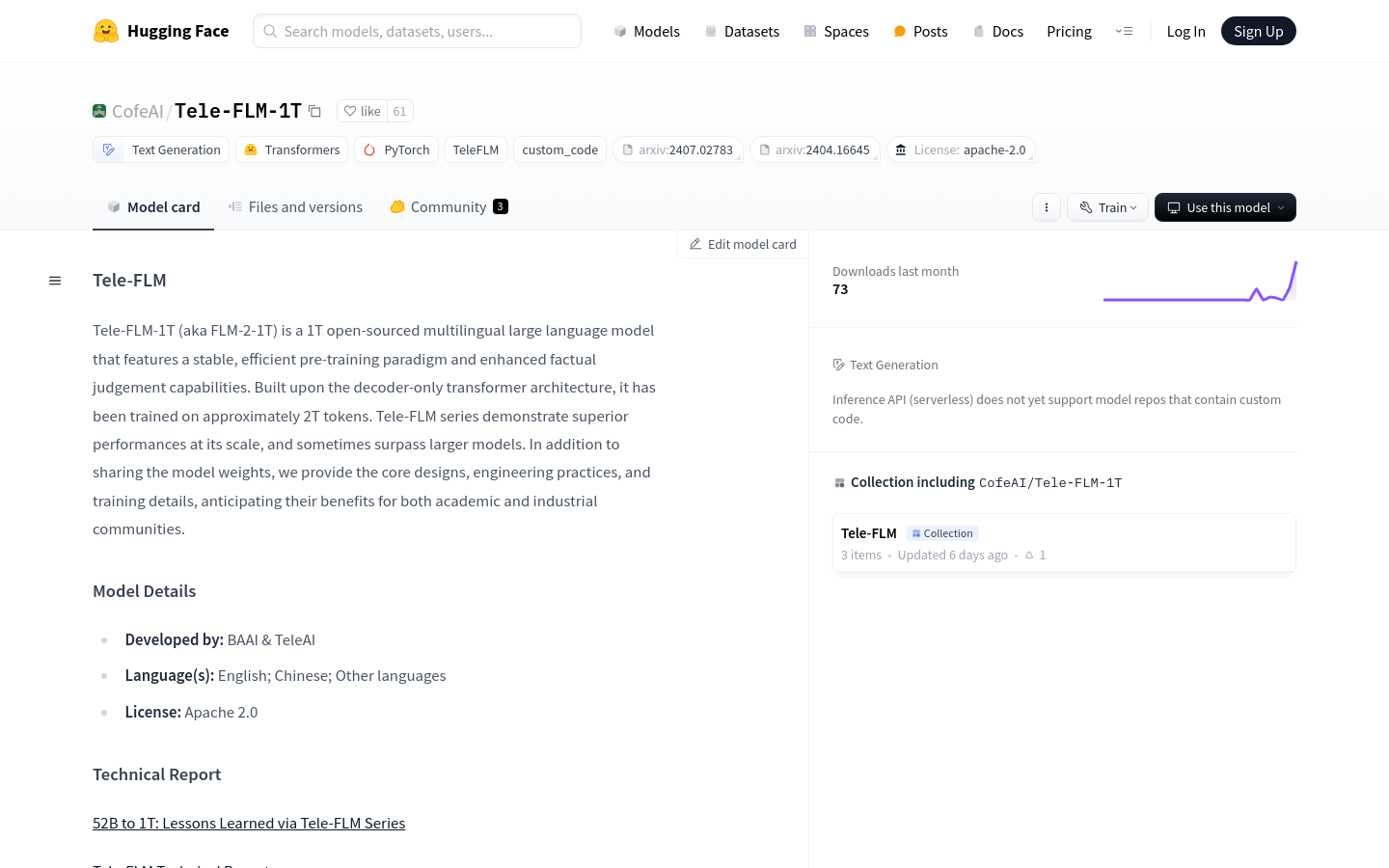

Tele-FLM-1T

Overview

Tele-FLM-1T is an open-source multilingual large language model with 1 trillion parameters. It uses a decoder-only Transformer architecture and was trained on approximately 2 trillion tokens. It is reported to perform strongly for its scale, in some cases surpassing larger models. Alongside the model weights, the release documents the core design, engineering practices, and training details, with the aim of benefiting both academic and industrial communities.

Target Users

Researchers and developers who need a large language model for tasks such as text generation, machine translation, and question answering.

Use Cases

Generating high-quality multilingual text.

Serving as the core model of a multilingual machine translation system.

Answering questions accurately in a question-answering system.

Features

Trained in three stages, at 52B, 102B, and 1T parameters, using model growth techniques.

Utilizes a standard GPT-style decoder-only Transformer architecture with several enhancements.

Uses Rotary Positional Embedding (RoPE) for position encoding, RMSNorm for normalization, and the SwiGLU activation function.

Compatible with the Llama architecture, with minimal code adjustments required.

Trained on a cluster of 112 A800 SXM4 GPU servers (896 GPUs in total), each with 8 NVLink-connected A800 GPUs and 2 TB of RAM.

Employs 3D parallel training, combining data parallelism, tensor parallelism, and pipeline parallelism.

Provides model weights and training details to facilitate community usage and research.
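The three architectural components named above (RMSNorm, SwiGLU, and RoPE) can be illustrated with a minimal NumPy sketch. This is not the Tele-FLM-1T implementation: the function names, the `eps` value, the RoPE base of 10000, and the Llama-style "rotate-half" layout are all assumptions made for illustration.

```python
import numpy as np


def rms_norm(x, weight, eps=1e-6):
    """RMSNorm: rescale by the root mean square of the hidden axis (no mean subtraction)."""
    rms = np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)
    return x / rms * weight


def silu(x):
    """SiLU (swish) activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward block: a SiLU-gated projection followed by a down projection."""
    return (silu(x @ w_gate) * (x @ w_up)) @ w_down


def rope(x, base=10000.0):
    """Rotary positional embedding for x of shape (seq_len, head_dim), head_dim even.

    Assumed layout: the first half of the channels is paired with the second half.
    """
    seq_len, dim = x.shape
    half = dim // 2
    inv_freq = base ** (-np.arange(half) / half)       # one frequency per channel pair
    angles = np.outer(np.arange(seq_len), inv_freq)    # (seq_len, half)
    cos, sin = np.cos(angles), np.sin(angles)
    x1, x2 = x[:, :half], x[:, half:]
    # Rotate each (x1, x2) pair by a position-dependent angle.
    return np.concatenate([x1 * cos - x2 * sin, x1 * sin + x2 * cos], axis=-1)
```

Because RoPE only rotates channel pairs, it leaves the norm of each position's vector unchanged, and position 0 passes through unmodified. Both properties are easy to check on random input.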

How to Use

1. Visit the Hugging Face model hub and locate the Tele-FLM-1T model.

2. Read the model card to understand the detailed information and usage limitations.

3. Download the model weights and related code.

4. Adapt the model to your task, for example by fine-tuning, following the published engineering practices and training details.

5. Deploy the model locally or in a cloud environment for training or inference.

6. Use the model for text generation or other NLP tasks.

7. Share your experiences and feedback to promote community development.
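The download and deployment steps above can be sketched in Python, assuming the weights are published in a format that the Hugging Face `transformers` Auto classes can load. The repository id below is a placeholder assumed for illustration, and whether `trust_remote_code=True` is needed depends on the model card, so treat both as assumptions. The sizing helper is included because a model of this size will not fit on a single machine's accelerators; it counts raw weights only and ignores activations and the KV cache.

```python
def weight_memory_gib(n_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed for the raw weights alone, in GiB."""
    return n_params * bytes_per_param / 2**30


def load_tele_flm(repo_id: str = "CofeAI/Tele-FLM-1T"):
    """Load tokenizer and model via the generic Auto classes.

    The default repo_id is an assumed placeholder; confirm it on the model card.
    """
    # Imported lazily so the sizing helper works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(repo_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        repo_id,
        trust_remote_code=True,  # assumption: the repo may ship custom model code
        torch_dtype="auto",      # keep the dtype stored in the checkpoint
        device_map="auto",       # shard across available devices (needs accelerate)
    )
    return tokenizer, model


if __name__ == "__main__":
    # 1e12 parameters at 2 bytes each (16-bit weights): about 1,863 GiB.
    print(f"{weight_memory_gib(1e12):,.0f} GiB for the weights alone")
```

Running the sizing check before attempting a download helps decide how many nodes a deployment needs.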
