HoloDreamer

Overview

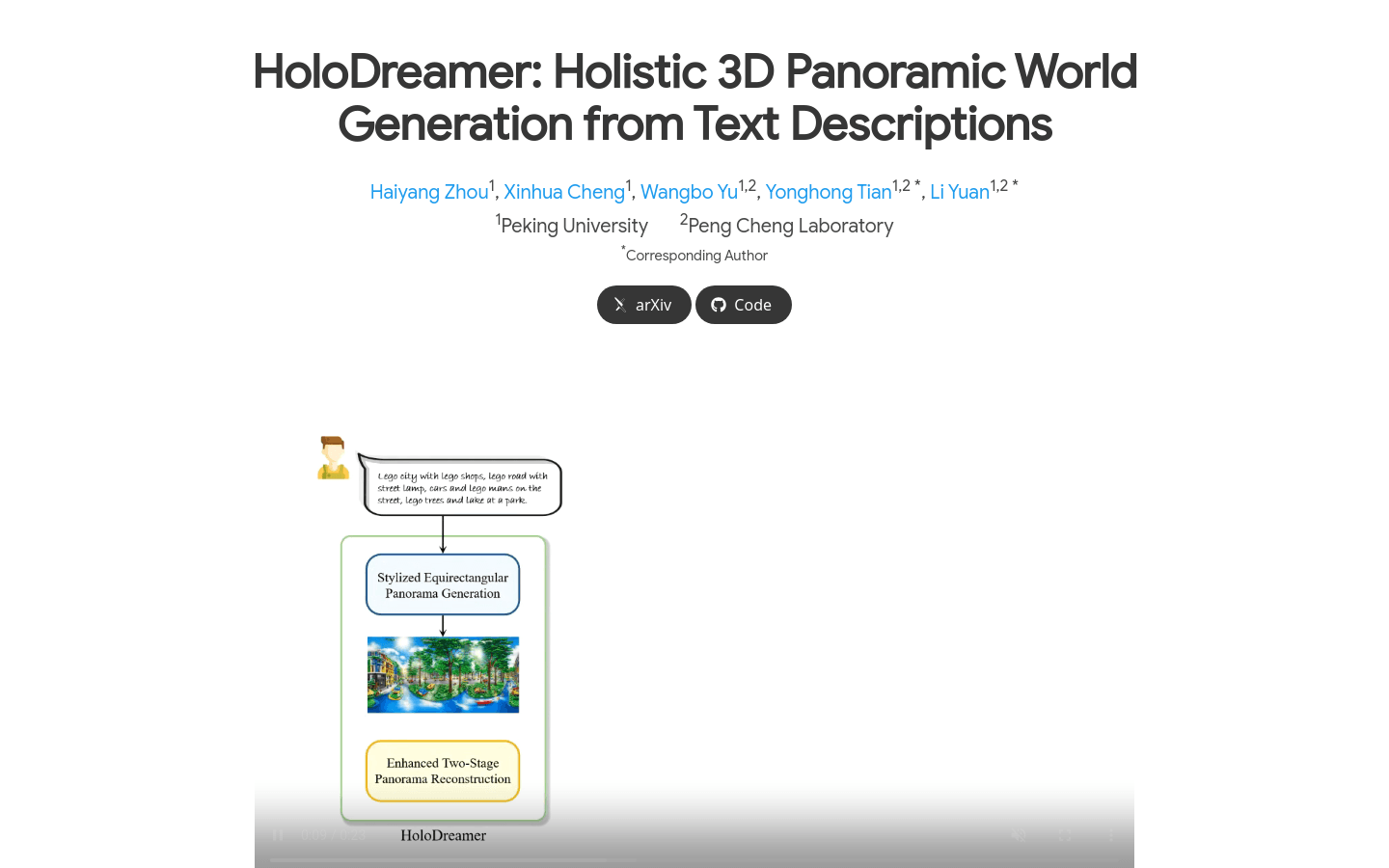

HoloDreamer is a text-driven 3D scene generation framework that produces immersive, view-consistent, fully enclosed 3D scenes. It consists of two core modules: stylized equirectangular panorama generation and enhanced two-phase panorama reconstruction. The framework first generates a high-definition panoramic image that serves as a complete initialization for the 3D scene. It then quickly reconstructs the scene with 3D Gaussian Splatting (3D-GS), yielding a view-consistent, fully enclosed result. HoloDreamer's main advantages are strong visual consistency and harmony, along with robust reconstruction and rendering quality.

Target Users

HoloDreamer is aimed at professionals in the virtual reality, gaming, and film industries, all of which rely on high-quality 3D scene generation to enhance the user experience. Because HoloDreamer builds 3D scenes quickly from text prompts, it offers a fast, flexible, and cost-effective option for rapid prototyping and scene previews.

Use Cases

In virtual reality, designers can use HoloDreamer to rapidly generate a virtual city for preliminary design and testing.

Game developers can utilize HoloDreamer to create unique gaming environments that enhance immersion and visual appeal.

Filmmakers can use HoloDreamer to generate detailed film scenes for previews and revisions, which helps them refine the final visual effects.

Features

Stylized Equirectangular Panorama Generation: Combines multiple diffusion models to generate stylized, detailed equirectangular panoramas from complex text prompts.

Enhanced Two-Phase Panorama Reconstruction: Estimates depth for the panorama and projects the resulting RGBD data into point clouds, using both primary and auxiliary cameras for projection and rendering.

3D Gaussian Splatting (3D-GS): Rapidly reconstructs the 3D scene and improves its integrity.

Multi-View Supervision: Utilizes 2D diffusion models to generate initial local images, progressively constructing the scene to improve global consistency.

Seamless Panorama Rotation: Applies circular blending to avoid visible seams when the panorama is rotated.

Two-Phase Optimization: In the transfer optimization phase, refines the rendered images of the reconstructed scene and further optimizes 3D-GS to produce the final scene.

High-Definition Panorama Initialization: Generates high-definition panoramic images as the complete initialization for 3D scenes, enhancing reconstruction quality and consistency.
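An equirectangular panorama wraps around horizontally, so rotating it about the vertical axis is just a circular shift of its columns, and any mismatch between the left and right edges becomes a visible seam once that edge moves into view. The sketch below illustrates the idea behind seamless rotation. `circular_blend` and `rotate_panorama` are hypothetical helpers, and the linear cross-fade toward the mean of mirrored edge columns is an assumed stand-in, not HoloDreamer's actual circular blending procedure.

```python
import numpy as np


def circular_blend(pano: np.ndarray, width: int) -> np.ndarray:
    """Smooth the wrap-around seam of an equirectangular panorama (hypothetical helper).

    Column i (counted from the left edge) and column W-1-i (counted from the right
    edge) are pulled toward their mean. The blend weight is 1.0 at the seam and
    falls linearly toward 0 over `width` columns, so after blending the two seam
    columns are identical while the interior of the image is left untouched.
    """
    w = pano.shape[1]
    if not 0 < width <= w // 2:
        raise ValueError("width must be in the range (0, W // 2]")
    out = pano.astype(np.float64)  # astype returns a copy; the input is not modified
    for i in range(width):
        alpha = 1.0 - i / width  # 1.0 at the seam, decreasing toward the interior
        left = out[:, i].copy()
        right = out[:, w - 1 - i].copy()
        mean = 0.5 * (left + right)
        out[:, i] = (1.0 - alpha) * left + alpha * mean
        out[:, w - 1 - i] = (1.0 - alpha) * right + alpha * mean
    return out


def rotate_panorama(pano: np.ndarray, degrees: float) -> np.ndarray:
    """Rotate an equirectangular panorama about the vertical axis.

    A yaw rotation is a horizontal circular shift of the image columns.
    """
    w = pano.shape[1]
    shift = int(round(degrees / 360.0 * w))
    return np.roll(pano, shift, axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pano = rng.random((64, 128, 3))  # random stand-in for a generated panorama
    blended = circular_blend(pano, width=8)
    rotated = rotate_panorama(blended, 90.0)  # the former seam now lies inside the image
    print(np.abs(blended[:, 0] - blended[:, -1]).max())
```

Blending before rotating means the shift itself never has to invent pixels: `np.roll` only reorders columns, so all seam handling stays in one place.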

How to Use

1. Input Text Description: Users provide textual descriptions to define the 3D scene.

2. Generate Panoramic Images: HoloDreamer uses multiple diffusion models to create stylized, detailed equirectangular panoramas.

3. Depth Estimation: Perform depth estimation on the generated panoramic images to produce RGBD data.

4. Point Cloud Generation: Project the RGBD data into 3D space to create a point cloud.

5. 3D Scene Reconstruction: Quickly reconstruct the 3D scene using 3D Gaussian Splatting (3D-GS).

6. Two-Phase Optimization: In the transfer optimization phase, refine the rendered images of the reconstructed scene and optimize 3D-GS to produce the final scene.

7. Rendering and Output: The finished 3D scene can be used in virtual reality, gaming, or filmmaking.
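Steps 3 to 5 can be illustrated with a minimal NumPy sketch that turns an equirectangular RGBD panorama into a colored point cloud and then seeds one Gaussian per point. It rests on several assumptions not stated in the source: the camera sits at the origin with the y axis up, the depth map (from whatever depth estimator is used; none is named here) stores distance along each viewing ray rather than planar z-depth, and the Gaussian initialization is loosely modeled on the original 3D Gaussian Splatting recipe (scale from the mean distance to the nearest neighbors, identity rotation, low opacity) rather than taken from HoloDreamer. `equirect_to_points` and `init_gaussians` are hypothetical names.

```python
import numpy as np


def equirect_to_points(rgb: np.ndarray, depth: np.ndarray):
    """Back-project an equirectangular RGBD panorama into a colored point cloud.

    Assumed convention: camera at the origin, y axis up, z axis at the image centre,
    and `depth` is the distance along each viewing ray.
    rgb: (H, W, 3), depth: (H, W). Returns points (H*W, 3) and colors (H*W, 3).
    """
    h, w = depth.shape
    u = (np.arange(w) + 0.5) / w   # horizontal pixel centres in (0, 1)
    v = (np.arange(h) + 0.5) / h   # vertical pixel centres in (0, 1)
    lon = (u - 0.5) * 2.0 * np.pi  # longitude in (-pi, pi)
    lat = (0.5 - v) * np.pi        # latitude in (-pi/2, pi/2); top row is positive
    lon, lat = np.meshgrid(lon, lat)  # both (H, W)
    dirs = np.stack([np.cos(lat) * np.sin(lon),
                     np.sin(lat),
                     np.cos(lat) * np.cos(lon)], axis=-1)  # unit ray directions
    points = dirs * depth[..., None]  # scale each ray by its depth
    return points.reshape(-1, 3), rgb.reshape(-1, 3)


def init_gaussians(points: np.ndarray, colors: np.ndarray, k: int = 3) -> dict:
    """Seed one isotropic Gaussian per point (sketch of a 3D-GS-style initialization).

    The neighbour search is brute force, O(N^2) in memory and time: fine for a toy
    example, far too slow for a full-resolution panorama.
    """
    n = len(points)
    if n <= k:
        raise ValueError("need more than k points")
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)  # pairwise squared distances
    np.fill_diagonal(dist2, np.inf)  # ignore each point's distance to itself
    knn = np.sort(dist2, axis=1)[:, :k]  # k smallest squared distances per point
    scale = np.sqrt(np.maximum(knn.mean(axis=1), 1e-7))
    return {
        "means": points.copy(),
        "scales": np.repeat(scale[:, None], 3, axis=1),  # isotropic to start
        "rotations": np.tile([1.0, 0.0, 0.0, 0.0], (n, 1)),  # identity quaternions (w, x, y, z)
        "opacities": np.full(n, 0.1),
        "colors": colors.astype(np.float64),
    }


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    rgb = rng.random((8, 16, 3))
    depth = np.full((8, 16), 2.0)  # every ray hits a sphere of radius 2
    pts, cols = equirect_to_points(rgb, depth)
    gaussians = init_gaussians(pts, cols)
    print(pts.shape, gaussians["scales"].shape)
```

The two optimization phases of step 6 are not sketched, since they need a differentiable Gaussian rasterizer and the diffusion-based refinement that the source describes only at a high level.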

Featured AI Tools

Stable Fast 3D

Stable Fast 3D (SF3D) is a large reconstruction model based on TripoSR that creates textured, UV-unwrapped 3D mesh assets from a single image of an object. It produces a 3D model in under a second, with a low polygon count, UV unwrapping, and textures, which makes the output easy to use in downstream applications such as game engines and rendering pipelines. The model also predicts material parameters (roughness and metallic) for each object, improving reflective behavior during rendering. SF3D suits fields that need rapid 3D modeling, such as game development and visual effects production.

Toy Box Flux

Toy Box Flux is an AI image generation model that produces 3D-render-style images. It was created by merging existing 3D LoRA models with the weights of the Coloring Book Flux LoRA, which gives it a distinctive style. The model excels at toy design images in this style and performs especially well on objects and human subjects, while results for animals are less consistent because of limited training data. It also makes indoor 3D renderings more realistic. A planned version 2 aims to improve style consistency by blending in more generated and pre-existing outputs.
