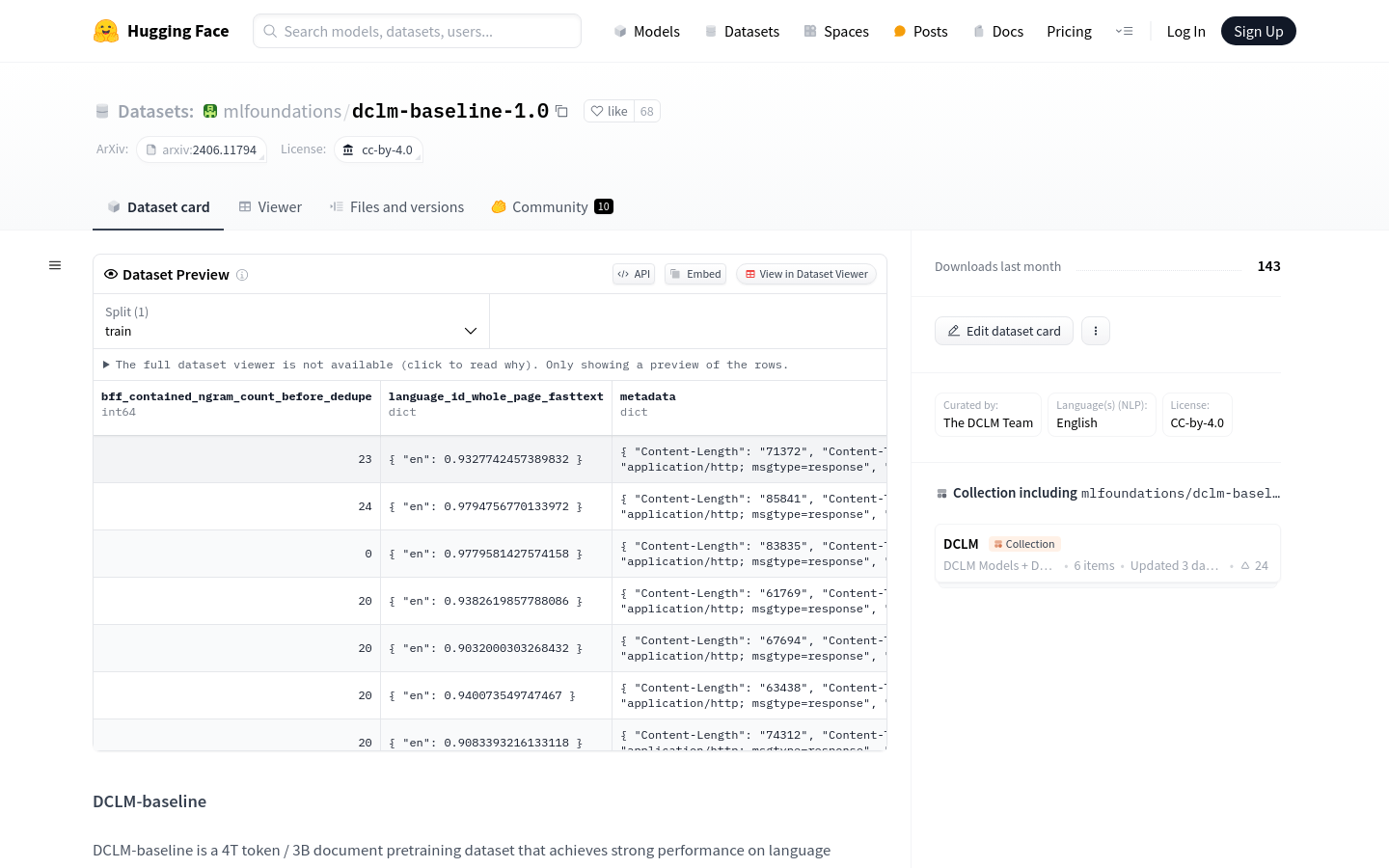

DCLM Baseline

Overview

DCLM-baseline is a pretraining dataset for language model benchmarking, containing 4T tokens across 3B documents. It is curated from Common Crawl through a planned sequence of data cleaning, filtering, and deduplication steps, and aims to demonstrate how much data curation matters when training efficient language models. The dataset is intended for research only: it should not be used in production environments or to train domain-specific models, such as those for code and mathematics.
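To make the cleaning, filtering, and deduplication stages concrete, here is a minimal Python sketch. It is a toy illustration only and not the actual DCLM pipeline: the function names, the whitespace normalization, the `min_words` threshold, and exact-match hashing are all assumptions chosen for brevity.

```python
import hashlib
import re
from typing import Iterable, Iterator


def clean(text: str) -> str:
    """Toy cleaning step: collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def keep(text: str, min_words: int = 5) -> bool:
    """Toy quality filter: keep documents with at least `min_words` words."""
    return len(text.split()) >= min_words


def curate(docs: Iterable[str], min_words: int = 5) -> Iterator[str]:
    """Clean, filter, then drop exact duplicates (hypothetical pipeline)."""
    seen = set()
    for raw in docs:
        text = clean(raw)
        if not keep(text, min_words):
            continue
        # Exact-match deduplication on a hash of the lowercased text.
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        yield text


if __name__ == "__main__":
    sample = [
        "The  quick brown fox jumps over the lazy dog.",
        "the quick brown fox jumps over the lazy dog.",  # duplicate once cleaned and lowercased
        "Too short.",  # removed by the length filter
    ]
    print(list(curate(sample)))
```

A web-scale pipeline would likely need model-based quality filtering and approximate (fuzzy) deduplication rather than these simple rules; the sketch only shows how the three stages compose.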

Target Users

DCLM-baseline is intended for researchers and developers in natural language processing who want to train and evaluate language models, particularly for benchmarking. It is especially suitable for research projects that need large amounts of training data. Because it is a benchmark dataset, it is not meant for production environments or for training domain-specific models, such as those for code and mathematics.

Use Cases

Researchers use DCLM-baseline to train their own language models and measure the results on multiple benchmarks.

Educational institutions use it as a teaching resource to help students understand how language models are built and trained.

Companies use it to test the performance of their natural language processing products.

Features

High-performance dataset for language model benchmarking

Contains a large number of tokens and documents, suitable for large-scale training

Clean, filtered, and deduplicated data, ensuring data quality

Provides a benchmark for studying language model performance

Not suitable for production environments or domain-specific model training

Helps researchers understand the impact of data curation on model performance

Contributes to the research and development of efficient language models

How to Use

Step 1: Visit the Hugging Face website and search for the DCLM-baseline dataset.

Step 2: Read the dataset description and usage guidelines to understand the structure and features of the dataset.

Step 3: Download the dataset and prepare the necessary computing resources for model training.

Step 4: Train language models on the dataset, and monitor the training process and model performance.

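Step 5: After training, evaluate and test the model. Because DCLM-baseline is a pretraining corpus, this typically means using held-out data or separate downstream benchmarks rather than the training documents themselves.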

Step 6: Analyze the test results and adjust model parameters or training strategies as needed.

Step 7: Apply the trained model to real-world problems or further research.
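Steps 1 to 3 can be sketched in Python with the Hugging Face `datasets` library, streaming records instead of downloading the full corpus up front. This is a sketch under assumptions: the repository id `mlfoundations/dclm-baseline-1.0` and the `text` field name should be checked against the dataset page, `preview` and `whitespace_token_count` are hypothetical helpers, and counting whitespace-separated words only roughly approximates a real tokenizer.

```python
from itertools import islice
from typing import Dict, Iterable, List

# Assumed repository id; confirm it on the dataset page before use.
DATASET_ID = "mlfoundations/dclm-baseline-1.0"


def stream_records(dataset_id: str = DATASET_ID):
    """Return a lazy iterable over records without downloading everything."""
    # Imported here so the helpers below work without `datasets` installed.
    from datasets import load_dataset

    return load_dataset(dataset_id, split="train", streaming=True)


def preview(records: Iterable[Dict], n: int = 3, text_field: str = "text") -> List[str]:
    """Collect the text of the first `n` records (`text_field` is an assumption)."""
    return [record[text_field] for record in islice(records, n)]


def whitespace_token_count(texts: Iterable[str]) -> int:
    """Rough size estimate: count whitespace-separated words."""
    return sum(len(text.split()) for text in texts)


if __name__ == "__main__":
    docs = preview(stream_records(), n=3)
    print(f"{len(docs)} documents, ~{whitespace_token_count(docs)} words")
```

Streaming keeps memory and disk use small while you inspect the data; for a full training run you would likely shard and cache the data according to your own infrastructure.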
