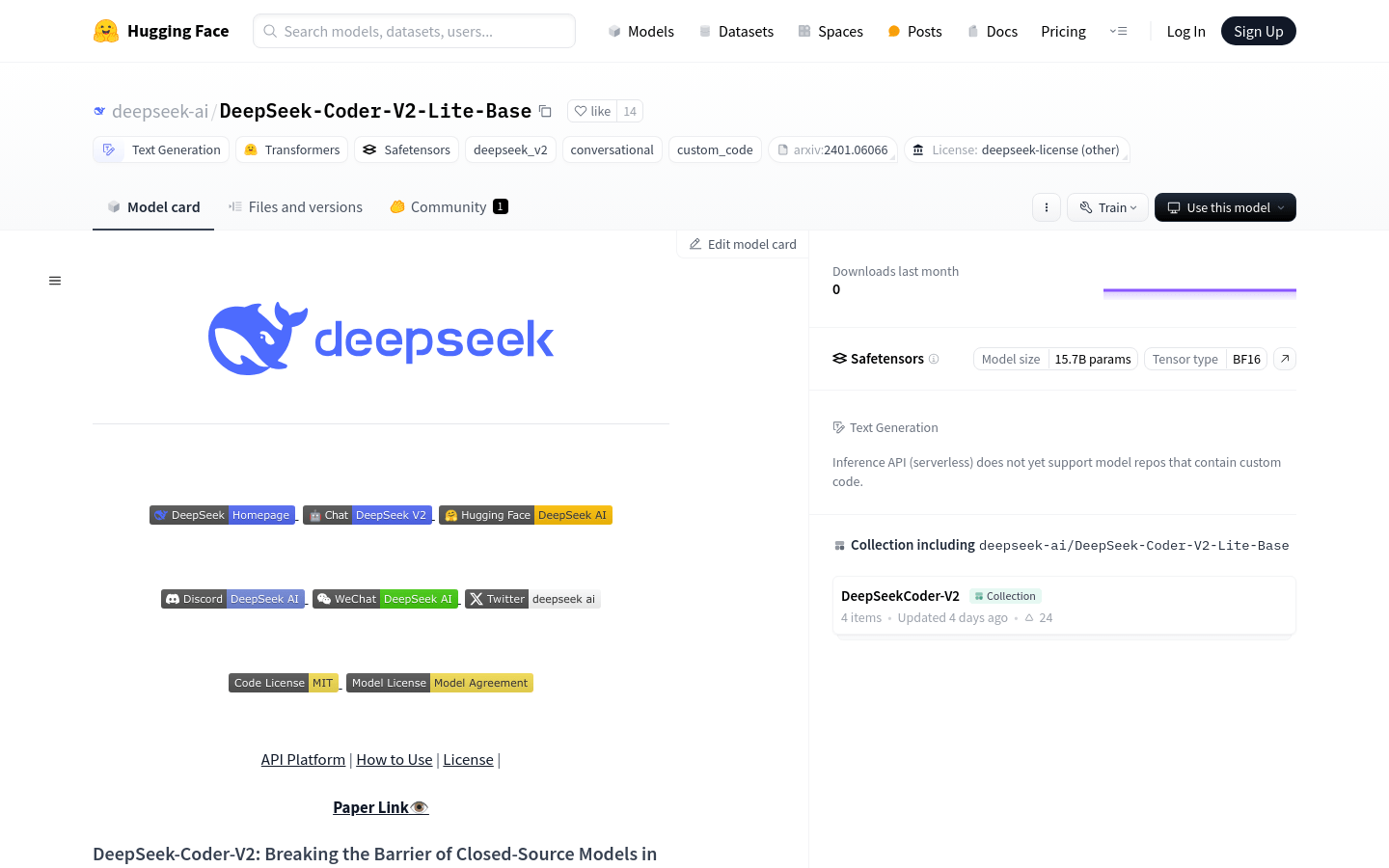

DeepSeek-Coder-V2-Lite-Base

Overview

DeepSeek-Coder-V2 is an open-source Mixture-of-Experts (MoE) code language model. It achieves performance comparable to GPT-4 Turbo on code-specific tasks while maintaining strong performance on general language tasks. Compared to DeepSeek-Coder-33B, the V2 version shows significant improvements in code-related tasks and reasoning. It also expands programming language support from 86 to 338 languages and extends the context length from 16K to 128K tokens.

Target Users

Targeted at developers, programming educators, and researchers, DeepSeek-Coder-V2-Lite-Base can be utilized for code generation, teaching assistance, and research purposes.

Use Cases

Developers leverage the model to rapidly generate code for a sorting algorithm.

Programming educators utilize the model as a teaching aid, demonstrating code implementation processes.

Researchers employ the model for experiments and evaluations of code generation tasks.

Features

Code Completion: Automatically completes code snippets based on user input.

Code Insertion: Inserts new code fragments into existing code to achieve specific functionalities.

Chat Completion: Supports conversation with users and generates code based on the dialogue content.

Multilingual Support: Extends support for programming languages from 86 to 338, catering to diverse programming needs.

Long Context Handling: Increases the context length from 16K to 128K, enabling processing of longer code sequences.

API Platform Compatibility: Offers an OpenAI-compatible API for convenient developer usage.

Local Running Support: Provides example code for running model inference locally with Hugging Face Transformers.
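The code insertion feature listed above is typically driven by a fill-in-the-middle (FIM) prompt: the code before and after the gap is wrapped in sentinel tokens, and the model generates the missing middle. The sketch below shows one way to build such a prompt. It assumes the sentinel strings shown in the DeepSeek-Coder-V2 model card; the helper name `build_fim_prompt` is hypothetical, and the token strings should be checked against the tokenizer you actually download.

```python
# Sentinel tokens as shown in the DeepSeek-Coder-V2 model card (assumption:
# verify them against the tokenizer's special tokens before relying on them).
FIM_BEGIN = "<｜fim▁begin｜>"
FIM_HOLE = "<｜fim▁hole｜>"
FIM_END = "<｜fim▁end｜>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Wrap the code before and after the gap in fill-in-the-middle sentinels."""
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}"


# Code before the gap and code after it; the model is asked to fill what is missing.
prefix = "def add(a, b):\n    "
suffix = "\n    return result\n"
prompt = build_fim_prompt(prefix, suffix)
```

The resulting `prompt` string is passed to the model like any other completion prompt; the generated continuation is the text intended for the hole.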

How to Use

1. Visit the Hugging Face model page and download the DeepSeek-Coder-V2-Lite-Base model.

2. Install the Hugging Face Transformers library for model loading and inference.

3. Use the provided example code to test code completion, code insertion, or chat completion.

4. Adjust input parameters, such as max_length and top_p, as needed to achieve desired generation results.

5. Employ the model-generated code for further development or educational purposes.

6. Call the model remotely through DeepSeek's API platform to integrate it into your own applications.
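Steps 1 to 4 can be sketched roughly as follows. This is a minimal illustration, not the official example: it assumes the Hugging Face model ID `deepseek-ai/DeepSeek-Coder-V2-Lite-Base`, that `torch` and `transformers` are installed, and that enough memory is available to load the weights. The helper names `generation_settings` and `complete` and the default parameter values are hypothetical.

```python
def generation_settings(max_length: int = 128, top_p: float = 0.95) -> dict:
    """Collect sampling parameters for `model.generate` (values are illustrative)."""
    if max_length <= 0:
        raise ValueError("max_length must be positive")
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in (0, 1]")
    # do_sample=True is needed for top_p to have any effect.
    # Note: max_length counts the prompt tokens plus the generated tokens.
    return {"max_length": max_length, "top_p": top_p, "do_sample": True}


def complete(prompt: str,
             model_id: str = "deepseek-ai/DeepSeek-Coder-V2-Lite-Base",
             **overrides) -> str:
    """Load the model (downloaded on first use) and generate a completion."""
    # Imported lazily so generation_settings works without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, trust_remote_code=True, torch_dtype=torch.bfloat16
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    outputs = model.generate(**inputs, **generation_settings(**overrides))
    return tokenizer.decode(outputs[0], skip_special_tokens=True)


# Adjusting the parameters mentioned in step 4; no model is loaded here.
settings = generation_settings(max_length=256, top_p=0.9)
```

Calling `complete("#write a quick sort algorithm", max_length=128)` would then download the weights if needed and return the prompt followed by the generated code. Lower `top_p` values make the output more conservative, while a larger `max_length` allows longer completions.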

Featured AI Tools

Screenshot To Code

Screenshot-to-code is a simple application that uses GPT-4 Vision to generate code and DALL-E 3 to generate similar images. The application has a React/Vite frontend and a FastAPI backend. You will need an OpenAI API key with access to the GPT-4 Vision API.

AI code generation

972.9K

CodeGemma

CodeGemma is a family of large language models released by Google that specializes in code generation, code understanding, and instruction following. It aims to provide developers worldwide with high-quality code assistance. The family includes a 2 billion parameter base model, a 7 billion parameter base model, and a 7 billion parameter instruction-tuned model, all optimized and fine-tuned for code development scenarios. It performs well across a range of programming languages and shows strong logical and mathematical reasoning abilities.

AI code generation

329.8K