Gemma 2B 10M

Overview

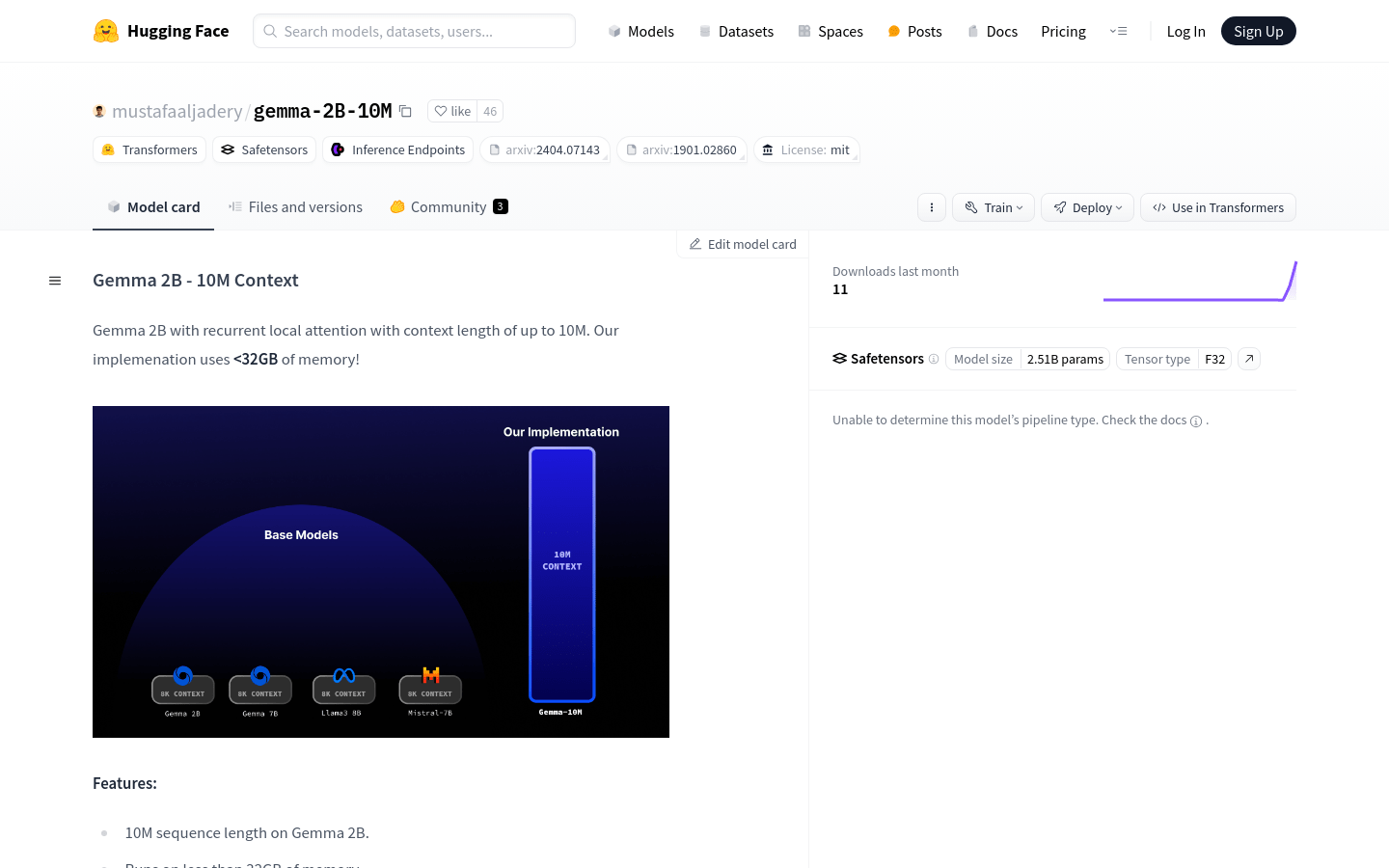

Gemma 2B - 10M Context is a large language model that uses an optimized attention mechanism to process sequences of up to 10M tokens in less than 32GB of memory. It employs recurrent localized attention, an approach inspired by the Transformer-XL paper, which makes it well suited to long-context language tasks.
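To see why fitting 10M tokens in under 32GB is notable, it helps to estimate how large a conventional key/value cache would be at that length. The arithmetic below is a rough sketch, not a measurement: the layer count, KV-head count, and head dimension are assumed from the published base Gemma 2B configuration, 2 bytes per value assumes bfloat16, and the 2048-token window is a purely hypothetical local-attention size.

```python
def kv_cache_bytes(num_tokens, num_layers=18, num_kv_heads=1,
                   head_dim=256, bytes_per_value=2):
    """Estimate KV-cache size in bytes for a decoder-only transformer.

    Defaults are ASSUMED from the base Gemma 2B configuration
    (18 layers, 1 KV head, head dimension 256) with bfloat16 values.
    """
    # Two cached tensors (keys and values) per layer, per KV head, per token.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


full_context = kv_cache_bytes(10_000_000)   # cache every one of 10M tokens
local_window = kv_cache_bytes(2048)         # hypothetical fixed local window

print(f"Full 10M-token cache: {full_context / 1e9:.1f} GB")
print(f"2048-token window:    {local_window / 1e6:.1f} MB")
```

Under these assumptions a full cache would be several times larger than the 32GB budget, which is the gap that a bounded, recurrent attention window is meant to close.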

Target Users

Researchers and developers who need to handle large volumes of text data

Users working on long text generation, summarization, translation, and other language tasks

Enterprise users seeking high performance and resource optimization

Use Cases

Summarize the books in the 'Harry Potter' series using Gemma 2B - 10M Context

Generate abstracts for academic papers in the field of education

Generate product descriptions and market-analysis text for business use

Features

Processes sequences of up to 10M tokens

Runs in less than 32GB of memory, optimizing resource usage

Native inference optimized for CUDA

Recurrent localized attention achieving O(N) memory complexity

Early checkpoint (about 200 training steps), with plans to train on more tokens to improve performance

Utilizes AutoTokenizer and GemmaForCausalLM for text generation
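The recurrent localized attention idea can be illustrated with a toy example. The sketch below is not the model's actual implementation: it assumes a Transformer-XL-style scheme in which the sequence is processed in fixed-size blocks and each block attends only to itself and a cached copy of the previous block. The names `attend`, `recurrent_local_attention`, and `block_size` are hypothetical, and the raw token vectors serve as queries, keys, and values with no learned projections.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def recurrent_local_attention(tokens, block_size):
    """Attend block by block, carrying only the previous block as memory.

    Returns the output vectors and the largest number of key/value
    vectors that had to be held at any one time.
    """
    memory = []        # previous block, carried across iterations
    outputs = []
    peak_cached = 0
    for start in range(0, len(tokens), block_size):
        block = tokens[start:start + block_size]
        context = memory + block
        peak_cached = max(peak_cached, len(context))
        for i, query in enumerate(block):
            # Causal mask: see the memory plus positions up to and including i.
            visible = context[:len(memory) + i + 1]
            outputs.append(attend(query, visible, visible))
        memory = block  # older blocks are discarded
    return outputs, peak_cached


tokens = [[float(i), 1.0] for i in range(100)]
outputs, peak_cached = recurrent_local_attention(tokens, block_size=8)
print(len(outputs), peak_cached)
```

In this sketch the attention working set never exceeds twice `block_size`, however long the input is, so total memory grows linearly with the number of tokens instead of quadratically.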

How to Use

Step 1: Download the Gemma 2B - 10M Context model from Hugging Face

Step 2: Edit the inference code in main.py to use your own prompt text

Step 3: Load the model tokenizer using AutoTokenizer.from_pretrained

Step 4: Load the model using GemmaForCausalLM.from_pretrained, specifying torch.bfloat16 as the data type

Step 5: Set the prompt text, for example, 'Summarize this harry potter book...'

Step 6: Generate text using the generate function with gradient calculation disabled (torch.no_grad)

Step 7: Print the generated text to view the results
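Steps 3 to 7 might look roughly like the sketch below. It makes several assumptions: it uses the stock `AutoTokenizer` and `GemmaForCausalLM` classes and the built-in `model.generate` method from the `transformers` library as stand-ins for whatever main.py defines, so it may not reproduce the project's long-context attention. The function name `run_inference`, the `model_dir` parameter, and the 512-token default are hypothetical.

```python
def run_inference(model_dir, prompt_text, max_new_tokens=512):
    """Load the tokenizer and model from `model_dir` and generate text.

    `model_dir` is a hypothetical local path (or hub ID) for the
    checkpoint downloaded in Step 1.
    """
    # Imported lazily so this file can be loaded without the heavy dependencies.
    import torch
    from transformers import AutoTokenizer, GemmaForCausalLM

    # Step 3: load the tokenizer.
    tokenizer = AutoTokenizer.from_pretrained(model_dir)

    # Step 4: load the model weights in bfloat16.
    model = GemmaForCausalLM.from_pretrained(model_dir, torch_dtype=torch.bfloat16)

    # Step 5: tokenize the prompt and move it to the model's device.
    inputs = tokenizer(prompt_text, return_tensors="pt").to(model.device)

    # Step 6: generate without tracking gradients.
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Step 7: decode the generated token IDs so the caller can print them.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A call such as `print(run_inference("path/to/gemma-2b-10m", "Summarize this harry potter book..."))` would then display the result, where the path is a placeholder for wherever the checkpoint was saved.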
