TensorDock

Overview

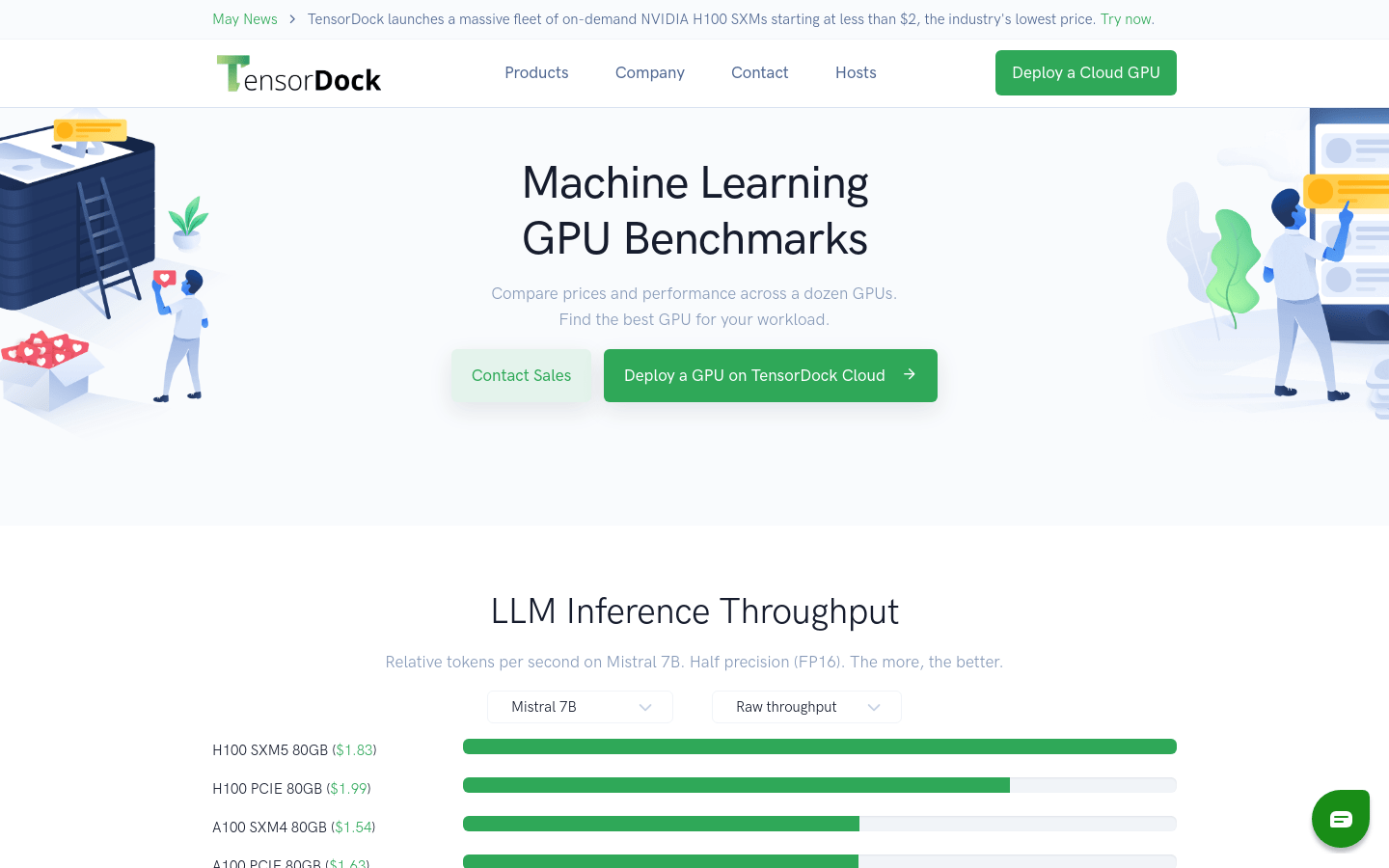

TensorDock is a cloud service provider built for workloads that demand high reliability. It offers a range of GPU servers, including NVIDIA H100 SXMs, and cost-effective virtual machine infrastructure for deep learning, AI, and rendering. TensorDock also provides fully managed container hosting with OS-level monitoring, auto-scaling, and load balancing, backed by enterprise support.

Target Users

Deep learning researchers and data scientists: TensorDock's GPU cloud service is designed for large-scale model training and data analysis.

AI developers: Use TensorDock's services to deploy and test AI models quickly.

Rendering professionals: TensorDock offers the high-performance GPUs needed for image and video rendering.

Research institutions and universities: TensorDock's CPU and GPU cloud services are well suited to scientific research and educational activities.

Use Cases

Using TensorDock's GPU cloud service for large-scale neural network training.

Deploying machine learning models using TensorDock's container hosting service.

Performing complex scientific computations and data analysis through TensorDock's CPU cloud service.

Features

NVIDIA H100 SXMs and other high-performance GPU servers

Support for deep learning, AI, and rendering workloads

Fully managed container hosting services with OS-level monitoring and load balancing

Auto-scaling to adapt to diverse workload demands

CPU cloud services with Intel Xeon and AMD EPYC processors

Cost-effective GPU cloud services suitable for scientific and high-performance computing workloads

Detailed GPU performance benchmarking to help users choose the most suitable GPU
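
As a rough illustration of how benchmark results can guide GPU selection, the sketch below filters candidate GPUs by required memory and ranks the rest by benchmark throughput per dollar. The `GpuOption` type, the `best_value` helper, and every name, price, and score in `OPTIONS` are hypothetical placeholders, not TensorDock data or any TensorDock API; substitute figures from the published benchmarks and current pricing.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class GpuOption:
    """One candidate GPU. All values used below are illustrative placeholders."""
    name: str
    vram_gb: int
    hourly_usd: float   # placeholder hourly price, not a real quote
    throughput: float   # placeholder benchmark score (higher is better)


def best_value(options: List[GpuOption], min_vram_gb: int) -> Optional[GpuOption]:
    """Return the option with the highest throughput per dollar that has enough VRAM."""
    eligible = [o for o in options if o.vram_gb >= min_vram_gb]
    if not eligible:
        return None
    return max(eligible, key=lambda o: o.throughput / o.hourly_usd)


# Hypothetical catalogue; replace with real benchmark and pricing figures.
OPTIONS = [
    GpuOption("gpu-small", vram_gb=16, hourly_usd=0.20, throughput=100.0),
    GpuOption("gpu-medium", vram_gb=24, hourly_usd=0.40, throughput=260.0),
    GpuOption("gpu-large", vram_gb=80, hourly_usd=2.00, throughput=900.0),
]

if __name__ == "__main__":
    choice = best_value(OPTIONS, min_vram_gb=16)
    print(choice.name if choice else "no GPU meets the memory requirement")
```

Filtering on memory first matters because a model that does not fit in VRAM cannot run at any price; value per dollar only becomes meaningful among the options that fit.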

How to Use

Step 1: Visit the TensorDock official website and register an account.

Step 2: Select the appropriate GPU or CPU server based on your workload requirements.

Step 3: Deploy the required server or container and configure it.

Step 4: Upload the required data and applications to the cloud services provided by TensorDock.

Step 5: Begin running deep learning model training, AI model inference, or rendering work.

Step 6: Use TensorDock's monitoring tools to track server performance and resource usage in real time.

Step 7: Adjust resource allocation as needed to optimize workload performance.
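
For Steps 6 and 7, GPU usage can also be checked from inside the server itself. The following is a minimal sketch that assumes an NVIDIA GPU with the standard `nvidia-smi` command-line tool installed; it is generic NVIDIA tooling, not TensorDock's own monitoring interface, and the function names `parse_gpu_stats` and `read_gpu_stats` are illustrative.

```python
import shutil
import subprocess
from typing import Dict, List

# Fields requested from nvidia-smi, in the order they are parsed below.
QUERY = "utilization.gpu,memory.used,memory.total"


def parse_gpu_stats(csv_text: str) -> List[Dict[str, int]]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` output, one GPU per line.

    Assumes every field is numeric; a field reported as "[N/A]" would raise ValueError.
    """
    stats = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        util, used, total = (int(field.strip()) for field in line.split(","))
        stats.append(
            {"util_pct": util, "mem_used_mib": used, "mem_total_mib": total}
        )
    return stats


def read_gpu_stats() -> List[Dict[str, int]]:
    """Query the local GPUs; return an empty list if nvidia-smi is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_stats(result.stdout)


if __name__ == "__main__":
    for index, gpu in enumerate(read_gpu_stats()):
        print(
            f"GPU {index}: {gpu['util_pct']}% utilization, "
            f"{gpu['mem_used_mib']}/{gpu['mem_total_mib']} MiB memory"
        )
```

Persistently low utilization during training often points to a data-loading bottleneck or an oversized instance, which is the kind of signal that informs the resource adjustments in Step 7.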
