VILA

Overview

VILA is a pre-trained visual language model (VLM) that gains video and multi-image understanding through pre-training on large-scale interleaved image-text data. It can be deployed on edge devices using 4-bit AWQ quantization and the TinyChat framework. Key findings behind VILA include: 1) interleaved image-text data is crucial for performance; 2) unfreezing the large language model (LLM) during interleaved image-text pre-training enables in-context learning; 3) re-mixing text-only instruction data is critical for boosting both VLM and text-only performance; 4) token compression allows more video frames to be processed. VILA exhibits appealing capabilities, including video reasoning, in-context learning, visual chain-of-thought, and improved world knowledge.
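
The 4-bit edge deployment mentioned above relies on AWQ (Activation-aware Weight Quantization), which stores weights as 4-bit integers in small groups and, before quantizing, scales up the input channels whose activations are large so that their weights lose less precision. The pure-Python sketch below illustrates only that arithmetic: round-to-nearest 4-bit quantization per group, plus an optional per-channel scale. It is a simplified illustration under stated assumptions, not the AWQ or TinyChat code; the function names, the group size of 4 and the hand-picked scale of 2.0 are hypothetical (AWQ searches for its scales using calibration activations and commonly uses groups of 128 weights).

```python
# Simplified sketch of AWQ-style 4-bit weight quantization (hypothetical helper names).

def quantize_group(values, n_bits=4):
    """Asymmetric round-to-nearest quantization of one group of weights."""
    qmax = 2 ** n_bits - 1                                   # 15 for 4-bit integers
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)    # keep 0.0 representable
    scale = max(hi - lo, 1e-8) / qmax                        # step between integer levels
    zero = round(-lo / scale)                                # integer that represents 0.0
    q = [min(max(round(v / scale) + zero, 0), qmax) for v in values]
    return q, scale, zero


def dequantize_group(q, scale, zero):
    """Map 4-bit integers back to approximate floating-point weights."""
    return [(qi - zero) * scale for qi in q]


def fake_quantize_row(row, group_size=4, channel_scale=None):
    """Quantize and dequantize one weight row, group by group.

    `channel_scale` multiplies each input channel before quantization and is
    divided out afterwards; in AWQ that division is folded into the preceding
    operation, so the layer output changes only by rounding error.
    """
    if channel_scale is None:
        channel_scale = [1.0] * len(row)
    scaled = [w * s for w, s in zip(row, channel_scale)]
    restored = []
    for start in range(0, len(scaled), group_size):
        q, scale, zero = quantize_group(scaled[start:start + group_size])
        restored.extend(dequantize_group(q, scale, zero))
    return [w / s for w, s in zip(restored, channel_scale)]


row = [0.06, -0.5, 0.9, 0.1]                   # channel 0 is assumed to be salient
plain = fake_quantize_row(row)
awq_like = fake_quantize_row(row, channel_scale=[2.0, 1.0, 1.0, 1.0])
print(abs(plain[0] - row[0]), abs(awq_like[0] - row[0]))  # error on channel 0 shrinks
```

Scaling helps in this toy row because the scaled weight still lies inside the group's existing range, so the step size is unchanged while the channel's effective rounding error is divided by the scale; larger scales eventually widen the range and hurt the other weights, which is the trade-off AWQ's scale search balances.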

Target Users

Researchers and developers: Use VILA for research and application development in video understanding and multi-image understanding.

Corporate users: VILA can provide robust technical support for commercial scenarios that require video content analysis and understanding, such as security surveillance and content recommendation.

Education: VILA can serve as a teaching tool to help students understand how visual language models work and where they can be applied.

Use Cases

Automatically annotate and analyze video content using VILA.

Integrate VILA into educational platforms to provide intelligent image and video interpretation functionality.

Apply VILA to intelligent security systems for real-time video surveillance and anomaly detection.

Features

Video understanding: VILA-1.5 adds video understanding capabilities.

Multiple model sizes: Comes in 3B, 8B, 13B, and 40B parameter versions.

Efficient deployment: VILA-1.5 models quantized to 4 bits with AWQ can be deployed efficiently on a range of NVIDIA GPUs.

In-context learning: Unfreezing the LLM during interleaved image-text pre-training enables in-context learning.

Token compression: Compressing visual tokens lets the model process more video frames, enhancing model performance.

Open-source code: All components, including training code, evaluation code, datasets, and model checkpoints, have been open-sourced.

Performance improvement: Techniques such as re-mixing text instruction data significantly boost both VLM and text-only performance.
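
Token compression matters because each frame adds a fixed number of visual tokens to a finite LLM context. The sketch below assumes compression by 2x2 average pooling over a square grid of per-patch token embeddings, then counts how many frames fit in a token budget. The pooling scheme, the 24x24 grid (576 tokens, the patch count of a ViT-L/14 encoder at 336-pixel resolution), the 4,096-token budget and the 256 reserved text tokens are illustrative assumptions, not VILA's documented configuration; `pool_tokens` and `frames_that_fit` are hypothetical helpers.

```python
# Sketch: 2x2 average pooling of visual tokens and the resulting frame budget.

def pool_tokens(grid, factor=2):
    """Average-pool a square grid of token vectors (rows of lists) by `factor` per side."""
    side = len(grid)
    if side % factor:
        raise ValueError("grid side must be divisible by the pooling factor")
    pooled = []
    for i in range(0, side, factor):
        pooled_row = []
        for j in range(0, side, factor):
            block = [grid[i + di][j + dj] for di in range(factor) for dj in range(factor)]
            # element-wise mean of the factor*factor neighbouring token vectors
            pooled_row.append([sum(col) / len(block) for col in zip(*block)])
        pooled.append(pooled_row)
    return pooled


def frames_that_fit(budget_tokens, tokens_per_frame, text_tokens=0):
    """Number of whole frames that fit after reserving room for text tokens."""
    return max(budget_tokens - text_tokens, 0) // tokens_per_frame


side, dim = 24, 8
# toy embeddings: every channel of token (i, j) holds the value i * side + j
grid = [[[float(i * side + j)] * dim for j in range(side)] for i in range(side)]
pooled = pool_tokens(grid)

raw_per_frame = side * side                  # 576 tokens per frame
pooled_per_frame = len(pooled) ** 2          # 144 tokens per frame
print(frames_that_fit(4096, raw_per_frame, text_tokens=256))     # 6
print(frames_that_fit(4096, pooled_per_frame, text_tokens=256))  # 26
```

Pooling trades spatial detail for temporal coverage: in this toy configuration it lets roughly four times as many frames into the same context.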

How to Use

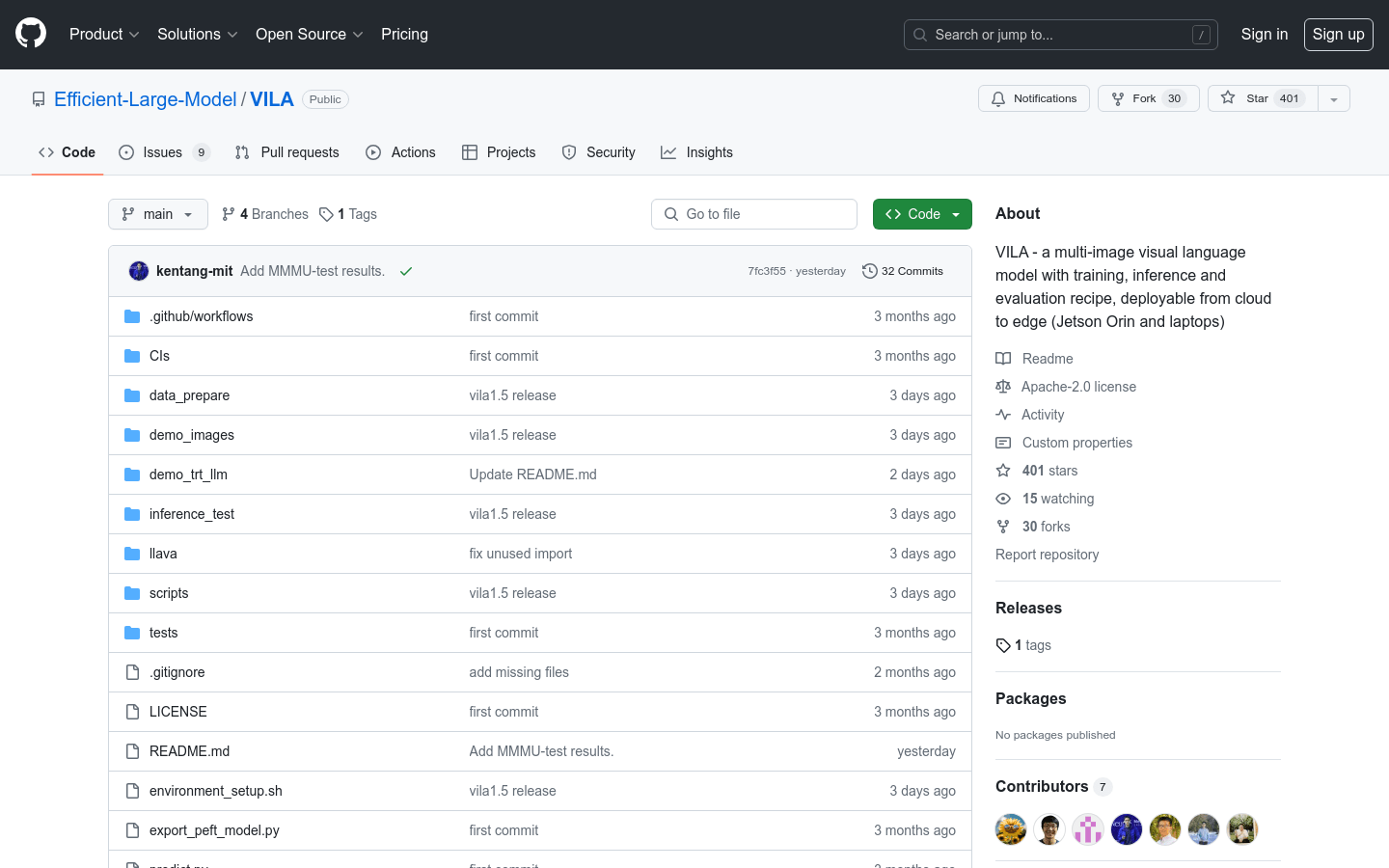

Step 1: Visit the VILA GitHub repository page to obtain the project code.

Step 2: Install the necessary environment and dependencies according to the guidelines in the repository.

Step 3: Download and configure a pre-trained VILA model checkpoint.

Step 4: If needed, use the provided training scripts to fine-tune VILA for a specific application scenario.

Step 5: Run the inference script on new images or videos to obtain model outputs.

Step 6: Integrate the model output into the final product or service according to application requirements.
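
For video input (Step 5), a clip is usually reduced to a small, fixed number of frames before being passed to the model. A minimal sketch of uniform frame sampling follows, assuming evenly spaced frames taken from the middle of equal-length segments; `sample_frame_indices` and the choice of 8 frames are hypothetical, and the repository's own inference script may select frames differently.

```python
def sample_frame_indices(total_frames, num_samples):
    """Return `num_samples` evenly spaced frame indices from a clip of `total_frames` frames."""
    if total_frames <= 0 or num_samples <= 0:
        return []
    if num_samples >= total_frames:
        return list(range(total_frames))  # short clip: keep every frame
    segment = total_frames / num_samples
    # take the frame at the centre of each equal-length segment
    return [int(segment * k + segment / 2) for k in range(num_samples)]


print(sample_frame_indices(300, 8))  # [18, 56, 93, 131, 168, 206, 243, 281]
```

The returned indices can then be handed to whatever video decoder the pipeline uses, so only the selected frames need to be kept in memory.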
