Ego-Exo4D

Overview:

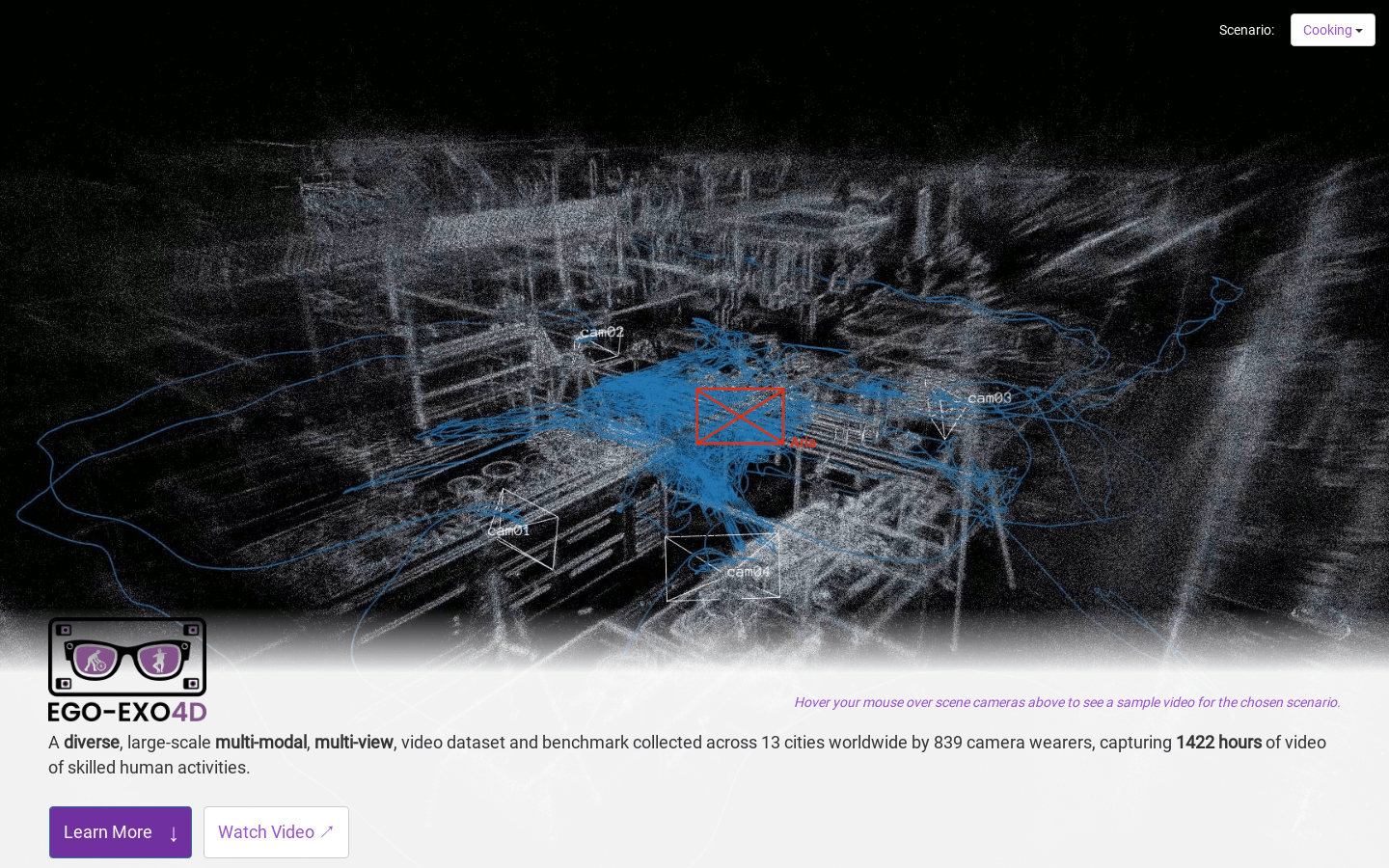

Ego-Exo4D is a multimodal, multi-view video dataset and benchmark challenge that captures skilled human activities from both first-person (egocentric) and external (exocentric) perspectives. It supports multimodal machine perception research on daily activities. The dataset was collected by 839 camera-wearing volunteers in 13 cities worldwide and contains 1,422 hours of video of skilled human activity. It provides three types of time-aligned natural language descriptions paired with the video: expert annotations, tutorial-style narrations provided by participants, and one-sentence atomic action descriptions. Ego-Exo4D also captures multiple views and sensor modalities, including multiple cameras, a seven-microphone array, two IMUs, a barometer, and a magnetometer. The dataset was recorded in strict adherence to privacy and ethics policies, with informed consent from all participants. For more information, please visit the official website.

Target Users:

Researchers in multimodal machine perception working on video analysis and the understanding of daily activities.

Features

Multimodal, multi-view video dataset

Synchronized first-person and external viewpoints

Multi-sensory modalities including microphones, IMUs, barometers, etc.

Three types of paired natural language descriptions

Supports research on multimodal machine perception of daily activities
