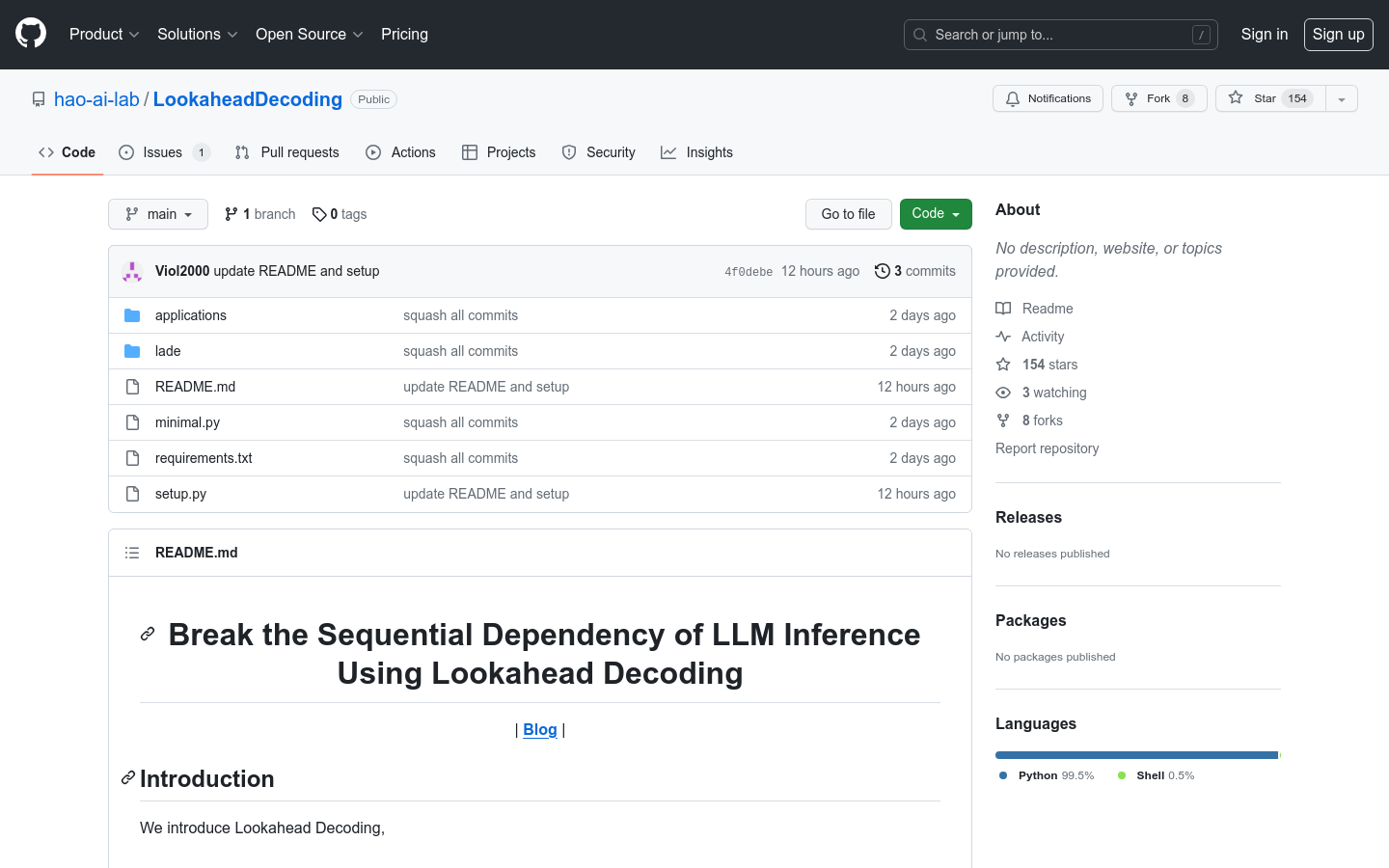

Lookahead Decoding

Overview:

Lookahead Decoding is an inference method that breaks the sequential dependency of autoregressive LLM decoding, so that more than one token can be accepted per decoding step. It is distributed as a library that users import into their own inference code to speed up generation. Currently, Lookahead Decoding supports only LLaMA models and greedy search decoding.

Target Users:

Developers who run LLM inference in their own code and want to speed it up by importing the Lookahead Decoding library.

Use Cases

1. Import Lookahead Decoding into existing inference code to speed up generation.

2. Run minimal.py to observe the speedup that Lookahead Decoding provides.

3. Chat with a chatbot that generates its replies using Lookahead Decoding.
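The first use case can be sketched as a small helper. This is a hedged sketch, not the library's documented entry point: it assumes the package imports as `lade`, that it exposes `augment_all` and `config_lade` with the keyword names shown, and that it reads a `USE_LADE` environment variable as an opt-in switch. The helper name `enable_lookahead` and the default values are illustrative only; check all of these against the version you install.

```python
import importlib
import os


def enable_lookahead(level: int = 5, window_size: int = 7, guess_set_size: int = 7) -> bool:
    """Try to switch on Lookahead Decoding; return False if the library is unavailable."""
    # Assumption: the library is opted into through this environment variable.
    os.environ.setdefault("USE_LADE", "1")
    try:
        # Assumption: the package is importable under the name `lade`.
        lade = importlib.import_module("lade")
    except ImportError:
        return False
    # Assumption: these two calls patch the supported models and set the
    # lookahead parameters; the names come from recollection, not from this page.
    lade.augment_all()
    lade.config_lade(
        LEVEL=level,
        WINDOW_SIZE=window_size,
        GUESS_SET_SIZE=guess_set_size,
        DEBUG=0,
    )
    return True


if __name__ == "__main__":
    print("Lookahead Decoding enabled:", enable_lookahead())
```

Calling the helper once, before loading a LLaMA model and generating with greedy search, keeps the rest of the inference code unchanged; if the import fails, the code simply falls back to ordinary decoding.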

Features

Breaks the sequential dependency of LLM inference

Improves inference efficiency

Supports LLaMA models with greedy search decoding
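To make "breaking the sequential dependency" concrete, here is a toy sketch of Jacobi-style fixed-point decoding, the general idea that parallel decoding methods of this kind build on. It is not the library's implementation. `toy_model` is a hypothetical stand-in for an LLM's greedy argmax, deliberately built so that a token depends on the token two positions back, and a "step" here stands for one batched forward pass.

```python
from typing import Callable, List, Tuple

Token = int
Model = Callable[[List[Token]], Token]


def toy_model(context: List[Token]) -> Token:
    """Hypothetical deterministic next-token rule (needs at least 2 context tokens)."""
    # The next token depends on the token two positions back, so some future
    # tokens can be resolved before their immediate predecessor is known.
    return (context[-2] + 1) % 10


def greedy_decode(model: Model, prompt: List[Token], n: int) -> Tuple[List[Token], int]:
    """Baseline greedy search: one model step per generated token."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(model(seq))
    return seq[len(prompt):], n


def jacobi_decode(model: Model, prompt: List[Token], n: int) -> Tuple[List[Token], int]:
    """Refine a guess for all n future tokens at once until it stops changing."""
    guess = [0] * n  # arbitrary initial guess
    steps = 0
    while True:
        steps += 1
        # Every position is recomputed from the previous guess. In a real LLM
        # these n evaluations would share a single batched forward pass.
        new = [model(prompt + guess[:i]) for i in range(n)]
        if new == guess:
            # Fixed point reached: identical to the greedy output.
            return guess, steps
        guess = new


if __name__ == "__main__":
    greedy_out, greedy_steps = greedy_decode(toy_model, [1, 2], 8)
    jacobi_out, jacobi_steps = jacobi_decode(toy_model, [1, 2], 8)
    print(greedy_out == jacobi_out, greedy_steps, jacobi_steps)
```

On this toy rule the refined guess converges to exactly the greedy output in fewer steps than token-by-token decoding. With a real model the gain depends on how often the guessed tokens turn out to be correct, which is why the output stays tied to greedy search.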
