Sapiens

Overview

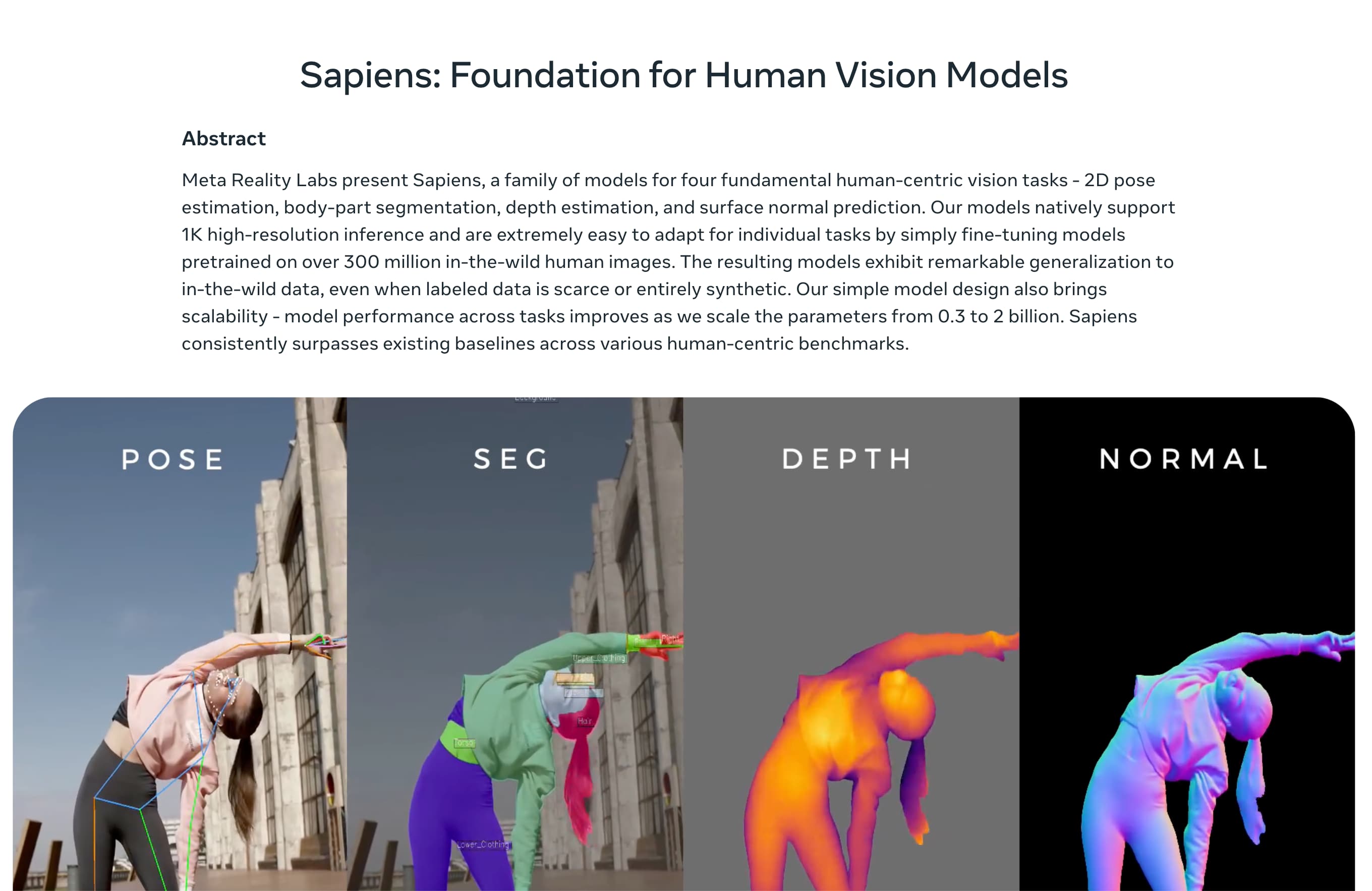

Sapiens is a vision model developed by Meta Reality Labs for human-centric vision tasks: 2D pose estimation, body part segmentation, depth estimation, and surface normal prediction. It has been trained on over 300 million human images, handles high-resolution input, and performs well even when data is scarce. Its straightforward design scales readily: performance improves as the parameter count increases, and the model surpasses existing baselines on multiple benchmarks.

Target Users

The Sapiens model is designed for professionals and enterprises that need high-precision analysis of human motion and body structure, including developers and researchers in video surveillance analysis, virtual reality content creation, medical rehabilitation monitoring, autonomous driving, and robotic navigation.

Use Cases

In video surveillance systems, the Sapiens model can be used for real-time analysis of crowd movements and behavior patterns.

In virtual reality applications, the Sapiens model enables precise capture and simulation of user movements.

In medical rehabilitation, the Sapiens model can track patients' movements to monitor recovery progress and help inform customized rehabilitation plans.

Features

2D Pose Estimation: Identifying and estimating human poses in two-dimensional images.

Body Part Segmentation: Precisely segmenting body parts, such as hands, feet, and heads, in images.

Depth Estimation: Predicting the depth information of objects in images to understand three-dimensional spatial layouts.

Surface Normal Prediction: Inferring the orientation of object surfaces to help understand shapes and materials.

High-Resolution Input Processing: Capable of processing high-resolution images to enhance output quality.

Masked Autoencoder Pretraining: Learning robust feature representations through partial image masking.
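To make the masking idea concrete, here is a minimal sketch of how a masked autoencoder might choose which image patches to hide. It is not the Sapiens training code. The function name `random_patch_mask`, the 8x8 patch grid, and the 0.75 mask ratio are illustrative assumptions that do not come from the source.

```python
import random


def random_patch_mask(grid_h, grid_w, mask_ratio, seed=None):
    """Split patch indices into (visible, masked) lists, MAE-style.

    Hypothetical helper for illustration: the encoder would see only the
    visible patches, and the decoder would be trained to reconstruct the
    masked ones.
    """
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")

    num_patches = grid_h * grid_w
    num_masked = int(num_patches * mask_ratio)

    # Shuffle all patch indices, then hide the first `num_masked` of them.
    rng = random.Random(seed)
    order = list(range(num_patches))
    rng.shuffle(order)

    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked


# Assumed values: an 8x8 grid of patches with 75% of them hidden.
visible, masked = random_patch_mask(grid_h=8, grid_w=8, mask_ratio=0.75, seed=0)
print(len(visible), len(masked))  # 16 48
```

Because the model must reconstruct the hidden patches from the visible ones, it is pushed to learn features that capture overall structure rather than local texture alone.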

How to Use

Step 1: Acquire the Sapiens model and familiarize yourself with its basic architecture and functions.

Step 2: Choose appropriate preprocessing and data augmentation methods based on application needs.

Step 3: Fine-tune the model to adapt to specific visual tasks.

Step 4: Use the model for real-world visual tasks, such as 2D pose estimation or body part segmentation.

Step 5: Analyze the model's output results and make further optimizations and adjustments as needed.

Step 6: Integrate the model into the final application or research project to implement automated image analysis.
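As a rough illustration of Steps 4 and 5, the sketch below decodes 2D keypoints from per-keypoint heatmaps and maps them back to image coordinates. It assumes the pose head emits one heatmap per keypoint, which is common in pose estimators but is not confirmed by this page. The helpers `decode_keypoints` and `scale_keypoint`, the 0.3 confidence threshold, and the toy heatmaps are all hypothetical and not part of any Sapiens API.

```python
def decode_keypoints(heatmaps, threshold=0.3):
    """Pick the highest-scoring cell of each heatmap.

    heatmaps: list of 2D lists (rows of floats), one heatmap per keypoint.
    Returns one (x, y, score) tuple per keypoint, or None when the peak
    score falls below `threshold` (an assumed confidence cutoff).
    """
    keypoints = []
    for heatmap in heatmaps:
        best_score, best_x, best_y = float("-inf"), -1, -1
        for y, row in enumerate(heatmap):
            for x, value in enumerate(row):
                if value > best_score:
                    best_score, best_x, best_y = value, x, y
        if best_score >= threshold:
            keypoints.append((best_x, best_y, best_score))
        else:
            keypoints.append(None)
    return keypoints


def scale_keypoint(keypoint, heatmap_size, image_size):
    """Map a heatmap cell to image pixels, using the center of the cell.

    heatmap_size and image_size are (width, height) pairs.
    """
    x, y, score = keypoint
    heatmap_w, heatmap_h = heatmap_size
    image_w, image_h = image_size
    return ((x + 0.5) * image_w / heatmap_w,
            (y + 0.5) * image_h / heatmap_h,
            score)


# Toy 3x3 heatmaps: the first has a clear peak, the second does not.
heatmaps = [
    [[0.0, 0.1, 0.0], [0.2, 0.9, 0.1], [0.0, 0.1, 0.0]],
    [[0.05, 0.1, 0.0], [0.0, 0.1, 0.2], [0.0, 0.0, 0.1]],
]
decoded = decode_keypoints(heatmaps)
print(decoded)  # [(1, 1, 0.9), None]
print(scale_keypoint(decoded[0], heatmap_size=(3, 3), image_size=(300, 600)))
# (150.0, 300.0, 0.9)
```

Dropping low-confidence keypoints, as in the second heatmap, is one simple adjustment to consider when analyzing outputs before integrating the model into an application.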
