Responses API

Overview

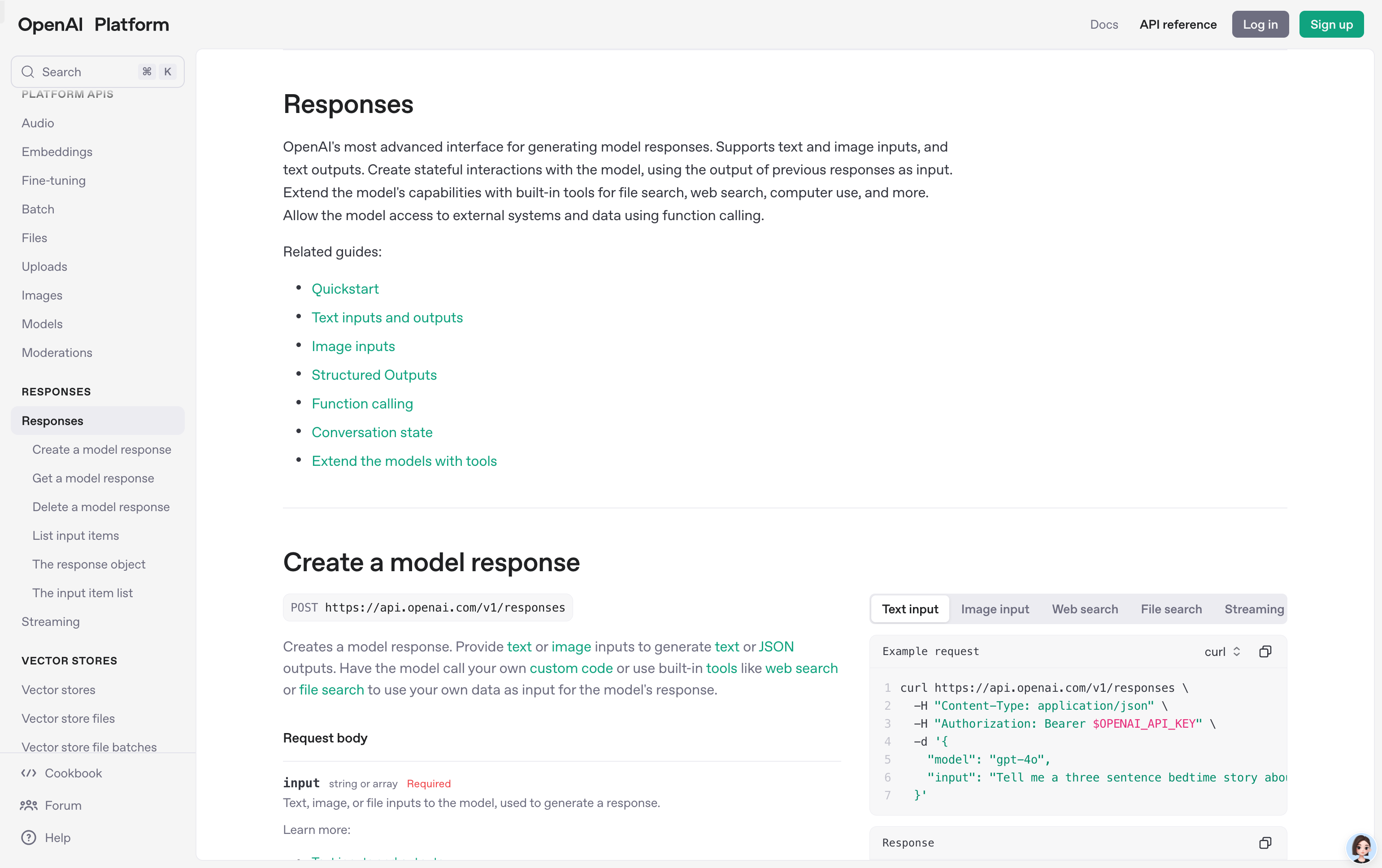

The Responses feature of the OpenAI API lets developers create, retrieve, update, and delete model responses. Storing responses and retrieving them later gives developers more control over generated content and can streamline development. The feature works with multiple models and suits applications that need customized output, such as chatbots, content generation, and data analysis. OpenAI offers pricing plans for users ranging from individuals to large enterprises.

Target Users

The Responses API suits developers who need customized model output, such as teams building chatbots, content generation tools, or data analysis platforms. It gives them closer control over model behavior and lets them add functionality quickly through a flexible API.

Use Cases

Create a chatbot response to automatically answer user questions.

Generate the beginning of an article or story for a content creation platform.

Analyze user input text, extract key information, and generate a summary.

Features

Create model responses: Generate a model response from an input prompt.

Get response details: Retrieve a stored response by its response ID.

Update response content: Modify the metadata or other information of a stored response.

Delete responses: Delete unnecessary responses from the system.

Multi-model support: Works with the language models and other model types provided by OpenAI.

Response storage and retrieval: Store responses for later use, with pagination and filtering when querying them.

Streaming responses: Receive response content incrementally as it is generated.
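
To make the streaming feature concrete, here is a minimal sketch of collecting streamed text on the client side. It assumes the stream arrives as server-sent events whose `data:` lines carry JSON, and that text fragments use the event type `response.output_text.delta` with a `delta` field. Those names reflect our reading of OpenAI's published reference and are not stated on this page, so verify them before relying on this. The function name `collect_streamed_text` is hypothetical.

```python
import json
from typing import Iterable


def collect_streamed_text(lines: Iterable[str]) -> str:
    """Join the text fragments found in a sequence of server-sent event lines.

    Assumption: text arrives in events of type "response.output_text.delta",
    each carrying its fragment in a "delta" field.
    """
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and "event:" lines
        payload = line[len("data:"):].strip()
        if not payload or payload == "[DONE]":
            continue  # tolerate empty payloads and a terminator some streams send
        event = json.loads(payload)
        if event.get("type") == "response.output_text.delta":
            parts.append(event.get("delta", ""))
    return "".join(parts)
```

The function takes any iterable of decoded lines, so it can be fed from an open HTTP response or from a saved transcript of a stream.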

How to Use

1. Register and log in to the OpenAI platform to obtain an API key.

2. Locate the Responses endpoints in the OpenAI API documentation.

3. Send an HTTP request to the response creation endpoint, passing the required parameters (such as the model ID and prompt).

4. Retrieve the response details by response ID to view the generated content.

5. To modify a stored response, call the update endpoint and change the relevant fields.

6. Use pagination and filtering functions to manage a large number of responses.

7. In practical applications, integrate the responses into chatbots or content generation tools.
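
Steps 3 and 4 above, plus deletion, can be sketched with Python's standard library. This is an illustration under stated assumptions, not an official client: the base URL `https://api.openai.com/v1`, the `/responses` paths, and the `input` request field (which carries the prompt) follow our understanding of OpenAI's published reference, and the helper names are hypothetical. The update call is left out because its path and fields are not given here.

```python
import json
import urllib.request

API_BASE = "https://api.openai.com/v1"  # assumed base URL of the OpenAI REST API


def _headers(api_key: str) -> dict:
    """Headers shared by every request: bearer auth plus a JSON content type."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


def build_create_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Step 3: POST the model ID and prompt to the creation endpoint."""
    # Assumption: the prompt is sent in a field named "input".
    body = json.dumps({"model": model, "input": prompt}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/responses", data=body, headers=_headers(api_key), method="POST"
    )


def build_retrieve_request(api_key: str, response_id: str) -> urllib.request.Request:
    """Step 4: GET a stored response by its ID."""
    return urllib.request.Request(
        f"{API_BASE}/responses/{response_id}", headers=_headers(api_key), method="GET"
    )


def build_delete_request(api_key: str, response_id: str) -> urllib.request.Request:
    """Delete a stored response that is no longer needed."""
    return urllib.request.Request(
        f"{API_BASE}/responses/{response_id}", headers=_headers(api_key), method="DELETE"
    )


def send(request: urllib.request.Request) -> dict:
    """Execute a prepared request and decode the JSON reply.

    Requires network access and a valid API key.
    """
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read().decode("utf-8"))
```

A typical flow would be `created = send(build_create_request(key, "gpt-4o", "Say hello"))` followed by `send(build_retrieve_request(key, created["id"]))`, where `key` holds your API key and the model name is only a placeholder. Building requests separately from sending them keeps the request shape easy to inspect without network access.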
