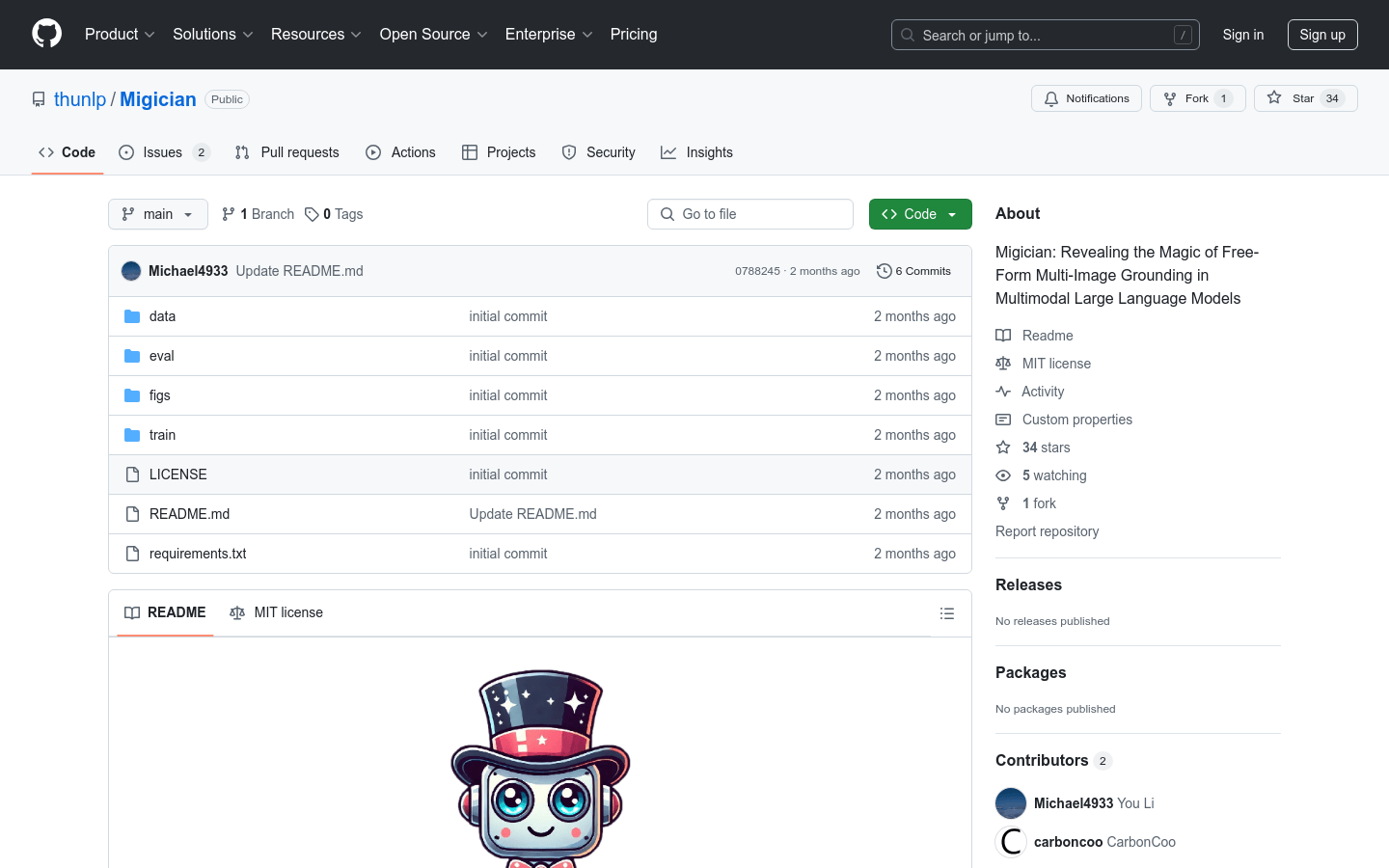

Migician

Overview

Migician is a multi-modal large language model developed by the Natural Language Processing Laboratory of Tsinghua University and focused on multi-image localization. Using a new training framework and the large-scale MGrounding-630k dataset, it significantly improves localization accuracy in multi-image scenarios. It outperforms existing multi-modal large language models, including much larger 70B models. Its main strengths are handling complex multi-image tasks and following free-form localization instructions, which make it a promising tool for multi-image understanding. The model is currently open-source on Hugging Face for researchers and developers to use.

Target Users

Migician is suited to researchers and developers working in multi-modal research, computer vision, and natural language processing, especially teams that need to handle multi-image localization tasks. It gives researchers a tool for exploring how vision and language interact in multi-image scenarios, and gives developers a scalable foundation for building applications based on multi-image localization.

Use Cases

In multi-image scenarios, users can give natural language instructions that direct the model to locate specific objects or regions, for example finding the characters that appear together across a set of images.

Researchers can use Migician's model and dataset to conduct research on multi-image localization tasks, exploring new algorithms and application scenarios.

Developers can integrate Migician into their applications to offer features based on multi-image localization, such as image annotation and target tracking.

Features

Free-form multi-image localization: Lets users precisely localize targets across multiple images using natural language instructions.

Multi-task support: Covers a variety of multi-image tasks, including common object localization, image difference localization, and free-form localization.

Large-scale dataset support: Provides the MGrounding-630k dataset, containing 630,000 samples for multi-image localization tasks.

High performance: On the MIG-Bench benchmark, it performs significantly better than existing multi-modal large language models.

Flexible inference capabilities: Supports several inference methods, including direct inference and chain inference that builds on single-image localization.
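The chain inference mode can be pictured as a two-stage pipeline. This is a minimal sketch that assumes chain inference works by first using multi-image understanding to turn the instruction into a textual description of the target, and then running single-image localization on each image separately. `chain_inference`, `describe`, and `ground_single` are hypothetical names; the model calls are passed in as callables rather than tied to any real Migician API.

```python
from typing import Callable, Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2)


def chain_inference(
    images: List[str],
    instruction: str,
    describe: Callable[[List[str], str], str],
    ground_single: Callable[[str, str], Optional[Box]],
) -> Dict[int, Box]:
    """Hypothetical two-stage inference: reason over all images, then ground per image.

    `describe` and `ground_single` stand in for model calls; they are
    assumptions, not functions from a published Migician API.
    """
    # Stage 1: convert the free-form, multi-image instruction into a plain
    # description of the target (e.g. "the man in the red hat").
    description = describe(images, instruction)
    # Stage 2: run ordinary single-image localization on each image.
    results: Dict[int, Box] = {}
    for idx, image in enumerate(images):
        box = ground_single(image, description)
        if box is not None:  # the target may be absent from some images
            results[idx] = box
    return results


if __name__ == "__main__":
    # Toy stubs in place of real model calls.
    def fake_describe(imgs: List[str], text: str) -> str:
        return "the red car"

    def fake_ground(img: str, desc: str) -> Optional[Box]:
        return (10, 20, 110, 220) if img == "b.jpg" else None

    print(chain_inference(["a.jpg", "b.jpg"], "Find the object shared by both images.",
                          fake_describe, fake_ground))
    # prints {1: (10, 20, 110, 220)}
```

Direct inference would instead ask the model for boxes in a single pass; the chained form trades an extra model call for reusing plain single-image localization.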

How to Use

1. Create a Python environment and install dependencies: Create an environment with `conda create -n migician python=3.10`, activate it with `conda activate migician`, then run `pip install -r requirements.txt` to install the dependencies.

2. Download the dataset: Download the MGrounding-630k dataset from Hugging Face and unzip it to the specified directory.

3. Load the model: Load the pre-trained Migician model using the `transformers` library.

4. Prepare input data: Format the multi-image data and natural language instructions into the input format required by the model.

5. Run inference: Call the model's `generate` method to obtain the localization results.

6. Evaluate performance: Evaluate model performance using the MIG-Bench benchmark to obtain metrics such as IoU.
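Steps 4 to 6 do not pin down the input format, the output format, or the metric. The sketch below fills those gaps under stated assumptions: it assumes Migician accepts a Qwen2-VL-style chat message (image entries followed by a text entry) and emits boxes as `(x1,y1),(x2,y2)` text on a 0 to 1000 grid. Neither format is confirmed here, so check the model card before relying on them. `build_messages`, `parse_boxes`, and `accuracy_at` are hypothetical helper names, and the 0.5 IoU threshold is a common grounding convention, not MIG-Bench's documented setting.

```python
import re
from typing import Any, Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def build_messages(image_paths: List[str], instruction: str) -> List[Dict[str, Any]]:
    """Step 4 (hypothetical helper): pack several images and one instruction
    into a single user turn, assuming a Qwen2-VL-style chat schema."""
    content: List[Dict[str, Any]] = [{"type": "image", "image": p} for p in image_paths]
    content.append({"type": "text", "text": instruction})
    return [{"role": "user", "content": content}]


# Matches "(x1,y1),(x2,y2)" with optional whitespace, e.g. "(120, 45),(380,290)".
_BOX_RE = re.compile(r"\((\d+)\s*,\s*(\d+)\)\s*,\s*\((\d+)\s*,\s*(\d+)\)")


def parse_boxes(text: str, width: int, height: int, scale: int = 1000) -> List[Box]:
    """Step 5 (hypothetical helper): pull every box out of the generated text
    and convert it to pixels, assuming integer coordinates in [0, scale]."""
    return [
        (int(x1) * width / scale, int(y1) * height / scale,
         int(x2) * width / scale, int(y2) * height / scale)
        for x1, y1, x2, y2 in _BOX_RE.findall(text)
    ]


def iou(a: Box, b: Box) -> float:
    """Step 6: intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def accuracy_at(preds: Sequence[Box], gts: Sequence[Box], threshold: float = 0.5) -> float:
    """Fraction of predictions whose IoU with the matching ground truth
    reaches the threshold (0.5 is an assumed, conventional choice)."""
    if len(preds) != len(gts):
        raise ValueError("preds and gts must have the same length")
    if not gts:
        return 0.0
    return sum(iou(p, g) >= threshold for p, g in zip(preds, gts)) / len(gts)
```

Multiplying before dividing keeps the coordinate conversion exact whenever the pixel value is a whole number; for example, `parse_boxes("(100,200),(500,600)", 1000, 500)` returns `[(100.0, 100.0, 500.0, 300.0)]`.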
