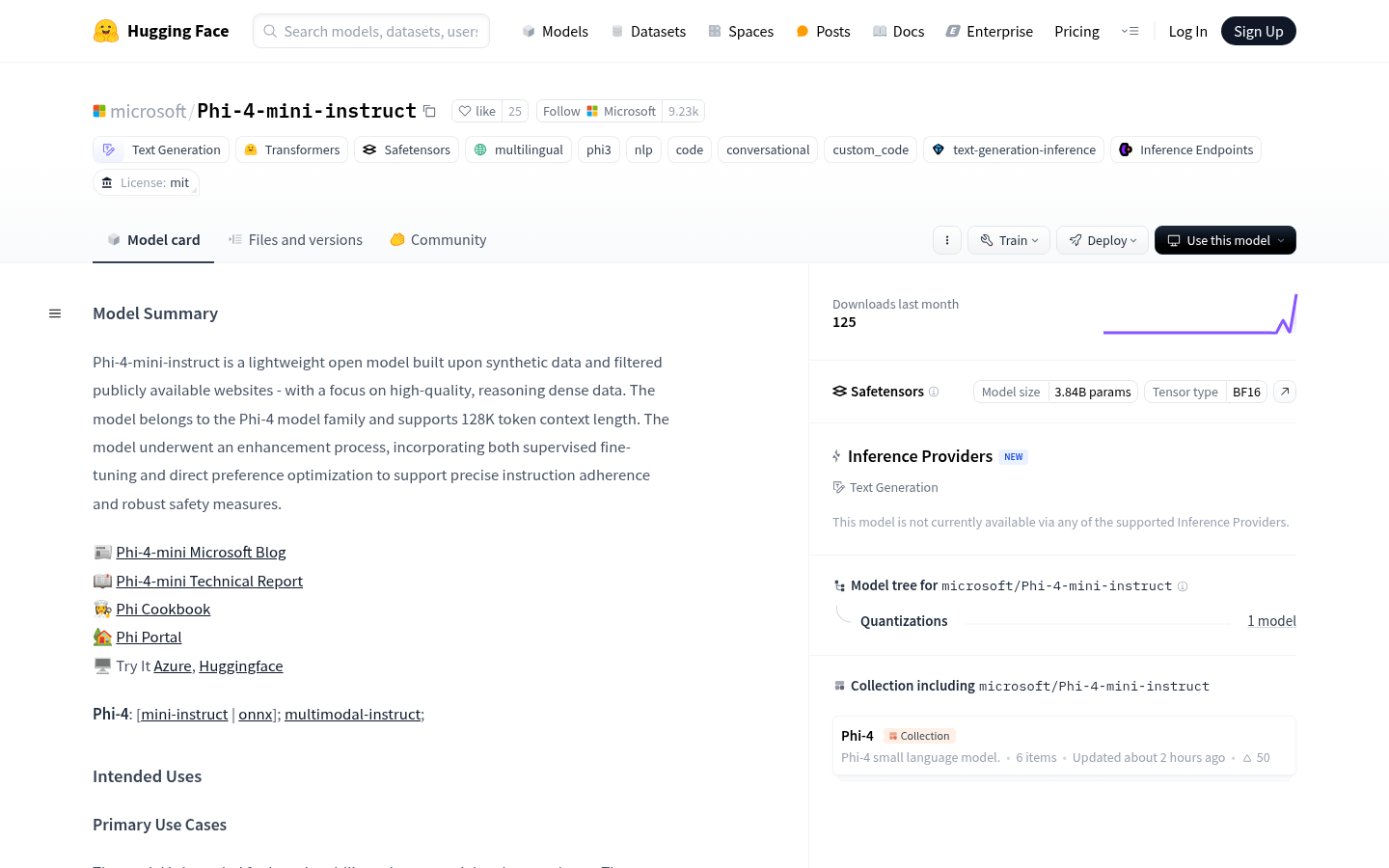

Phi 4 Mini Instruct

Overview

Phi-4-mini-instruct is a lightweight, open-source language model from Microsoft and part of the Phi-4 model family. It was trained on synthetic data and curated data from publicly available websites, with an emphasis on high-quality, reasoning-dense data. The model supports a context length of 128K tokens, and its instruction following and safety were improved through supervised fine-tuning and direct preference optimization. Phi-4-mini-instruct is strongest in multilingual use, reasoning (especially mathematical and logical reasoning), and low-latency scenarios, which makes it suitable for resource-constrained environments. Released in February 2025, it supports multiple languages, including English, Chinese, and Japanese.
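The 128K-token window is shared between the prompt and the generated reply, so long inputs reduce how much the model can write back. The following is a minimal sketch of that budgeting arithmetic, assuming "128K" means 131,072 tokens (128 × 1024) and that the prompt has already been counted with the model's own tokenizer. The helper names are hypothetical, not part of any library.

```python
# Assumption: "128K tokens" is taken to mean 128 * 1024 = 131,072 positions,
# shared by the prompt and the generated continuation.
CONTEXT_WINDOW = 128 * 1024


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_window: int = CONTEXT_WINDOW) -> bool:
    """Return True if the prompt plus the requested generation fit in the window."""
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_new_tokens <= context_window


def max_generation_budget(prompt_tokens: int,
                          context_window: int = CONTEXT_WINDOW) -> int:
    """Largest max_new_tokens value that still fits after the prompt (0 if none)."""
    return max(0, context_window - prompt_tokens)


print(fits_in_context(120_000, 4_096))   # True: 124,096 <= 131,072
print(max_generation_budget(130_000))    # 1072
```

In practice the prompt count would come from tokenizing the fully formatted prompt, including any special tokens added by the chat template.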

Target Users

The model is aimed at developers and researchers who need efficient inference, multilingual support, and low resource consumption. It is particularly suited to resource-constrained deployments such as mobile devices and edge computing. It also fits applications that need fast responses and strong reasoning, including intelligent customer service, educational tools, and programming assistance.

Use Cases

In intelligent customer service, Phi-4-mini-instruct can interpret user questions and respond quickly, including in multiple languages.

As a programming assistant, the model can generate code snippets and reason through problems step by step, helping developers solve them faster.

In education, Phi-4-mini-instruct can generate worked solutions to math problems and logical reasoning exercises to support student learning.

Features

Supports multilingual conversation and instruction following, accepting input in many languages.

Offers strong reasoning capabilities, particularly in mathematical and logical reasoning.

Provides long-context support, handling inputs of up to 128K tokens.

Supports tool use (function calling): given a set of tool definitions, it generates function calls that match the user's request.

Has undergone safety evaluations and red teaming to reduce harmful outputs, although applications should still add their own safeguards.
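For the tool-calling feature, the available tools are declared in the prompt. The sketch below assumes the raw format described on the Hugging Face model card: tools serialized as a JSON list between <|tool|> and <|/tool|> inside the system turn. The reply parser is a further assumption: it expects a JSON list of objects with "name" and "arguments" keys and drops anything else, so check it against real model outputs. The tool get_weather_updates is hypothetical and used only for illustration.

```python
import json


def build_tool_prompt(system_message, tools, user_message):
    """Assemble a raw prompt that declares tools inside the system turn.

    Assumption: tools go as a JSON list between <|tool|> and <|/tool|>,
    and the prompt ends with an open <|assistant|> tag.
    """
    tool_json = json.dumps(tools, ensure_ascii=False)
    return (
        f"<|system|>{system_message}<|tool|>{tool_json}<|/tool|><|end|>"
        f"<|user|>{user_message}<|end|>"
        "<|assistant|>"
    )


def parse_tool_calls(model_output, tools):
    """Parse a reply as a JSON list of {"name", "arguments"} calls.

    Assumption: the reply is such a JSON list (or a single object).
    Non-JSON replies return [], and calls to undeclared tools are dropped.
    """
    known = {tool["name"] for tool in tools}
    try:
        calls = json.loads(model_output)
    except json.JSONDecodeError:
        return []
    if isinstance(calls, dict):
        calls = [calls]
    if not isinstance(calls, list):
        return []
    return [c for c in calls if isinstance(c, dict) and c.get("name") in known]


tools = [{
    "name": "get_weather_updates",  # hypothetical tool for illustration
    "description": "Fetches weather updates for a given city.",
    "parameters": {"city": {"description": "City name.", "type": "str"}},
}]
prompt = build_tool_prompt("You are a helpful assistant with some tools.", tools,
                           "What is the weather like in Paris today?")
print(prompt)
print(parse_tool_calls(
    '[{"name": "get_weather_updates", "arguments": {"city": "Paris"}}]', tools))
```

Validating tool names against the declared list keeps the application from executing a function the model invented.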

How to Use

1. Download the Phi-4-mini-instruct model files from the Hugging Face website.

2. Load the model using a supported deep learning framework (e.g., PyTorch) and configure the inference environment.

3. Choose the input format that fits your task, such as the chat format or the tool-calling (function-calling) format.

4. Provide a system message to define the model's behavior and context.

5. Send the user's question or instruction; the model generates a response or, in the tool-calling format, function calls.

6. Post-process the model output to ensure the results meet the application's requirements.

7. In practical applications, incorporate a safety assessment mechanism to filter potentially harmful content.
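Steps 3 through 7 can be illustrated without loading the model at all. The sketch below renders messages in the raw chat format shown on the model card (assumed here to be <|role|>content<|end|> per turn, followed by an open <|assistant|> tag) and post-processes a reply. The blocked_terms check is a hypothetical placeholder for step 7, not a substitute for a real moderation model or service. When running the model through Hugging Face Transformers, tokenizer.apply_chat_template is the safer choice because it uses the template shipped with the model.

```python
def build_chat_prompt(messages):
    """Render {"role", "content"} dicts in the assumed Phi-4-mini chat format."""
    allowed_roles = {"system", "user", "assistant"}
    parts = []
    for message in messages:
        role = message["role"]
        if role not in allowed_roles:
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<|{role}|>{message['content']}<|end|>")
    # An open assistant tag tells the model to continue as the assistant.
    parts.append("<|assistant|>")
    return "".join(parts)


def postprocess(raw_output, blocked_terms=(), max_chars=2000):
    """Cut at the first end-of-turn marker, trim, cap length, apply a toy filter."""
    reply = raw_output.split("<|end|>", 1)[0].strip()[:max_chars]
    # Hypothetical placeholder safety check: withhold replies containing blocked terms.
    lowered = reply.lower()
    if any(term.lower() in lowered for term in blocked_terms):
        return None
    return reply


messages = [
    {"role": "system", "content": "You are a concise math tutor."},  # step 4
    {"role": "user", "content": "What is 12 * 7?"},                   # step 5
]
prompt = build_chat_prompt(messages)
print(prompt)
print(postprocess("  12 * 7 = 84.<|end|>"))  # 12 * 7 = 84.
```

Returning None for a withheld reply leaves it to the caller to decide whether to retry, show a fallback message, or escalate.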
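Steps 1, 2, and 5 can be sketched with Hugging Face Transformers and PyTorch. This assumes the Hub model ID microsoft/Phi-4-mini-instruct and a recent transformers release that supports the model natively (the model card lists the exact versions it was tested with). It also assumes accelerate is installed for device_map="auto". The helper names load_model and generate_reply are hypothetical. Imports are kept inside the loader so the module can be imported without the heavy dependencies installed.

```python
from functools import lru_cache

MODEL_ID = "microsoft/Phi-4-mini-instruct"


@lru_cache(maxsize=1)
def load_model(model_id=MODEL_ID):
    """Download (on first use) and load the tokenizer and model once, then reuse them."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype="auto", device_map="auto"
    )
    return tokenizer, model


def generate_reply(messages, max_new_tokens=256):
    """Format chat messages with the model's own template and decode the reply."""
    tokenizer, model = load_model()
    # apply_chat_template renders the messages and appends the assistant prompt.
    inputs = tokenizer.apply_chat_template(
        messages,
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    # Decode only the newly generated tokens, not the echoed prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)


# Example (downloads several GB of weights on first run):
# print(generate_reply([
#     {"role": "system", "content": "You are a helpful assistant."},
#     {"role": "user", "content": "Solve 2x + 3 = 7."},
# ]))
```

Greedy decoding (do_sample=False) keeps answers reproducible, which suits math and tool-calling use; sampling parameters can be added for more varied output.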
