Wan2.1

Overview

Wan2.1 is an open-source, large-scale video generation model. It combines a spatio-temporal variational autoencoder (VAE), scalable training strategies, large-scale data construction, and automated evaluation metrics to improve both generation quality and versatility. Wan2.1 supports multiple tasks, including text-to-video, image-to-video, and video editing, and produces high-quality video. It has performed strongly on several benchmarks, in some cases surpassing closed-source models. Because it is open source, researchers and developers can freely use and extend it across a wide range of applications.

Target Users

Wan2.1 suits developers, researchers, and content creators who need high-quality video generation, especially where video content must be produced quickly, such as advertising, visual effects, and educational videos. Its open-source release also makes it a good fit for academic research and technical innovation.

Use Cases

Generate a video of two anthropomorphic cats boxing on a stage using a text description.

Generate a dynamic beach video, including waves, sunshine, and sand, from a static beach photograph.

Upscale a low-resolution video to a higher resolution while optimizing image quality.

Features

Supports text-to-video generation, creating high-quality videos based on text descriptions.

Supports image-to-video generation, enabling the creation of dynamic videos from static images.

Supports video editing functionalities for modifying and optimizing existing videos.

Supports visual text generation: it can render both Chinese and English text inside generated videos.

Provides Wan-VAE, an efficient video VAE that can encode and decode 1080P videos of arbitrary length while preserving temporal information.
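To give a sense of why a video VAE matters for 1080P output, here is a back-of-the-envelope latent-size calculation. It is a sketch that assumes the layout described in the Wan technical report rather than anything stated on this page: 4× temporal and 8×8 spatial compression, 16 latent channels, and a causal design in which the first frame is compressed only spatially, so clip lengths take the form 4n+1. The `latent_shape` function is hypothetical and exists only for illustration.

```shell
# Back-of-the-envelope latent size for a causal video VAE.
# ASSUMPTION: Wan-VAE-style layout with 4x temporal and 8x8 spatial
# compression, 16 latent channels, and the first frame compressed only
# spatially, so valid frame counts have the form 4n+1.

# Usage: latent_shape FRAMES HEIGHT WIDTH
# Prints "CHANNELS LATENT_FRAMES LATENT_HEIGHT LATENT_WIDTH".
latent_shape() {
    frames=$1 height=$2 width=$3

    # The causal design keeps frame 0 alone, then groups 4 frames per latent step.
    if [ $(( frames % 4 )) -ne 1 ]; then
        echo "frame count must be 4n+1, got $frames" >&2
        return 1
    fi

    # Spatial dimensions must divide evenly by the 8x8 downsampling.
    if [ $(( height % 8 )) -ne 0 ] || [ $(( width % 8 )) -ne 0 ]; then
        echo "height and width must be multiples of 8" >&2
        return 1
    fi

    echo "16 $(( (frames - 1) / 4 + 1 )) $(( height / 8 )) $(( width / 8 ))"
}
```

Under these assumptions, an 81-frame 1080P clip (`latent_shape 81 1080 1920`) becomes a 16×21×135×240 latent, roughly 46 times fewer values than the 3×81×1080×1920 RGB input, which is the kind of reduction that makes generating in latent space tractable.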

How to Use

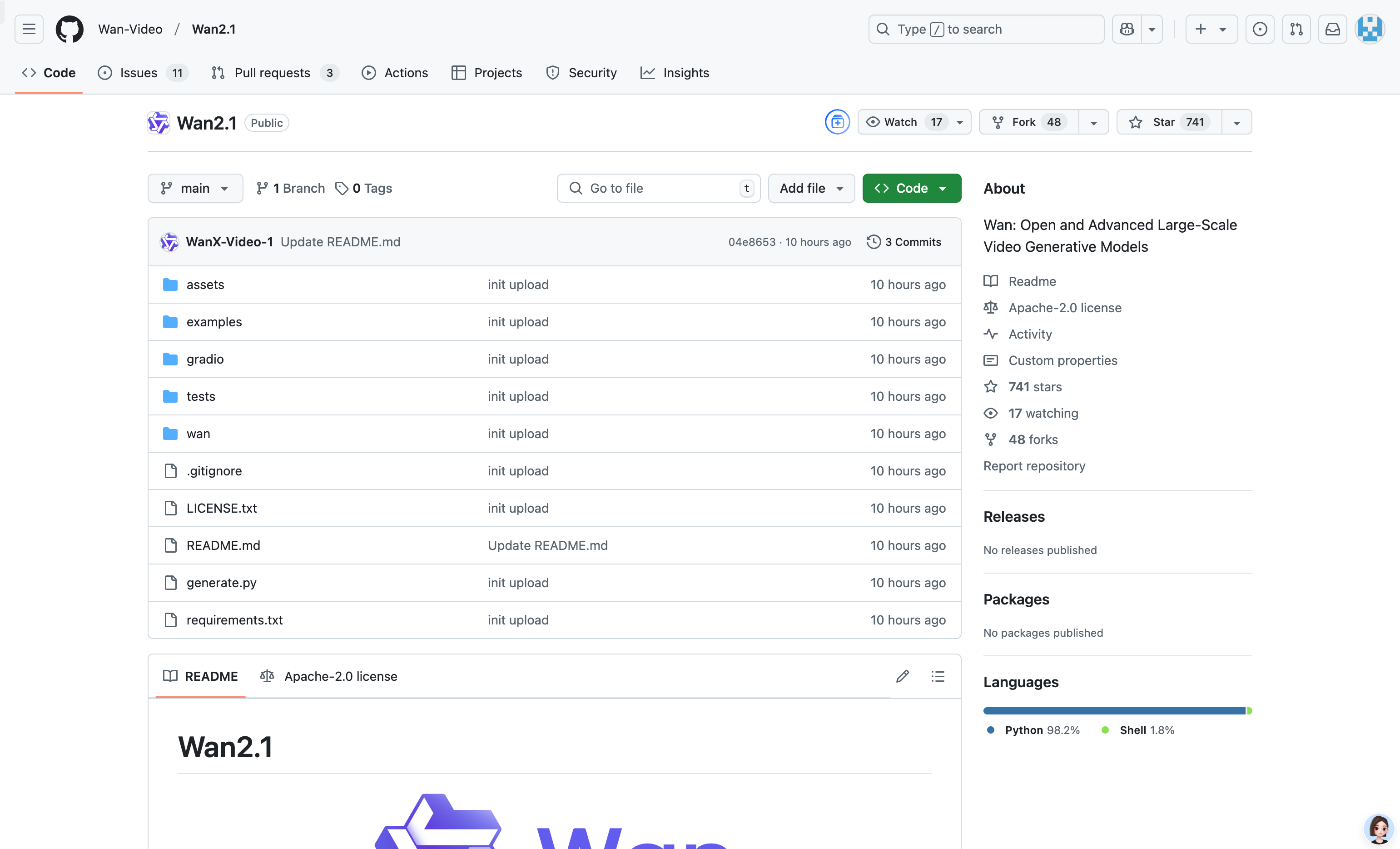

1. Clone the repository and enter it: `git clone https://github.com/Wan-Video/Wan2.1.git`, then `cd Wan2.1`.

2. Install dependencies: `pip install -r requirements.txt` (the project README asks for PyTorch 2.4.0 or later).

3. Download model weights: fetch the checkpoint you need from Hugging Face or ModelScope.

4. Run the generation script: call `generate.py` with the task type (`--task`), the checkpoint directory (`--ckpt_dir`), and input parameters such as `--size` and `--prompt`.

5. View the generated results: the output video (or image, depending on the task) is saved to the specified path.
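The steps above can be sketched as a small POSIX shell file to source. The commands follow the project README at the time of writing; the Hugging Face repository ID pattern `Wan-AI/<model>`, the checkpoint names, and the `generate.py` flags (`--task`, `--size`, `--ckpt_dir`, `--prompt`, `--image`) should be treated as assumptions and checked against the current README. The helper names (`run`, `wan_setup`, `wan_download`, `wan_generate`) and the `DRY_RUN` switch are hypothetical conveniences: by default each helper only prints its commands, so nothing is cloned, installed, or downloaded until `DRY_RUN=0` is set.

```shell
# Hypothetical helpers wrapping the Wan2.1 quick-start steps. Source this file.
# Commands are ASSUMED from the project README; verify flags and model IDs there.

# Print commands by default; set DRY_RUN=0 to actually execute them.
DRY_RUN="${DRY_RUN:-1}"

# Print a command (dry run) or execute it in the current shell.
run() {
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    else
        printf '%s\n' "$*"
    fi
}

# Steps 1-2: clone the repository, enter it, and install dependencies.
wan_setup() {
    run git clone https://github.com/Wan-Video/Wan2.1.git
    run cd Wan2.1
    run pip install -r requirements.txt
}

# Step 3: download weights from Hugging Face into ./<model>.
# $1: checkpoint name, e.g. Wan2.1-T2V-14B (assumed repo ID Wan-AI/<name>).
wan_download() {
    run pip install "huggingface_hub[cli]"
    run huggingface-cli download "Wan-AI/$1" --local-dir "./$1"
}

# Step 4: run generate.py from inside the repository.
# $1: task (e.g. t2v-14B, i2v-14B), $2: size as W*H, $3: checkpoint dir,
# $4: prompt, $5 (optional): input image for image-to-video tasks.
wan_generate() {
    if [ -n "${5:-}" ]; then
        run python generate.py --task "$1" --size "$2" --ckpt_dir "$3" --image "$5" --prompt "$4"
    else
        run python generate.py --task "$1" --size "$2" --ckpt_dir "$3" --prompt "$4"
    fi
}
```

For example, with `DRY_RUN=0` set and after `wan_setup` and `wan_download Wan2.1-T2V-14B` have completed in the same shell, `wan_generate t2v-14B '1280*720' ./Wan2.1-T2V-14B "Two anthropomorphic cats in boxing gear fight on a spotlighted stage."` would run the text-to-video use case. Quoting `'1280*720'` keeps the shell from treating `*` as a glob.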
