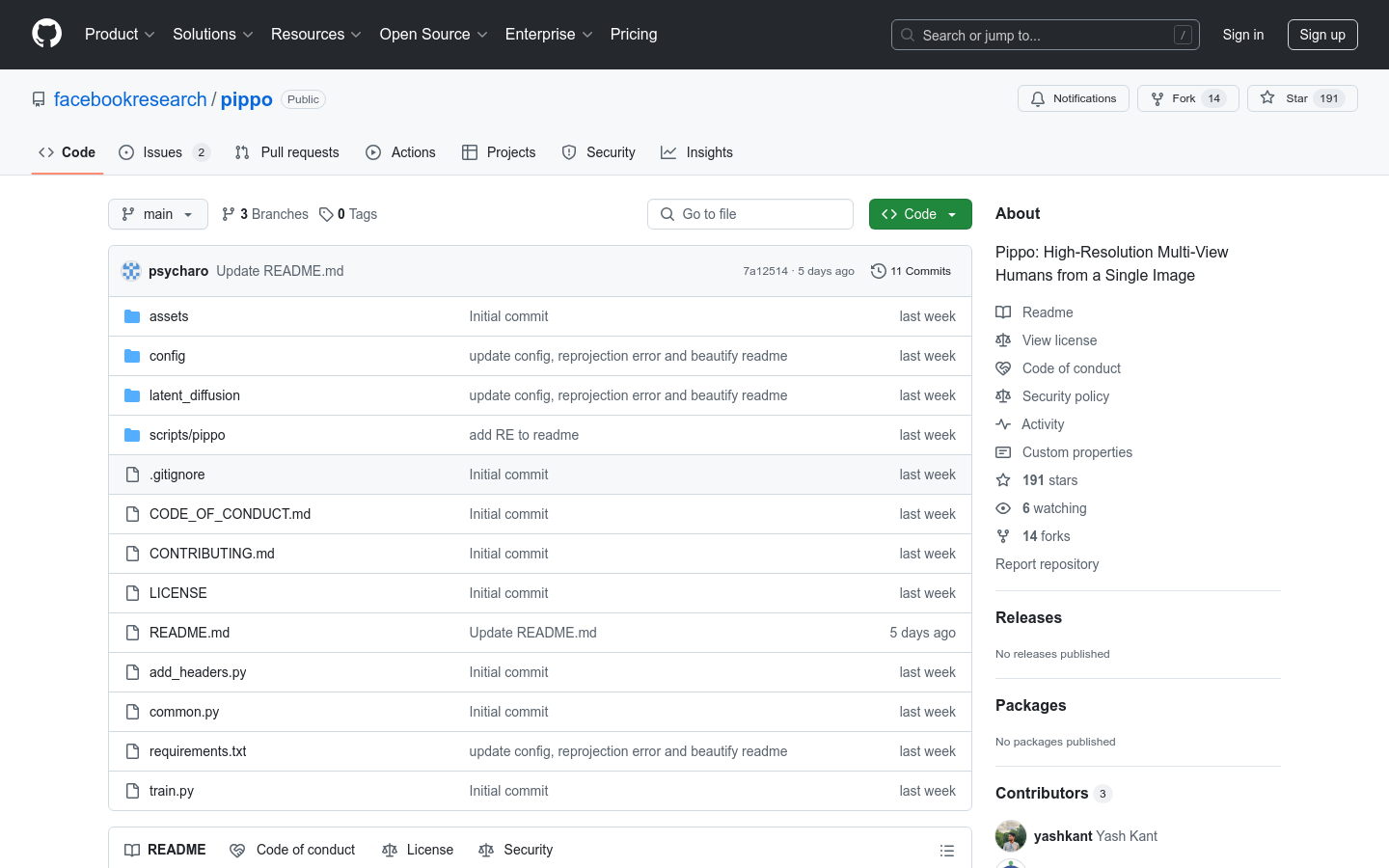

Pippo

Overview:

Pippo, developed by Meta Reality Labs in collaboration with several universities, is a generative model that produces high-resolution, multi-view videos from a single ordinary photograph. Its main advantage is that it generates 1K-resolution video without additional inputs such as parametric models or camera parameters. It is built on a multi-view diffusion transformer architecture and has potential applications in areas such as virtual reality and film production. Pippo's code is open source, but pre-trained weights are not included, so users must train the model themselves.

Target Users:

Pippo is aimed at researchers and developers, particularly those working in computer vision, image generation, and virtual reality. It gives them a tool for exploring high-quality video generation from single images, with applications such as film production and virtual reality content development.

Use Cases

Researchers use the Pippo model to generate high-quality, multi-view videos from single photographs for virtual reality content creation.

Film production teams leverage Pippo to generate high-resolution virtual character videos, saving on filming costs.

Developers extend the code architecture of Pippo to develop new image generation applications.

Features

Generates high-resolution, multi-view videos from a single photograph.

Supports model training at different resolutions (128, 512, 1024).

Provides sample training code and dataset support (e.g., Ava-256).

Calculates reprojection error between generated and real images.

Offers techniques for controlling MLP and attention bias to optimize diffusion transformer performance.

Supports running on different GPU configurations (e.g., A100, T4).
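The reprojection-error feature listed above can be illustrated with a generic pinhole-camera sketch. This does not reproduce `scripts/pippo/reprojection_error.py`, whose internals are not described here; the function names, the intrinsics matrix `K`, and the pose `R`, `t` are assumptions chosen for illustration. The idea is to project known 3D points into an image and measure the average pixel distance to the 2D points actually observed.

```python
import numpy as np


def project_points(points_3d, K, R, t):
    """Project Nx3 world points to Nx2 pixel coordinates (pinhole model)."""
    cam = points_3d @ R.T + t      # world frame -> camera frame
    uvw = cam @ K.T                # camera frame -> homogeneous pixels
    return uvw[:, :2] / uvw[:, 2:3]


def reprojection_error(points_3d, observed_2d, K, R, t):
    """Mean Euclidean distance (pixels) between projected and observed points."""
    projected = project_points(points_3d, K, R, t)
    return float(np.mean(np.linalg.norm(projected - observed_2d, axis=1)))


# Hypothetical camera: focal length 100 px, principal point (50, 50),
# identity rotation, zero translation.
K = np.array([[100.0, 0.0, 50.0],
              [0.0, 100.0, 50.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.zeros(3)

points_3d = np.array([[0.0, 0.0, 1.0],
                      [0.1, 0.0, 1.0],
                      [0.0, 0.2, 2.0]])

# Observations: the first point is off by (3, 4) pixels, the others are exact.
observed_2d = project_points(points_3d, K, R, t)
observed_2d[0] += np.array([3.0, 4.0])

error = reprojection_error(points_3d, observed_2d, K, R, t)
print(f"mean reprojection error: {error:.3f} px")
```

A lower value means the observed points agree more closely with the projected geometry, which is the intuition behind using this kind of metric to compare generated and real views.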

How to Use

1. Clone the repository: `git clone git@github.com:facebookresearch/pippo.git` and navigate to the directory.

2. Set up the environment: Create a Conda environment and install dependencies, such as PyTorch and other libraries.

3. Download sample data: Run `python scripts/pippo/download_samples.py` to download a sample of the Ava-256 dataset.

4. Start training: Choose an appropriate model configuration file based on your GPU configuration and run `python train.py` to begin training.

5. Calculate reprojection error: Run `python scripts/pippo/reprojection_error.py` to compute the reprojection error between generated and real images.
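Steps 3 to 5 above can be chained in a small driver script. The sketch below is only a convenience wrapper, assuming it is run from the root of the cloned repository inside the prepared Conda environment. The commands are the ones quoted in the steps; the `run_steps` helper and its `extra_train_args` parameter are hypothetical, and how the configuration file is passed to `train.py` is not stated here, so check the repository README before filling it in.

```python
import subprocess

# Commands quoted from the steps above; nothing else is assumed about the CLI.
STEPS = [
    ("download samples", ["python", "scripts/pippo/download_samples.py"]),
    ("train", ["python", "train.py"]),
    ("reprojection error", ["python", "scripts/pippo/reprojection_error.py"]),
]


def run_steps(steps=STEPS, extra_train_args=(), dry_run=True, cwd="."):
    """Run each step in order; with dry_run=True only return the command lines.

    extra_train_args is a hypothetical hook for whatever arguments train.py
    expects (for example a configuration file); it is empty by default.
    """
    planned = []
    for name, cmd in steps:
        full = list(cmd)
        if name == "train":
            full += list(extra_train_args)
        planned.append(" ".join(full))
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(full, cwd=cwd, check=True)
    return planned


if __name__ == "__main__":
    # Dry run by default: print the commands without executing them.
    for line in run_steps():
        print(line)
```

Calling `run_steps(dry_run=False)` executes the commands for real; keeping the dry run as the default avoids launching a long training job by accident.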
