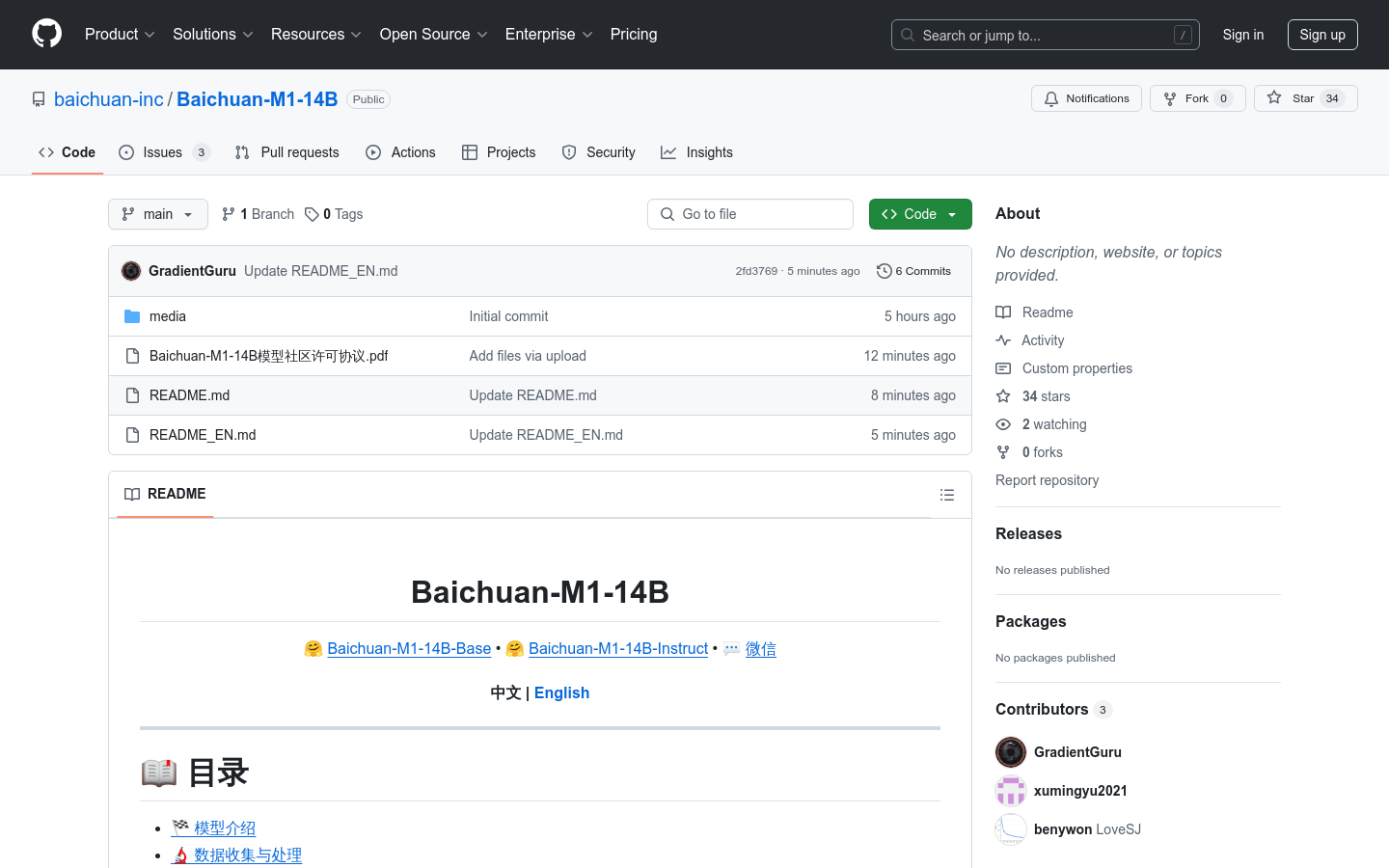

Baichuan-M1-14B

Overview

Baichuan-M1-14B is an open-source large language model developed by Baichuan Intelligence and optimized for medical scenarios. It was trained from scratch on 20 trillion tokens of high-quality medical and general data covering more than 20 medical departments, and it shows strong contextual understanding and long-sequence performance. The model excels in healthcare applications and achieves state-of-the-art results on general tasks among models of similar size. Its architecture and training approach support complex tasks such as medical reasoning and diagnosis, making it a solid foundation for AI applications in healthcare.

Target Users

This model is designed for researchers, developers, and professionals in the medical field, and supports medical diagnostics, medical research, and clinical practice. Its language generation and reasoning capabilities make it well suited to healthcare AI applications.

Use Cases

Assist physicians in clinical practice for symptom diagnosis and treatment recommendations.

Aid in medical education by helping students learn and understand complex medical knowledge.

Support medical research by assisting in writing medical papers through text generation and reasoning.

Features

Trained from scratch on 20 trillion tokens of high-quality medical and general data.

Fine-grained modeling of more than 20 medical departments improves performance on specialized tasks.

A short convolution attention mechanism significantly improves in-context learning.

A sliding window attention mechanism improves performance on long-sequence tasks.

Multi-stage curriculum learning and alignment optimization strengthen the model's capabilities across the board.

Both Base and Instruct models are available to suit different usage scenarios.

Supports rapid deployment and inference across multiple application scenarios.
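
To make the sliding window attention feature more concrete, the sketch below builds the kind of attention mask such a mechanism typically relies on: each position may attend only to itself and a fixed number of preceding positions. This is a generic illustration, not Baichuan's implementation; the function name `sliding_window_mask`, the causal setup, and the window size are all assumptions chosen for the example.

```python
def sliding_window_mask(seq_len, window):
    """Return a seq_len x seq_len boolean mask (hypothetical helper).

    mask[i][j] is True when query position i may attend to key position j,
    i.e. j is not in the future (causal) and lies within the last
    `window` positions, counting position i itself.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


# Print a small mask: 1 marks an allowed query/key pair, 0 a blocked one.
for row in sliding_window_mask(seq_len=5, window=2):
    print("".join("1" if allowed else "0" for allowed in row))
```

Because every row contains at most `window` allowed positions, the attention cost per token stays bounded as the sequence grows, which is the usual motivation for this design on long inputs.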

How to Use

1. Access the Hugging Face platform and load the Baichuan-M1-14B-Instruct model.

2. Initialize the model and tokenizer using AutoTokenizer and AutoModelForCausalLM.

3. Provide a prompt, such as a medical question or a task instruction.

4. Call the model to generate text, setting parameters like maximum generation length.

5. Decode the generated text and output the results for further analysis or application.
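
The steps above can be sketched in Python with the Hugging Face `transformers` library. This is a minimal sketch under several assumptions that the text does not state: the repository ID `baichuan-inc/Baichuan-M1-14B-Instruct`, the need for `trust_remote_code=True`, the use of a chat template, the bfloat16 dtype, and the helper names `build_messages` and `generate_answer` are all illustrative.

```python
# Assumed Hugging Face repository ID; verify it on the model page before use.
MODEL_NAME = "baichuan-inc/Baichuan-M1-14B-Instruct"


def build_messages(question, system_prompt="You are a helpful assistant."):
    """Step 3: wrap a prompt in the chat-message format (hypothetical helper)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def generate_answer(question, max_new_tokens=512):
    """Steps 1, 2, 4 and 5: load the model, generate, and decode the reply."""
    # Heavy dependencies are imported here so that build_messages can be
    # used on machines without torch or transformers installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    device = "cuda" if torch.cuda.is_available() else "cpu"

    # Steps 1-2: download the tokenizer and model from Hugging Face.
    # trust_remote_code=True is assumed to be required for this model.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_NAME, trust_remote_code=True, torch_dtype=torch.bfloat16
    ).to(device)

    # Step 3: turn the chat messages into a single prompt string.
    text = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Step 4: generate, capping the number of newly generated tokens.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Step 5: drop the prompt tokens and decode only the new ones.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

A call such as `generate_answer("What are common symptoms of anemia?")` downloads the weights on first use. A 14B-parameter model in bfloat16 needs roughly 28 GB of memory for the weights alone, so a suitably large GPU is advisable.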
