LatentSync

Overview

LatentSync, developed by ByteDance, is a lip-sync framework based on audio-conditioned latent diffusion models. It builds directly on the capabilities of Stable Diffusion to model complex audio-visual correlations, without intermediate motion representations. Its proposed Temporal Representation Alignment (TREPA) technique improves the temporal consistency of generated video frames while preserving lip-sync accuracy. The technology has practical value in video production, virtual avatars, and animation, where automating lip animation can raise production efficiency, reduce labor costs, and give viewers a more realistic and natural audio-visual experience. Because LatentSync is open source, it can be applied widely in both academic research and industrial practice, supporting further development of related techniques.

Target Users

Designed for professionals in video production, animation, virtual avatar development, game development, and visual effects, as well as academics and enthusiasts interested in lip-sync technology.

Use Cases

When producing virtual avatar videos, LatentSync can automatically generate realistic lip movements based on the avatar's voice, enhancing the video's authenticity and interactivity.

Animation production companies can use LatentSync to automatically generate lip animation that matches character dubbing, saving the time and cost of traditional hand-made lip animation.

Visual effects teams can use LatentSync to repair or improve characters' lip sync in effects shots, improving the overall visual quality.

Features

Audio-conditioned latent diffusion model: Direct modeling of audio-video associations using Stable Diffusion without intermediate motion representations.
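
One common way to condition a diffusion U-Net on audio is cross-attention: latent (video) tokens act as queries, while audio embeddings act as keys and values. The pure-Python sketch below shows that mechanism on toy vectors. It is an illustration under assumptions, not LatentSync's code: the function names and dimensions are hypothetical, and the learned Q/K/V projection weights are replaced by identity maps to keep it short.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def cross_attention(latent_tokens: List[Vector], audio_tokens: List[Vector]) -> List[Vector]:
    """Scaled dot-product cross-attention (hypothetical sketch).

    Queries come from the latent (video) tokens; keys and values come from
    the audio embeddings. Learned projections are omitted (identity).
    """
    if not audio_tokens:
        raise ValueError("need at least one audio token")
    d = len(audio_tokens[0])
    scale = 1.0 / math.sqrt(d)
    outputs = []
    for q in latent_tokens:
        # Attention weights of this latent token over all audio tokens.
        weights = softmax([dot(q, k) * scale for k in audio_tokens])
        # Weighted sum of the audio value vectors.
        out = [sum(w * v[i] for w, v in zip(weights, audio_tokens)) for i in range(d)]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    latents = [[1.0, 0.0], [0.0, 1.0]]  # two toy latent tokens
    audio = [[2.0, 0.0], [0.0, 2.0]]    # two toy audio embeddings
    for row in cross_attention(latents, audio):
        print([round(x, 3) for x in row])
```

Each latent token ends up as a mixture of audio features, weighted toward the audio tokens it is most similar to; this is how audio information can steer the denoiser without an explicit motion representation.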

Temporal Representation Alignment (TREPA): Improves the temporal consistency of generated video frames using temporal representations extracted by large-scale self-supervised video models.
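
The idea behind a representation-alignment loss can be sketched as follows: encode the generated clip and the ground-truth clip with the same frozen video encoder, then penalize the distance between the two representations. In this minimal sketch, `toy_temporal_encoder` is a hypothetical stand-in (frame-to-frame differences) for a large self-supervised video model, and mean squared error is an assumed choice of distance.

```python
from typing import Callable, List

Frame = List[float]  # a flattened frame (toy: a few pixel values)
Clip = List[Frame]   # a sequence of frames


def toy_temporal_encoder(clip: Clip) -> List[float]:
    """Hypothetical stand-in for a frozen self-supervised video encoder.

    Returns frame-to-frame differences, so the representation reflects how
    the clip changes over time rather than any single frame.
    """
    features: List[float] = []
    for prev, curr in zip(clip, clip[1:]):
        features.extend(c - p for p, c in zip(prev, curr))
    return features


def trepa_style_loss(generated: Clip, target: Clip,
                     encoder: Callable[[Clip], List[float]] = toy_temporal_encoder) -> float:
    """Mean squared error between the temporal representations of two clips."""
    g, t = encoder(generated), encoder(target)
    if not g or len(g) != len(t):
        raise ValueError("clips need matching shapes and at least two frames")
    return sum((a - b) ** 2 for a, b in zip(g, t)) / len(g)


if __name__ == "__main__":
    target = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]   # smooth motion
    jittery = [[0.0, 0.0], [2.0, 2.0], [2.0, 2.0]]  # jump, then freeze
    print(trepa_style_loss(target, target))
    print(trepa_style_loss(jittery, target))
```

With this toy encoder, a clip that moves the same way as the target scores 0 even if its pixel values are offset, while a jittery clip is penalized; a real video encoder would also respond to appearance.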

High lip-sync accuracy: Improves lip synchronization in generated videos through training objectives such as SyncNet loss.
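
A SyncNet-style loss, as popularized by Wav2Lip, scores how well an audio embedding and a mouth-region embedding agree and penalizes low agreement. The sketch below assumes both embeddings are already computed (the encoders are out of scope) and uses cosine similarity followed by binary cross-entropy against an "in sync" label; the exact formulation used in LatentSync may differ, and the function names are hypothetical.

```python
import math
from typing import List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as "no agreement"
    return dot / (norm_a * norm_b)


def sync_loss(audio_emb: List[float], visual_emb: List[float], eps: float = 1e-7) -> float:
    """Binary cross-entropy against the label 'in sync' (1).

    The cosine similarity is clamped into [eps, 1] so it can be read as a
    probability p; BCE with target 1 then reduces to -log(p) = log(1 / p).
    """
    p = cosine_similarity(audio_emb, visual_emb)
    p = min(max(p, eps), 1.0)
    return math.log(1.0 / p)


if __name__ == "__main__":
    audio = [0.6, 0.8]
    print(sync_loss(audio, [0.6, 0.8]))   # aligned embeddings: loss near 0
    print(sync_loss(audio, [0.8, -0.6]))  # orthogonal embeddings: large loss
```

During generator training, such a loss is typically computed with a frozen, pretrained SyncNet, so gradients push the generated mouth region toward motion that the sync expert judges to match the audio.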

Complete data processing workflow: Scripts covering video restoration, frame rate resampling, scene detection, and face detection and alignment.
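
Frame rate resampling is one of the listed steps. A minimal sketch, assuming nearest-frame selection (the actual scripts may delegate this to a tool such as ffmpeg, and the 30 to 25 fps example is illustrative), maps each output frame to the source frame closest in time. The helper name is hypothetical, and decoding and encoding the video are out of scope.

```python
from typing import List


def resample_frame_indices(num_src_frames: int, src_fps: float, dst_fps: float) -> List[int]:
    """Map each output frame to the nearest-in-time source frame (hypothetical helper).

    Output frame j sits at time j / dst_fps, which corresponds to source
    position j * src_fps / dst_fps. Rounding to the nearest index duplicates
    frames when upsampling and drops frames when downsampling.
    """
    if num_src_frames <= 0 or src_fps <= 0 or dst_fps <= 0:
        raise ValueError("frame count and frame rates must be positive")
    # Keep the clip duration (num_src_frames / src_fps) unchanged.
    num_dst_frames = int(num_src_frames * dst_fps / src_fps + 0.5)
    indices = []
    for j in range(num_dst_frames):
        i = int(j * src_fps / dst_fps + 0.5)  # round half up
        indices.append(min(i, num_src_frames - 1))
    return indices


if __name__ == "__main__":
    # One second of 30 fps video resampled to 25 fps.
    print(resample_frame_indices(30, 30, 25))
```

Working with indices rather than decoded frames keeps the step cheap; the audio track would be resampled separately so the two streams stay aligned.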

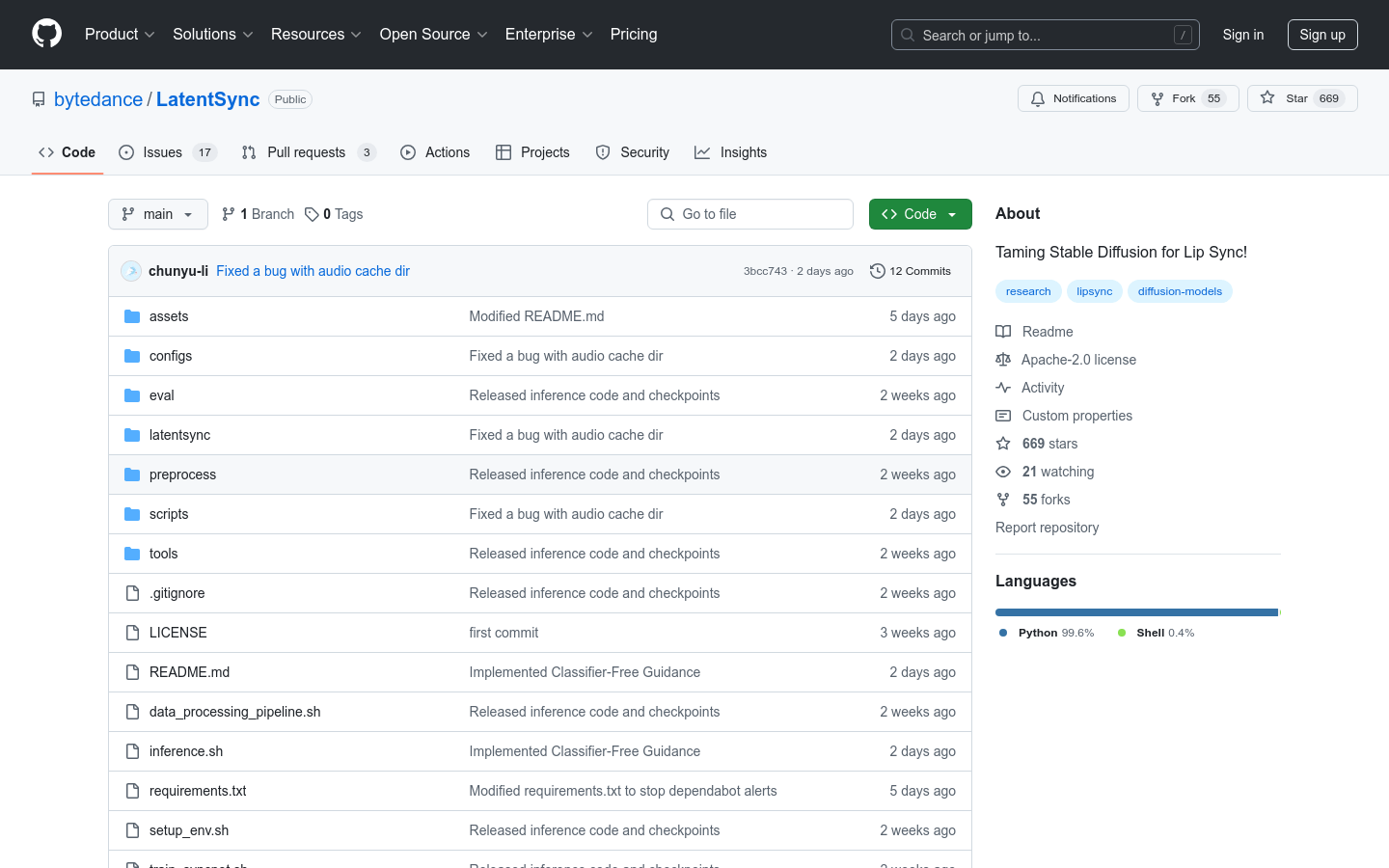

Open-source training and inference code: Includes training scripts for U-Net and SyncNet, as well as inference scripts, enabling users to train and apply models easily.

Pretrained model checkpoints: Released checkpoint files that users can download and use directly.

Supports various video styles: Capable of processing different styles of video content, including realistic and animated videos.

How to Use

1. Environment Setup: Install the required dependencies and download the model checkpoint files by running the setup_env.sh script.

2. Data Processing: Preprocess video data using the data_processing_pipeline.sh script, which performs video restoration, frame rate resampling, scene detection, and face detection and alignment.

3. Model Training: Run the train_unet.sh and train_syncnet.sh scripts to train the U-Net and SyncNet, respectively.

4. Inference: Generate lip-synced videos by running the inference.sh script; adjust the guidance_scale parameter as needed to improve lip-sync accuracy.

5. Result Evaluation: Check the generated videos for how well lip movements align with the audio, as well as for overall visual quality.
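
In Stable Diffusion pipelines, a parameter named guidance_scale conventionally sets the weight of classifier-free guidance. Assuming LatentSync's guidance_scale follows that convention with the audio as the condition, each denoising step combines an unconditional and an audio-conditioned noise prediction as sketched below. The function name is hypothetical and the predictions are toy vectors.

```python
from typing import List


def apply_guidance(noise_uncond: List[float], noise_cond: List[float],
                   guidance_scale: float) -> List[float]:
    """Classifier-free guidance (hypothetical helper).

    Moves the prediction from the unconditional estimate toward, and past,
    the conditioned one: uncond + scale * (cond - uncond).
    guidance_scale = 1.0 returns the conditioned prediction unchanged;
    larger values strengthen the influence of the condition.
    """
    if len(noise_uncond) != len(noise_cond):
        raise ValueError("predictions must have the same length")
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


if __name__ == "__main__":
    uncond = [0.0, 1.0]
    cond = [1.0, 1.0]
    for scale in (1.0, 1.5, 2.0):
        print(scale, apply_guidance(uncond, cond, scale))
```

Only components where the two predictions differ are affected, and the effect grows linearly with the scale. In Stable Diffusion practice, very high scales tend to trade visual quality for adherence to the condition, which is why the value is usually tuned rather than maximized.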
