DRT-o1-7B

Overview

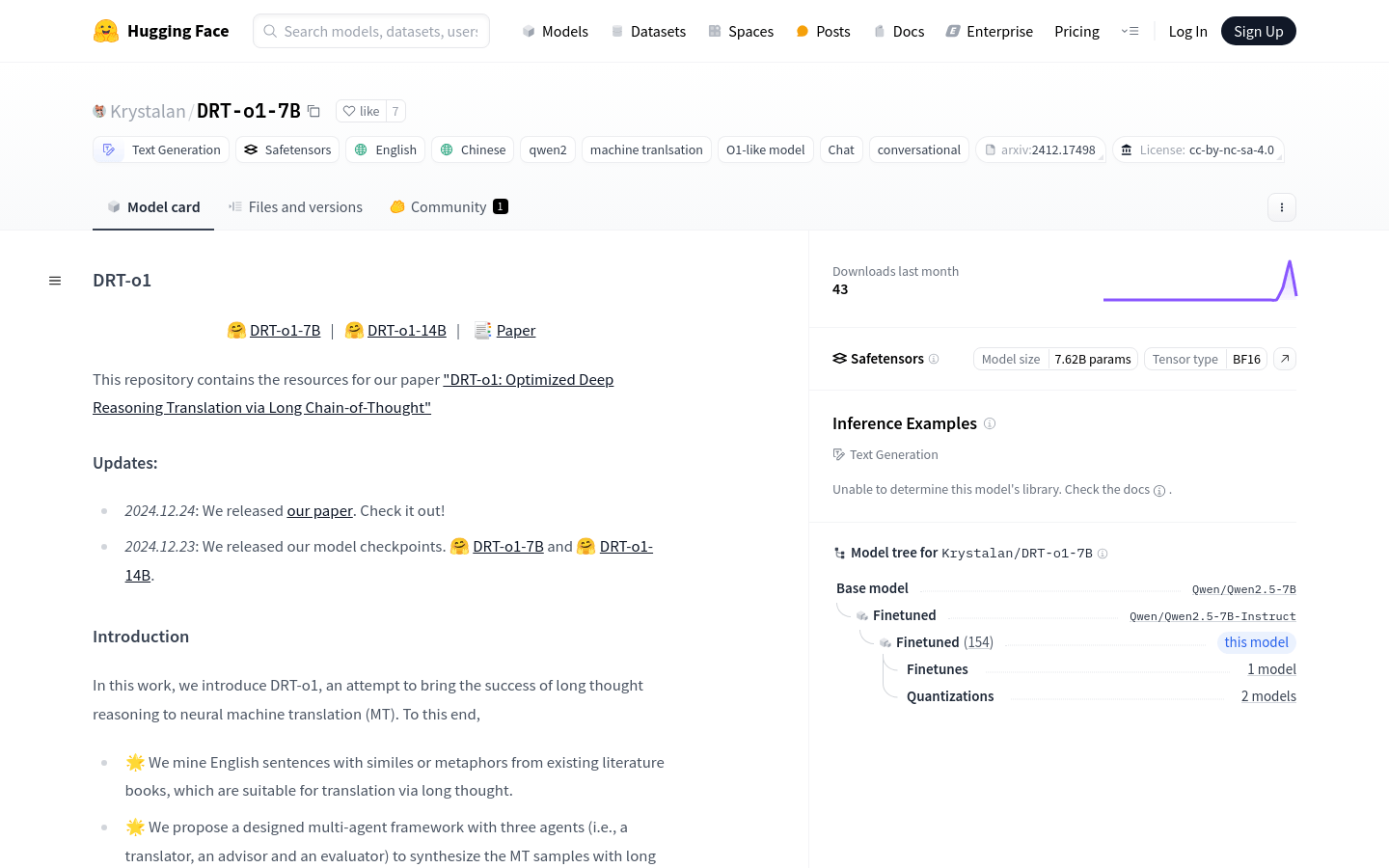

DRT-o1-7B applies long-form reasoning to neural machine translation (MT). To produce its training samples, the project identifies English sentences that benefit from long-form reasoning during translation, then synthesizes MT samples with a multi-agent framework made up of three roles: a translator, an advisor, and an evaluator. DRT-o1-7B and its larger sibling DRT-o1-14B are trained on the Qwen2.5-7B-Instruct and Qwen2.5-14B-Instruct backbones, respectively. The model's main strength is handling complex linguistic structures that call for deeper semantic understanding, which matters for both the accuracy and the naturalness of the translation.

Target Users
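One way to picture the translator, advisor, and evaluator roles is as a refinement loop. The sketch below is an illustration only: the loop structure, the stopping rule, the names `refine_translation`, `toy_translator`, `toy_advisor`, and `toy_evaluator`, and the parameters `max_rounds` and `good_enough` are all assumptions, not the project's actual pipeline. The three roles are stand-in callables rather than real language models.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical role signatures (assumptions for illustration):
# translator(source, previous_draft, advice) -> new draft
# advisor(source, draft) -> advice text
# evaluator(source, draft) -> quality score (higher is better)
Translator = Callable[[str, Optional[str], Optional[str]], str]
Advisor = Callable[[str, str], str]
Evaluator = Callable[[str, str], float]


def refine_translation(
    source: str,
    translator: Translator,
    advisor: Advisor,
    evaluator: Evaluator,
    max_rounds: int = 3,
    good_enough: float = 0.9,
) -> List[Tuple[str, float]]:
    """Return every (draft, score) pair produced while refining a translation."""
    draft = translator(source, None, None)      # first attempt, no advice yet
    score = evaluator(source, draft)
    history = [(draft, score)]
    for _ in range(max_rounds):
        if score >= good_enough:                # assumed stopping rule
            break
        advice = advisor(source, draft)         # advisor critiques the draft
        draft = translator(source, draft, advice)  # translator revises
        score = evaluator(source, draft)        # evaluator re-scores
        history.append((draft, score))
    return history


# Toy stand-ins so the loop can be run without any model.
def toy_translator(source: str, previous: Optional[str], advice: Optional[str]) -> str:
    return "draft" if previous is None else previous + "+"


def toy_advisor(source: str, draft: str) -> str:
    return "make it more idiomatic"


def toy_evaluator(source: str, draft: str) -> float:
    return float(draft.count("+"))              # each revision adds one point
```

In a real setup each role would be backed by a language model call, and the recorded drafts and scores could be assembled into a long-form reasoning sample. How the project actually does this is not described here.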

DRT-o1-7B is aimed at natural language processing researchers and developers, as well as machine translation service providers. It offers them a reasoning-based approach to improving translation quality, particularly for text with complex linguistic structures, and it serves as a starting point for further research into long-form reasoning in machine translation.

Use Cases

Example 1: Using the DRT-o1-7B model to translate English literary works containing metaphors into Chinese.

Example 2: Applying DRT-o1-7B on a cross-cultural communication platform to provide high-quality automatic translation services.

Example 3: Utilizing the DRT-o1-7B model in academic research to analyze and compare the performance of different machine translation models.

Features

- Application of long-form reasoning in machine translation: Enhances translation quality through extensive reasoning.

- Multi-agent framework design: Incorporates the roles of translator, advisor, and evaluator to synthesize MT samples.

- Training based on Qwen2.5-7B-Instruct and Qwen2.5-14B-Instruct: Utilizes advanced pre-trained models as a foundation.

- Supports translation between English and Chinese: Capable of handling machine translation tasks between these two languages.

- Adaptable to complex linguistic structures: Able to process sentences that contain metaphors or other complex expressions.

- Provides model checkpoints: Convenient for researchers and developers for usage and further studies.

- Supports deployment on Hugging Face Transformers and vLLM: Easy to integrate and use.

How to Use

1. Visit the Hugging Face website and navigate to the DRT-o1-7B model page.

2. Import the necessary libraries and modules based on the code examples provided on the page.

3. Set the model name to 'Krystalan/DRT-o1-7B' and load the model and tokenizer.

4. Prepare the input text, such as the English sentence that needs translation.

5. Use the tokenizer to convert the input text into a format acceptable to the model.

6. Input the converted text into the model, setting generation parameters such as the maximum number of new tokens.

7. Once the model generates the translated result, decode the generated tokens using the tokenizer to obtain the translated text.

8. Output and evaluate the translation, post-processing it as needed.
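The steps above can be sketched with Hugging Face Transformers as follows. This is a minimal sketch, not the official example: the prompt wording in `build_messages`, the helper names `build_messages` and `translate`, and the default `max_new_tokens` value are assumptions, so check the model page for the recommended prompt and settings. Only the model ID `Krystalan/DRT-o1-7B` comes from the steps themselves.

```python
from typing import Dict, List

MODEL_NAME = "Krystalan/DRT-o1-7B"  # model ID from step 3


def build_messages(english_text: str) -> List[Dict[str, str]]:
    """Step 4: wrap the English source sentence in a chat-style message list."""
    # Assumed prompt wording; the model page may recommend a different one.
    prompt = (
        "Please translate the following text from English to Chinese:\n"
        + english_text
    )
    return [{"role": "user", "content": prompt}]


def translate(english_text: str, max_new_tokens: int = 2048) -> str:
    """Steps 2-7: load the model, generate, and decode the new tokens."""
    # Imported here so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_NAME,
        torch_dtype="auto",
        device_map="auto",  # requires the `accelerate` package
    )

    # Step 5: convert the messages into the model's chat format, then tokenize.
    text = tokenizer.apply_chat_template(
        build_messages(english_text),
        tokenize=False,
        add_generation_prompt=True,
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Step 6: generate, capping the number of newly produced tokens.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Step 7: drop the prompt tokens and decode only the generated part.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `translate(...)` with an English sentence downloads the weights on first use and returns the raw generated text. Because the model reasons at length before giving its answer, the returned string may contain that reasoning alongside the translation, which is where the post-processing in step 8 comes in.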
