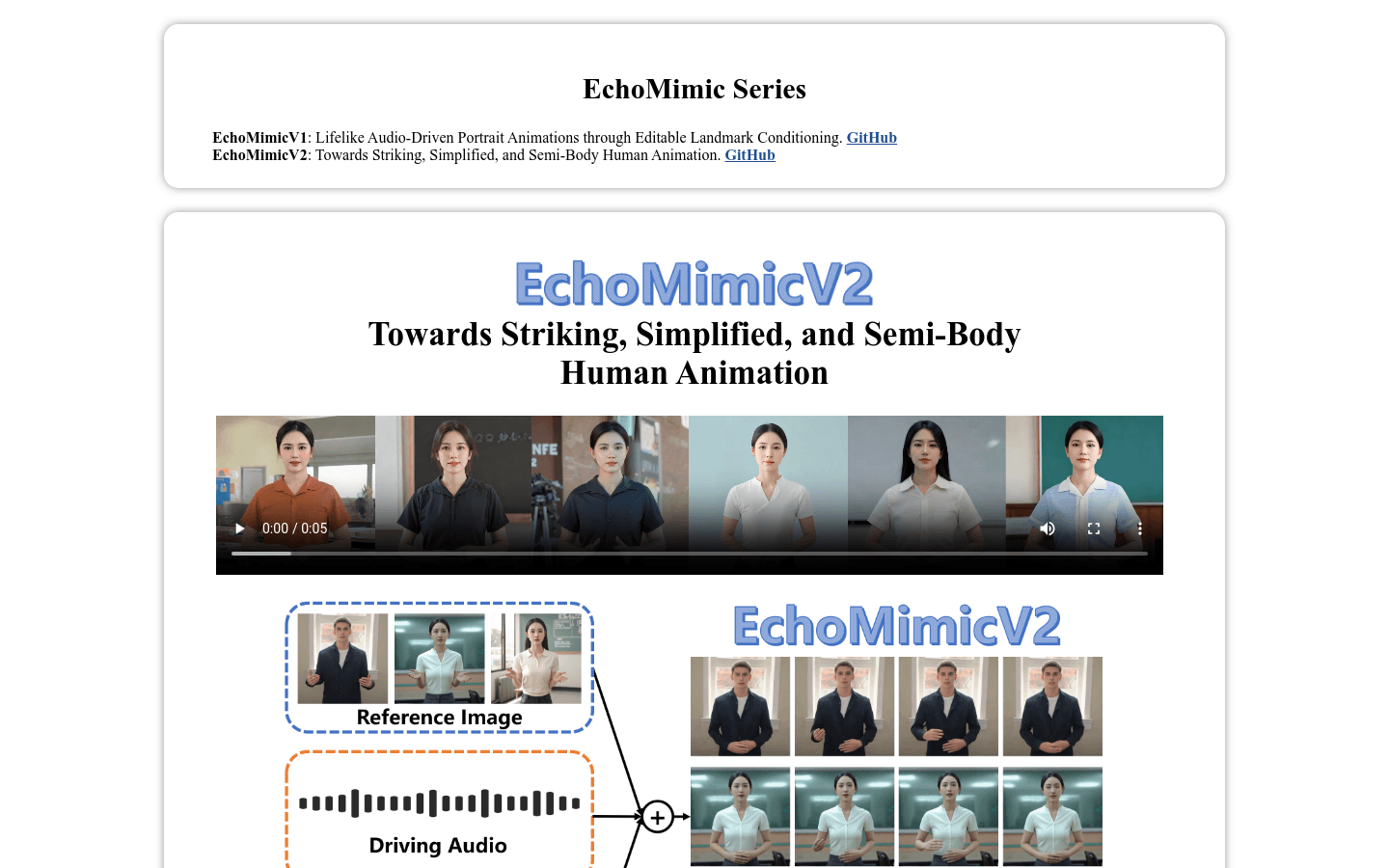

EchoMimicV2

Overview

EchoMimicV2 is an upper-body human animation method developed by the Terminal Technology Department of Alipay, Ant Group. Given a reference image, an audio clip, and a sequence of gestures (hand poses), it generates high-quality animated video in which upper-body motion stays coherent with the audio. Its Audio-Pose dynamic coordination strategy simplifies the previously complex set of driving conditions: it reduces conditional redundancy while enhancing the expressiveness of upper-body details, facial features, and gestures. A head-part attention mechanism allows headshot data to be integrated into the training framework; the mechanism can be omitted during inference, which keeps the generation pipeline simple. EchoMimicV2 also uses a stage-specific denoising loss that guides motion, detail, and low-level quality at different stages of denoising. According to its authors, the method outperforms existing approaches in both quantitative and qualitative evaluations of upper-body human animation.

Target Users

EchoMimicV2 is aimed at professionals who need realistic human animation, such as animators, game developers, and video content creators. It shortens the animation production process while maintaining output quality, which suits commercial and creative projects that require rapid content generation.

Use Cases

Animators using EchoMimicV2 to create realistic upper-body character animations for films.

Game developers utilizing EchoMimicV2 to generate dynamic representations of characters in games.

Video content creators employing EchoMimicV2 to produce instructional animations for online courses.

Features

Generate high-quality animation videos using reference images, audio clips, and gesture sequences.

Enhance upper-body detail and the expressiveness of faces and gestures through the Audio-Pose dynamic coordination strategy.

Reduce conditional redundancy and simplify the animation production process.

Use a head-part attention mechanism to integrate headshot data into training, compensating for scarce upper-body data.

Apply a stage-specific denoising loss to guide motion, detail, and low-level quality at different stages of denoising.

Provide a new benchmark for evaluating upper-body human animation effects.
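
The stage-specific denoising loss listed above can be pictured as choosing which training objective dominates at each point of the denoising process. The sketch below is only an illustration, not the EchoMimicV2 implementation: the function name `active_objective`, the equal-thirds `boundaries`, and the ordering (motion first, then detail, then low-level quality) are all assumptions made for the example.

```python
def active_objective(progress: float, boundaries=(1 / 3, 2 / 3)) -> str:
    """Pick the objective emphasized at a given point of denoising.

    progress: 0.0 at the start of denoising (noisiest), 1.0 at the end.
    boundaries: hypothetical split points between the three stages.
    """
    if not 0.0 <= progress <= 1.0:
        raise ValueError("progress must be in [0, 1]")
    first, second = boundaries
    if progress < first:
        return "motion"             # assumed: coarse pose and motion come first
    if progress < second:
        return "detail"             # assumed: character details are refined next
    return "low_level_quality"      # assumed: color and texture quality come last


for p in (0.0, 0.5, 1.0):
    print(p, active_objective(p))
```

In a real training loop, the returned label would select or weight a loss term for the sampled timestep; how EchoMimicV2 actually partitions the stages is described in its own paper and code.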

How to Use

1. Prepare reference images, audio clips, and gesture sequences.

2. Visit the GitHub page of EchoMimicV2 to download the relevant code and models.

3. Set up the development environment and dependencies according to the documentation provided by EchoMimicV2.

4. Input the prepared reference images, audio clips, and gesture sequences into the EchoMimicV2 model.

5. Run the EchoMimicV2 model to generate animation videos.

6. Review the generated animation video to ensure audio content is coherent with the upper-body movements.

7. If necessary, adjust input conditions or model parameters to optimize the animation effects.

8. Utilize the generated animation video for commercial projects or personal creations.
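
Before running the model (steps 1 and 4 above), it can help to check that the prepared inputs are consistent. The following is a minimal sketch, not part of EchoMimicV2: the helper names `frames_needed` and `check_inputs`, the default of 24 frames per second, and the assumption that the gesture sequence is stored as one file per frame in a directory are all hypothetical, so adjust them to match the project's documentation.

```python
import math
from pathlib import Path


def frames_needed(audio_seconds: float, fps: int = 24) -> int:
    """Number of video frames required to cover the whole audio clip."""
    if audio_seconds < 0 or fps <= 0:
        raise ValueError("audio_seconds must be >= 0 and fps must be > 0")
    return math.ceil(audio_seconds * fps)


def check_inputs(reference_image, audio_clip, pose_dir, audio_seconds, fps=24):
    """Return a list of problems found; an empty list means the inputs look usable."""
    problems = []
    if not Path(reference_image).is_file():
        problems.append(f"reference image not found: {reference_image}")
    if not Path(audio_clip).is_file():
        problems.append(f"audio clip not found: {audio_clip}")
    pose_path = Path(pose_dir)
    if not pose_path.is_dir():
        problems.append(f"gesture sequence directory not found: {pose_dir}")
    else:
        # Assumption: one file per frame in the gesture (pose) directory.
        available = sum(1 for p in pose_path.iterdir() if p.is_file())
        required = frames_needed(audio_seconds, fps)
        if available < required:
            problems.append(
                f"gesture sequence has {available} frames, audio needs {required}"
            )
    return problems
```

A call such as `check_inputs("ref.png", "speech.wav", "poses/", audio_seconds=5.0)` returns an empty list when all three inputs exist and the gesture sequence is long enough for the audio; otherwise each entry describes one issue to fix before step 5.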
