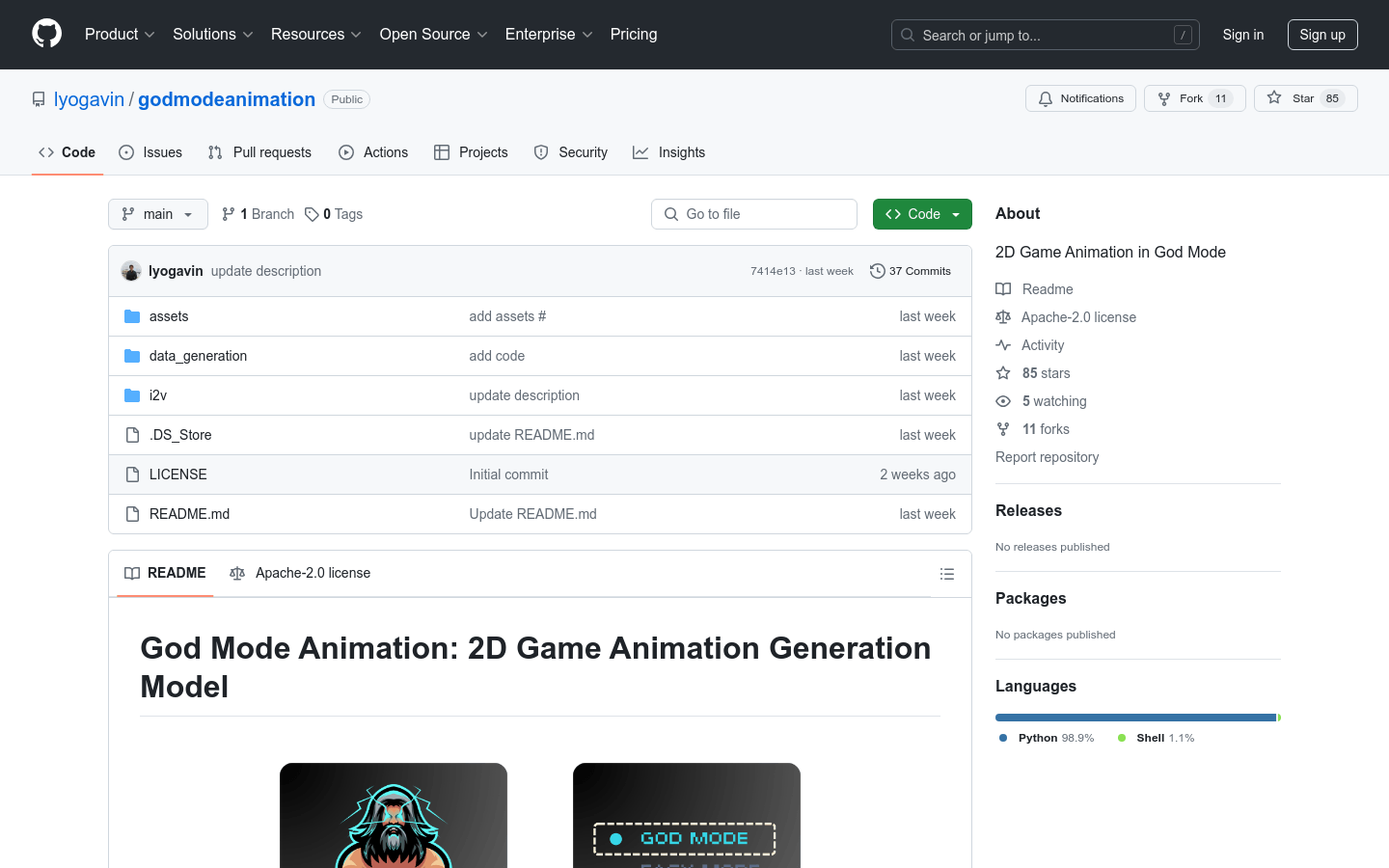

God Mode Animation

Overview

Godmodeanimation is an open-source project that generates 2D game animations using trained text-to-video and image-to-video models. The developers trained the animation generation model on public game animation data and on animations rendered from 3D Mixamo models, and have open-sourced the model, training data, training code, and data generation scripts.

Target Users

Godmodeanimation is aimed at game developers, animators, and machine learning researchers. It automates the generation of 2D game animations, which can save time and resources and leave more room for experimentation.

Use Cases

An animation of a dinosaur jumping in the desert

An animation of Donald Trump jumping over dumpsters in New York

An animation of Harry Potter jumping over trees at Hogwarts Castle

An animation of Taylor Swift jumping with a microphone in a hotel room

Features

Supports text-to-animation and image-to-animation generation

Trained on public game animation data and on animations rendered from 3D Mixamo models

Open-sourced model, training data, training code, and data generation scripts

Provides scripts to train different animation models

Supports rendering Mixamo animations into 2D game animation videos using Blender's Python scripting

Offers a public model on the Replicate platform for trial use

How to Use

Clone the godmodeanimation repository to your local machine

Install the necessary dependencies

Prepare the dataset and place it in the specified directory

Download the pre-trained model into the designated directory

Run the corresponding training script to train the animation model

Use Blender's Python scripting to render Mixamo animations into 2D game animation videos
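The rendering step above can be sketched as a small Python helper that assembles a headless Blender command. This is a minimal illustration under stated assumptions, not the project's actual script: the script name `render_animation.py`, the file paths, and the `--input`/`--output` arguments are hypothetical placeholders. Only Blender's own `--background` and `--python` options, and the `--` separator that forwards the remaining arguments to the script, are standard Blender command-line behavior.

```python
import shlex


def build_blender_render_command(blender_exe, render_script, animation_file, output_dir):
    """Build a headless Blender invocation that runs a Python render script.

    The arguments after "--" are ignored by Blender and left for the script
    to parse; the --input/--output names are hypothetical, chosen only for
    illustration.
    """
    return [
        blender_exe,
        "--background",             # run Blender without opening the UI
        "--python", render_script,  # execute this Python script inside Blender
        "--",                       # end of Blender's own options
        "--input", animation_file,  # hypothetical script argument
        "--output", output_dir,     # hypothetical script argument
    ]


if __name__ == "__main__":
    cmd = build_blender_render_command(
        "blender",
        "render_animation.py",   # hypothetical script name
        "data/mixamo/jump.fbx",  # hypothetical Mixamo export
        "output/jump_frames",    # hypothetical output directory
    )
    # Print the command for inspection; pass cmd to subprocess.run(cmd, check=True)
    # to execute it once Blender is installed and the paths exist.
    print(shlex.join(cmd))
```

Building the command as a list rather than a single string avoids shell-quoting problems when paths contain spaces. Check the repository's own documentation for the real script names and arguments.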
