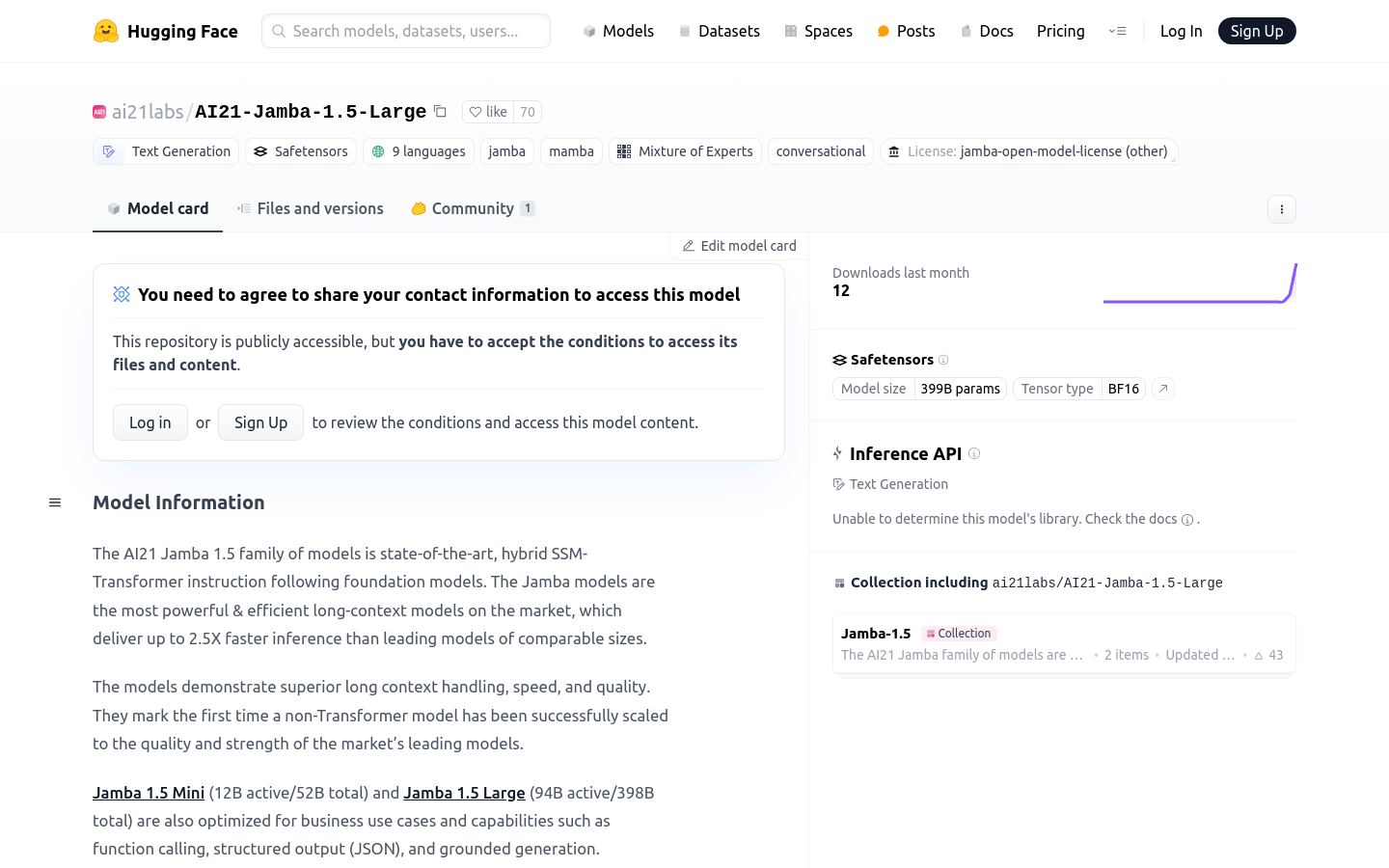

AI21 Jamba 1.5 Large

Overview

AI21 describes the Jamba 1.5 series as among the most powerful long-context models available, with inference up to 2.5 times faster on long contexts than leading competitors. The models combine strong long-context processing, speed, and output quality, and AI21 presents them as the first time a model that is not a pure Transformer (Jamba combines Transformer and Mamba state-space layers) has been scaled to match the quality of market-leading models.

Target Users

The AI21 Jamba 1.5 Large model is designed for enterprise applications that process large volumes of data and long documents, such as natural language processing, data analysis, and machine learning workloads. Its performance and multilingual support make it well suited to businesses operating globally.

Use Cases

Use the Jamba model for multilingual text generation tasks

Leverage the model's long-context capabilities for complex data analysis in business intelligence

Integrate external APIs through the tool-use features to automate business processes

Features

Text generation supporting nine languages

Long-context processing with a context length of up to 256K tokens

Optimized for business use cases such as function calling, structured output (JSON), and grounded generation

Strong performance across multiple benchmarks, including RULER and MMLU

Tool-use support compatible with Hugging Face's tool-use API

JSON mode for generating valid JSON output
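Both tool use and JSON mode come down to exchanging JSON with the model. The sketch below, using only the Python standard library, shows one way to declare a tool in the JSON-schema "function" layout that Hugging Face chat templates accept through the `tools` argument of `tokenizer.apply_chat_template`, to validate a JSON-mode reply, and to execute tool calls parsed from the model's output. The `get_exchange_rate` tool, its rates, and the helper names are hypothetical, and the tool-call format (a JSON array of objects with `name` and `arguments`) is an assumption for illustration rather than Jamba's documented output format; the JSON would first need to be extracted from whatever wrapper the model actually emits.

```python
import json
from typing import Any, Callable


def get_exchange_rate(base: str, quote: str) -> float:
    """Hypothetical tool with hard-coded rates, for illustration only."""
    rates = {("USD", "EUR"): 0.9, ("EUR", "USD"): 1.1}
    return rates[(base, quote)]


# Tool declaration in the JSON-schema "function" layout; a list like this can
# be passed as `tools=` to a Hugging Face tokenizer's apply_chat_template.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_exchange_rate",
        "description": "Return the exchange rate from one currency to another.",
        "parameters": {
            "type": "object",
            "properties": {
                "base": {"type": "string", "description": "Source currency code"},
                "quote": {"type": "string", "description": "Target currency code"},
            },
            "required": ["base", "quote"],
        },
    },
}]

# Maps tool names the model may emit to the Python callables implementing them.
REGISTRY: dict[str, Callable[..., Any]] = {"get_exchange_rate": get_exchange_rate}


def parse_json_reply(text: str, required: set[str]) -> dict[str, Any]:
    """Validate a JSON-mode reply: it must be a JSON object with the given keys."""
    data = json.loads(text)  # raises json.JSONDecodeError if the text is not JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = required - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


def dispatch_tool_calls(calls_json: str) -> list[Any]:
    """Run each call in an assumed JSON array of {"name", "arguments"} objects."""
    results = []
    for call in json.loads(calls_json):
        fn = REGISTRY[call["name"]]  # KeyError for tools that were never declared
        results.append(fn(**call["arguments"]))
    return results
```

For example, `dispatch_tool_calls('[{"name": "get_exchange_rate", "arguments": {"base": "USD", "quote": "EUR"}}]')` returns `[0.9]`. Keeping a registry of declared tools means the application only ever executes functions it chose to expose, whatever name the model produces.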

How to Use

Install the necessary dependencies, such as mamba-ssm and causal-conv1d

Load the model using the vLLM or transformers library

Configure loading options as needed, such as tensor_parallel_size and quantization (both vLLM engine arguments)

Prepare the input data, which can be text, JSON, or other formats

Call the generate method of the vLLM engine or the transformers model to run inference

Process the model's output to obtain the desired results
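The loading and inference steps above can be sketched with vLLM as follows. This is a minimal sketch under several assumptions: the Hugging Face repository ID `ai21labs/AI21-Jamba-1.5-Large`, the `experts_int8` quantization option, the default of 8 GPUs, the 200K-token default for `max_model_len`, and the sampling settings are illustrative values to check against the current model card and your vLLM version, and `engine_kwargs` and `generate` are hypothetical helper names. The vLLM and transformers imports are deferred into `generate` so the configuration helper can be inspected without a GPU stack; actually running `generate` requires both libraries, the mamba-ssm and causal-conv1d kernels, and enough GPU memory for the model.

```python
from typing import Any

MODEL_ID = "ai21labs/AI21-Jamba-1.5-Large"  # assumed Hugging Face repo ID
MAX_CONTEXT = 256 * 1024  # 256K-token context length stated for the model


def engine_kwargs(num_gpus: int = 8, max_model_len: int = 200 * 1024,
                  int8_experts: bool = True) -> dict[str, Any]:
    """Build keyword arguments for vllm.LLM (hypothetical helper)."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be >= 1")
    if not 0 < max_model_len <= MAX_CONTEXT:
        raise ValueError(f"max_model_len must be in (0, {MAX_CONTEXT}]")
    kwargs: dict[str, Any] = {
        "model": MODEL_ID,
        "tensor_parallel_size": num_gpus,  # shard the weights across GPUs
        "max_model_len": max_model_len,    # cap on prompt + generated tokens
    }
    if int8_experts:
        kwargs["quantization"] = "experts_int8"  # assumed vLLM method name
    return kwargs


def generate(messages: list[dict[str, str]], **engine_opts: Any) -> str:
    """Run one chat request; needs vllm, transformers, and enough GPUs."""
    # Imported lazily so engine_kwargs() works without the GPU stack installed.
    from transformers import AutoTokenizer
    from vllm import LLM, SamplingParams

    opts = engine_kwargs(**engine_opts)
    llm = LLM(**opts)
    tokenizer = AutoTokenizer.from_pretrained(opts["model"])
    # Render the chat messages into a single prompt string with the model's template.
    prompt = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, tokenize=False)
    params = SamplingParams(temperature=0.4, top_p=0.95, max_tokens=200)
    outputs = llm.generate([prompt], params)
    return outputs[0].outputs[0].text  # text of the first completion
```

A call such as `generate([{"role": "user", "content": "Summarize the attached quarterly report."}], num_gpus=8)` returns the generated text as a string, which can then be post-processed (for instance, parsed as JSON when JSON mode is used).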
