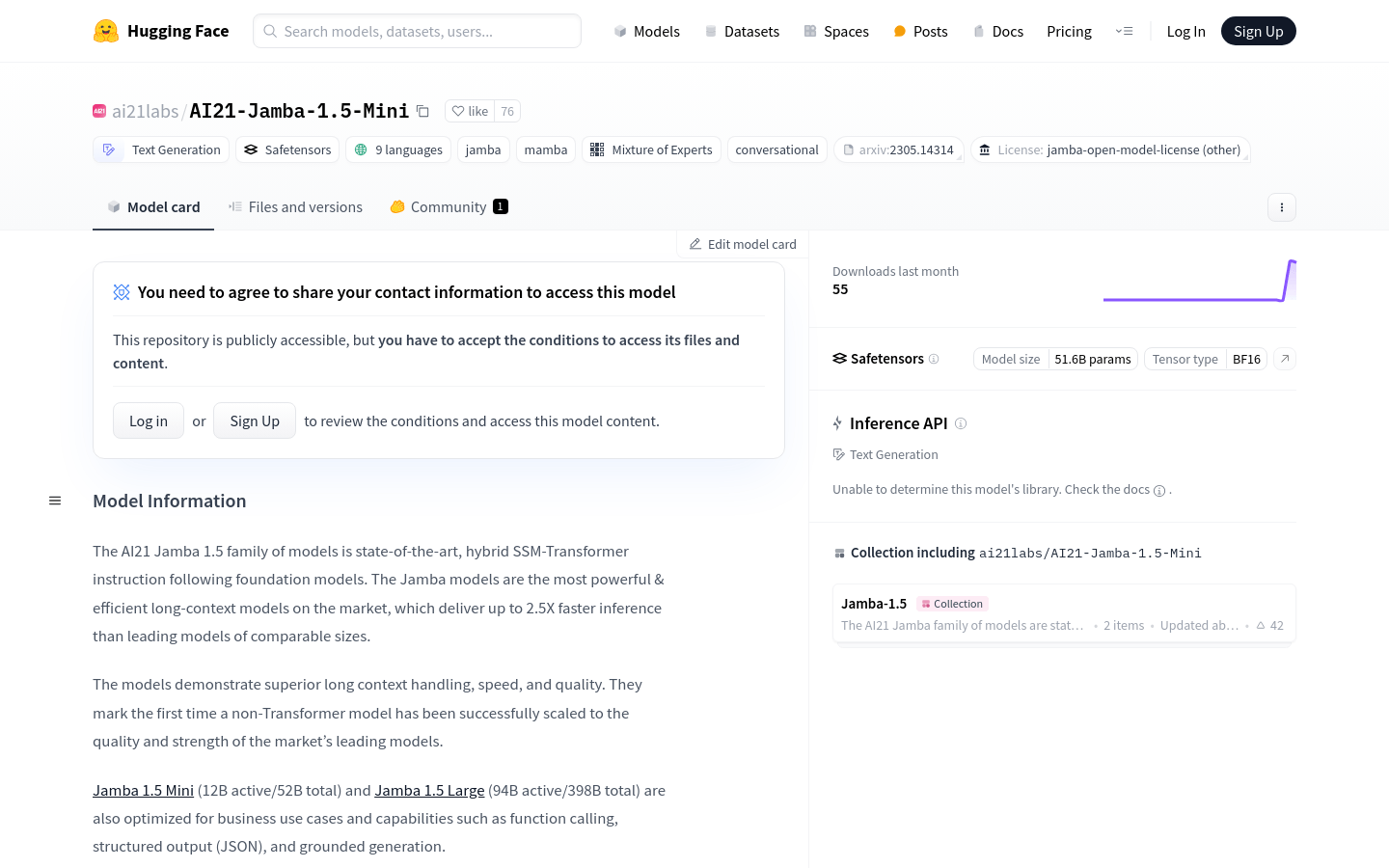

AI21 Jamba 1.5 Mini

Overview

AI21-Jamba-1.5-Mini belongs to the latest generation of hybrid SSM-Transformer, instruction-following foundation models developed by AI21 Labs. It stands out for its long-context processing, speed, and quality, with inference up to 2.5 times faster than other leading models of similar size. Jamba 1.5 Mini and Jamba 1.5 Large are optimized for commercial use cases and capabilities such as function calling, structured output (JSON), and grounded generation.

Target Users

The AI21-Jamba-1.5-Mini model is designed for enterprise users who need to process large volumes of text, including researchers and developers in natural language processing and businesses looking to make their text processing more efficient. It is particularly well suited to long-form text generation, multilingual translation, question-answering systems, and chatbots.

Use Cases

Generating long articles or reports on specific topics.

Serving as the underlying model for a multilingual chatbot that provides real-time translation and dialogue generation.

Powering a corporate knowledge management system that automatically answers employee inquiries and provides decision support.

Features

Supports text generation in 9 languages, including English, Spanish, French, Portuguese, Italian, Dutch, German, Arabic, and Hebrew.

Processes long text with a context length of up to 256K tokens.

Optimized for commercial use cases, including function calling and structured outputs.

Supports tool use, letting users supply custom-defined tools through Hugging Face's tool use API.

Enables document-based grounded generation, answering questions or following instructions based on provided documents or document snippets.

Supports JSON mode, producing valid JSON in response to requests.

Provides fine-tuning examples using LoRA and QLoRA to accommodate various training needs.
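As a rough illustration of the tool use and grounded generation features listed above, the sketch below builds the two inputs a Hugging Face chat template can accept: a JSON-schema style tool definition and a list of documents. The tool name `get_weather`, its parameters, the document keys (`title`, `text`), the sample document, and the helper `build_prompt` are all assumptions made for illustration, and `ai21labs/AI21-Jamba-1.5-Mini` is assumed to be the model's repository name. Only `build_prompt` needs the `transformers` library, and it is not called here.

```python
import json

# Hypothetical tool definition in the JSON-schema style used by
# Hugging Face chat templates; the name and fields are illustrative.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Return the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name"},
            },
            "required": ["city"],
        },
    },
}

# Made-up document for grounded generation; the key names are an assumption.
documents = [
    {"title": "Leave policy", "text": "Employees accrue 1.5 days of leave per month."},
]

messages = [{"role": "user", "content": "How much leave do I accrue each month?"}]


def build_prompt(model_id="ai21labs/AI21-Jamba-1.5-Mini"):
    """Render the chat prompt as text; downloads the tokenizer when called."""
    from transformers import AutoTokenizer  # imported lazily

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    return tokenizer.apply_chat_template(
        messages,
        tools=[weather_tool],
        documents=documents,
        tokenize=False,
        add_generation_prompt=True,
    )


# Both inputs are plain JSON-serializable data.
payload = json.dumps({"tools": [weather_tool], "documents": documents})
```

Keeping the tool and document definitions as plain dictionaries means they can be validated, logged, or stored as JSON before any model is loaded.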

How to Use

Install the necessary dependencies, such as mamba-ssm and causal-conv1d.

Load and run the model using the vLLM or transformers libraries.

Adjust model parameters as needed, such as temperature, top_p, etc., to control the diversity and relevance of the generated text.

Use the model's tool use feature to supply custom tools that extend the model's capabilities.

Prepare the input data, which can be plain text, JSON, or another structured format.

Pass the input data to the model to obtain the generated output.

Perform post-processing on the output results, such as parsing JSON and extracting specific information.
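The steps above can be sketched roughly as follows, assuming the vLLM route. The repository name `ai21labs/AI21-Jamba-1.5-Mini`, the sampling values, the `max_model_len` cap, and the helpers `generate` and `extract_json` are assumptions for illustration, not settings recommended by AI21 Labs. The `generate` function needs a GPU and the `vllm` package, so the example exercises only the post-processing step, using a made-up output string.

```python
import json

MODEL_ID = "ai21labs/AI21-Jamba-1.5-Mini"  # assumed Hugging Face repo name

# Illustrative sampling settings: lower temperature and top_p
# make the output more focused, higher values more diverse.
SAMPLING = {"temperature": 0.4, "top_p": 0.95, "max_tokens": 256}


def generate(chat_messages):
    """Run one chat request through vLLM and return the generated text."""
    from vllm import LLM, SamplingParams  # imported lazily

    # max_model_len is a hypothetical cap chosen to limit memory use.
    llm = LLM(model=MODEL_ID, max_model_len=8192)
    outputs = llm.chat(chat_messages, sampling_params=SamplingParams(**SAMPLING))
    return outputs[0].outputs[0].text


def extract_json(text):
    """Parse the JSON object starting at the first '{', or return None."""
    start = text.find("{")
    if start == -1:
        return None
    try:
        obj, _ = json.JSONDecoder().raw_decode(text[start:])
    except json.JSONDecodeError:
        return None
    return obj


messages = [
    {"role": "system", "content": "Reply with a JSON object only."},
    {"role": "user", "content": "Is Hebrew a supported language?"},
]

# Made-up stand-in for generate(messages), so parsing can be tried without a GPU.
sample_output = 'Sure: {"language": "Hebrew", "supported": true} Done.'
result = extract_json(sample_output)
```

Returning `None` instead of raising lets the caller decide whether to retry the request or fall back to treating the output as plain text.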
