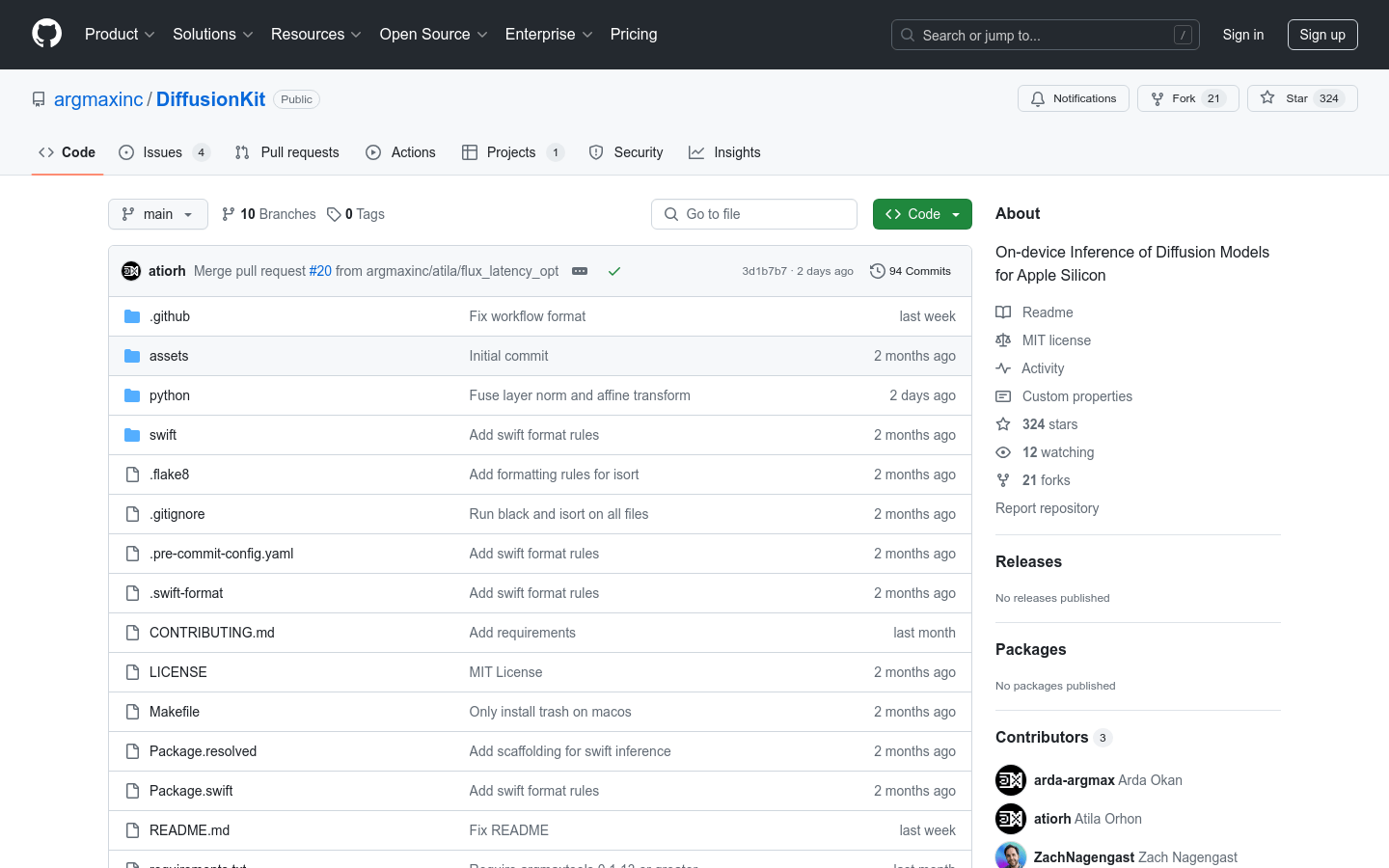

DiffusionKit

Overview

DiffusionKit is an open-source project for running diffusion models locally on Apple silicon devices. It converts PyTorch models to Core ML format for on-device inference and uses MLX for image generation. The project supports Stable Diffusion 3 and FLUX models and handles both image generation and image-to-image transformation.

Target Users

DiffusionKit is aimed primarily at developers and researchers who need efficient image generation on Apple silicon devices. These users typically need to deploy deep learning models and run inference with them, and they want the efficiency and response time that local processing provides.

Use Cases

Use DiffusionKit to generate artwork with specific styles or content.

Transform existing images into different styles or scenes using the image-to-image functionality.

Utilize DiffusionKit for rapid prototyping and testing of models in research projects.
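For the image-to-image use case, diffusion pipelines commonly add noise to the input image and then run only the later part of the denoising schedule, so that a lower strength keeps the result closer to the original. The sketch below illustrates that generic idea only. The function name `img2img_steps` and the `denoise` parameter are hypothetical names chosen for illustration, and this is not taken from DiffusionKit's implementation.

```python
def img2img_steps(num_steps: int, denoise: float) -> range:
    """Return the sampler step indices that run for a given denoise strength.

    denoise=1.0 runs every step (the input image is fully replaced by noise);
    smaller values skip the early steps and stay closer to the input image.
    """
    if num_steps <= 0:
        raise ValueError("num_steps must be positive")
    if not 0.0 < denoise <= 1.0:
        raise ValueError("denoise must be in (0, 1]")
    # Always run at least one step so the image is actually processed.
    steps_to_run = max(1, round(num_steps * denoise))
    return range(num_steps - steps_to_run, num_steps)


if __name__ == "__main__":
    for strength in (1.0, 0.5, 0.2):
        steps = img2img_steps(50, strength)
        print(f"denoise={strength}: runs {len(steps)} of 50 steps")
```

Under this assumption, a strength near 1.0 behaves like ordinary generation, while a small strength makes only light changes to the source image.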

Features

Convert PyTorch models to Core ML format for efficient inference on Apple silicon devices.

Use MLX for image generation, supporting various parameter adjustments to control the generation process.

Support for Stable Diffusion 3 and FLUX models, offering diverse image generation options.

Generate images using Python CLI or Swift code for flexible usage.

Support for using seeds to ensure result reproducibility.

Local checkpoint usage is supported, allowing users to load custom models.
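Seed-based reproducibility works because a diffusion run starts from random noise: fixing the seed fixes that starting noise. The sketch below shows the idea using only Python's standard library. The helper `initial_noise`, the 8x spatial downscaling, and the 16 latent channels are assumptions made for illustration and do not reflect how DiffusionKit samples noise internally.

```python
import random


def initial_noise(seed: int, height: int, width: int, channels: int = 16) -> list[float]:
    """Draw Gaussian noise for a latent of shape (channels, height // 8, width // 8)."""
    if height % 8 or width % 8:
        raise ValueError("height and width must be multiples of 8")
    # A private generator keeps the result independent of global random state.
    rng = random.Random(seed)
    count = channels * (height // 8) * (width // 8)
    return [rng.gauss(0.0, 1.0) for _ in range(count)]


if __name__ == "__main__":
    a = initial_noise(seed=0, height=64, width=64)
    b = initial_noise(seed=0, height=64, width=64)
    c = initial_noise(seed=1, height=64, width=64)
    print("same seed, same noise:", a == b)
    print("different seed, same noise:", a == c)
```

With identical starting noise, prompt, and generation settings, a deterministic sampler should produce the same image on each run.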

How to Use

1. Install the necessary Python environment and dependencies.

2. Use pip to install DiffusionKit as per the project documentation.

3. For Stable Diffusion 3, accept the model's usage terms on Hugging Face Hub before downloading the checkpoint.

4. Convert the PyTorch model to Core ML format.

5. Use the provided CLI tools or write Python/Swift code for image generation.

6. Adjust generation parameters such as seed and resolution as needed.

7. Specify an output path to save the generated images locally.
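The generation steps above can be scripted by assembling a CLI invocation from Python. This is a minimal sketch: the executable name `diffusionkit-cli` and the flags `--prompt`, `--output-path`, `--seed`, `--height`, `--width`, and `--steps` are assumptions based on the project README and should be checked against the current project documentation. The helpers `build_command` and `generate` are hypothetical names used only here.

```python
import shlex
import subprocess


def build_command(prompt, output_path, seed=None, height=None, width=None, steps=None):
    """Assemble an argument list for the (assumed) diffusionkit-cli tool."""
    cmd = ["diffusionkit-cli", "--prompt", prompt, "--output-path", output_path]
    optional = {"--seed": seed, "--height": height, "--width": width, "--steps": steps}
    for flag, value in optional.items():
        if value is not None:  # only pass flags the caller actually set
            cmd += [flag, str(value)]
    return cmd


def generate(**kwargs):
    """Run the command; requires an Apple silicon Mac with DiffusionKit installed."""
    subprocess.run(build_command(**kwargs), check=True)


if __name__ == "__main__":
    cmd = build_command("a photo of a cat", "cat.png", seed=0, height=512, width=512)
    # Print the command instead of running it, so the sketch is safe anywhere.
    print(shlex.join(cmd))
```

Passing the arguments as a list rather than a single shell string avoids quoting problems when the prompt contains spaces or punctuation.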
