llama3-s

Overview

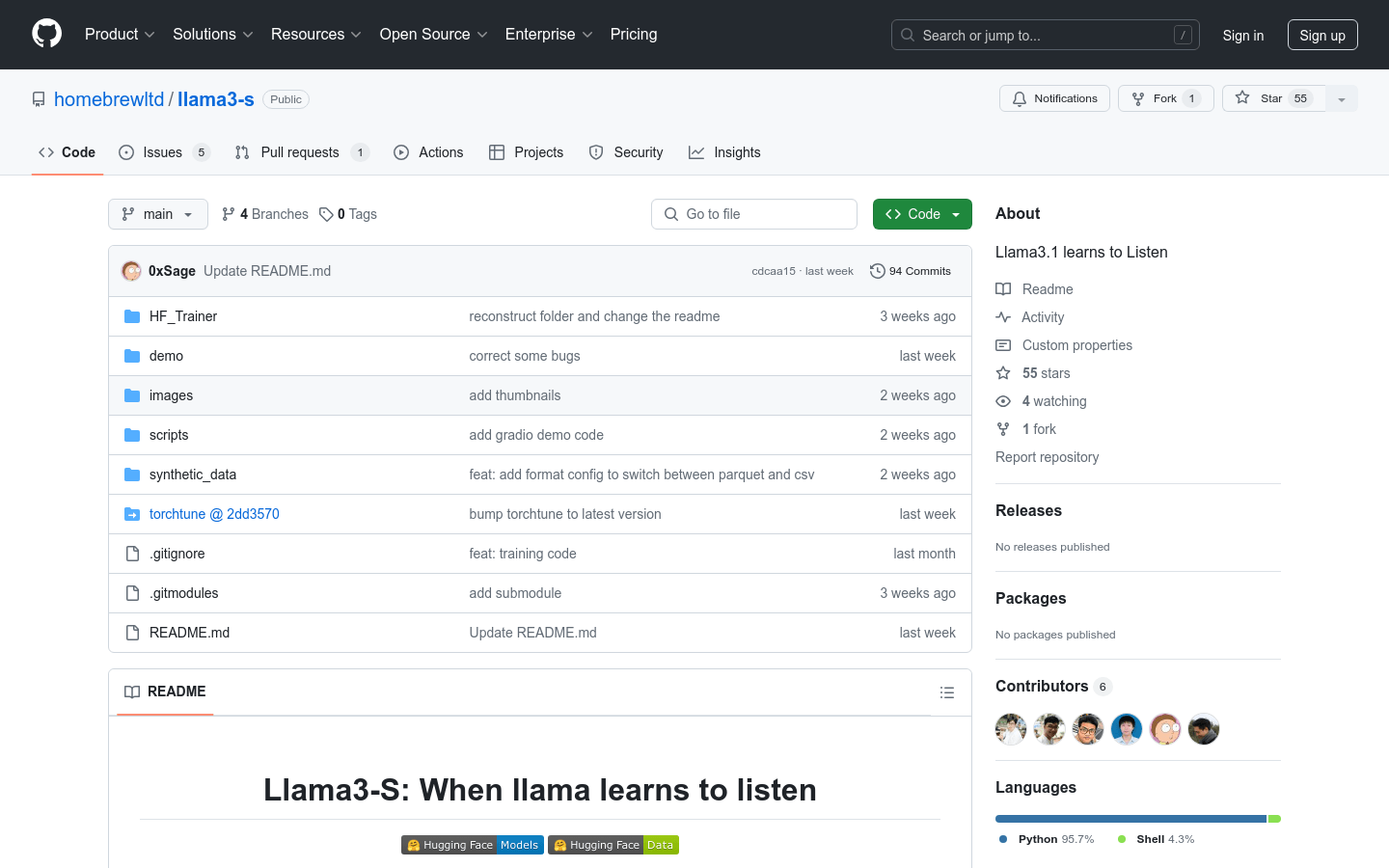

llama3-s is an open, ongoing research experiment that aims to give a text-based large language model (LLM) native 'hearing' ability. It is inspired by Meta's Chameleon paper and follows its early-fusion, token-based approach: audio is converted into discrete tokens that are added to the LLM's vocabulary, so speech and text share a single token stream. The same approach could later be extended to other input types. Because this is an open-source scientific experiment, both the codebase and the datasets are publicly available.
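The following minimal, plain-Python sketch shows what adding audio tokens to an LLM vocabulary means. It assumes an audio tokenizer has already quantized the audio into integer codes. The token spellings (`<|sound_start|>`, `<|sound_end|>`, `<|sound_XXXX|>`), the codebook size of 512, and the helper names `extend_vocab` and `audio_codes_to_ids` are hypothetical illustrations and do not come from llama3-s's actual implementation.

```python
from __future__ import annotations

# Hypothetical token spellings, chosen for illustration only.
SOUND_START = "<|sound_start|>"
SOUND_END = "<|sound_end|>"


def audio_token(code: int) -> str:
    """Spell one quantized audio code as a vocabulary token (hypothetical format)."""
    return f"<|sound_{code:04d}|>"


def extend_vocab(vocab: dict[str, int], num_audio_codes: int) -> dict[str, int]:
    """Return a copy of `vocab` with audio marker tokens and one token per audio code appended."""
    extended = dict(vocab)  # leave the original text vocabulary untouched
    next_id = max(extended.values(), default=-1) + 1
    new_tokens = [SOUND_START, SOUND_END] + [audio_token(i) for i in range(num_audio_codes)]
    for tok in new_tokens:
        if tok not in extended:  # skip tokens that already exist
            extended[tok] = next_id
            next_id += 1
    return extended


def audio_codes_to_ids(codes: list[int], vocab: dict[str, int]) -> list[int]:
    """Map quantized audio codes to token ids, wrapped in start/end marker tokens."""
    ids = [vocab[SOUND_START]]
    ids.extend(vocab[audio_token(c)] for c in codes)
    ids.append(vocab[SOUND_END])
    return ids


# Toy text vocabulary extended with a hypothetical 512-entry audio codebook.
text_vocab = {"hello": 0, "world": 1, "!": 2}
vocab = extend_vocab(text_vocab, num_audio_codes=512)
print(len(vocab))                                 # 517
print(audio_codes_to_ids([7, 0, 511], vocab))     # [3, 12, 5, 516, 4]
```

A real model also needs new embedding rows for the added tokens. With Hugging Face `transformers`, this is typically done by calling `tokenizer.add_tokens(...)` followed by `model.resize_token_embeddings(len(tokenizer))`.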

Target Users

The target audience is researchers and developers, particularly those working in natural language processing and machine learning. llama3-s gives them an experimental platform for exploring and extending what language models can do, and it encourages communication and collaboration within the open-source community.

Use Cases

Researchers use llama3-s to study how well a language model can understand voice commands spoken with different accents.

Developers use llama3-s to train and fine-tune multimodal models.

Educational institutions use llama3-s as a teaching case that shows students how language models are trained and used.

Features

Training audio comes from a synthetic data generator, so for now the model mainly understands a female, Australian-accented voice.

Currently handles only inputs that contain a single spoken instruction.

Supports training with either HF Trainer or Torchtune.

Offers fully fine-tuned models and initialized models.

Supports multi-GPU training (1-8 GPUs).

Provides Google Colab notebooks for quick start.

A synthetic data generation guide describes the audio generation process in detail.
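Because training can run on anywhere from 1 to 8 GPUs, the global batch size changes whenever the GPU count does. The following sketch assumes plain data parallelism, where each GPU holds a full model replica and no tensor or pipeline parallelism is used. Under that assumption, each optimizer step sees per-device batch × gradient-accumulation steps × number of GPUs samples. The function names and numbers are illustrative and are not llama3-s defaults.

```python
# Minimal sketch of global-batch arithmetic under plain data parallelism.
# Assumption: each GPU processes its own micro-batches on a full model replica.


def effective_batch_size(per_device_batch: int, grad_accum_steps: int, num_gpus: int) -> int:
    """Samples contributing to each optimizer step across all GPUs."""
    return per_device_batch * grad_accum_steps * num_gpus


def grad_accum_for_target(target_batch: int, per_device_batch: int, num_gpus: int) -> int:
    """Accumulation steps that keep the global batch at `target_batch` for a given GPU count."""
    samples_per_micro_step = per_device_batch * num_gpus
    if target_batch % samples_per_micro_step != 0:
        raise ValueError(
            f"target batch {target_batch} is not a multiple of {samples_per_micro_step}"
        )
    return target_batch // samples_per_micro_step


# Keep a global batch of 128 (illustrative) while moving between 1 and 8 GPUs.
for gpus in (1, 2, 4, 8):
    steps = grad_accum_for_target(128, per_device_batch=4, num_gpus=gpus)
    print(gpus, steps, effective_batch_size(4, steps, gpus))
# prints: "1 32 128", "2 16 128", "4 8 128", "8 4 128" on separate lines
```

In HF Trainer, these three factors correspond to `per_device_train_batch_size`, `gradient_accumulation_steps`, and the number of launched processes. Holding their product fixed keeps results more comparable across GPU counts.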

How to Use

Clone the GitHub repository to obtain the llama3-s project code.

Organize input/output directories and set up the folder structure as per the documentation.

Install dependencies for HF Trainer or Torchtune and configure the environment as needed.

Log in to Hugging Face and configure the training parameters.

Run the training script to initiate the model training process.

Monitor the training progress and performance, adjusting hyperparameters as necessary.

Use the Google Colab notebooks to get started quickly with experimentation and prototyping.
