DreamAI

Overview

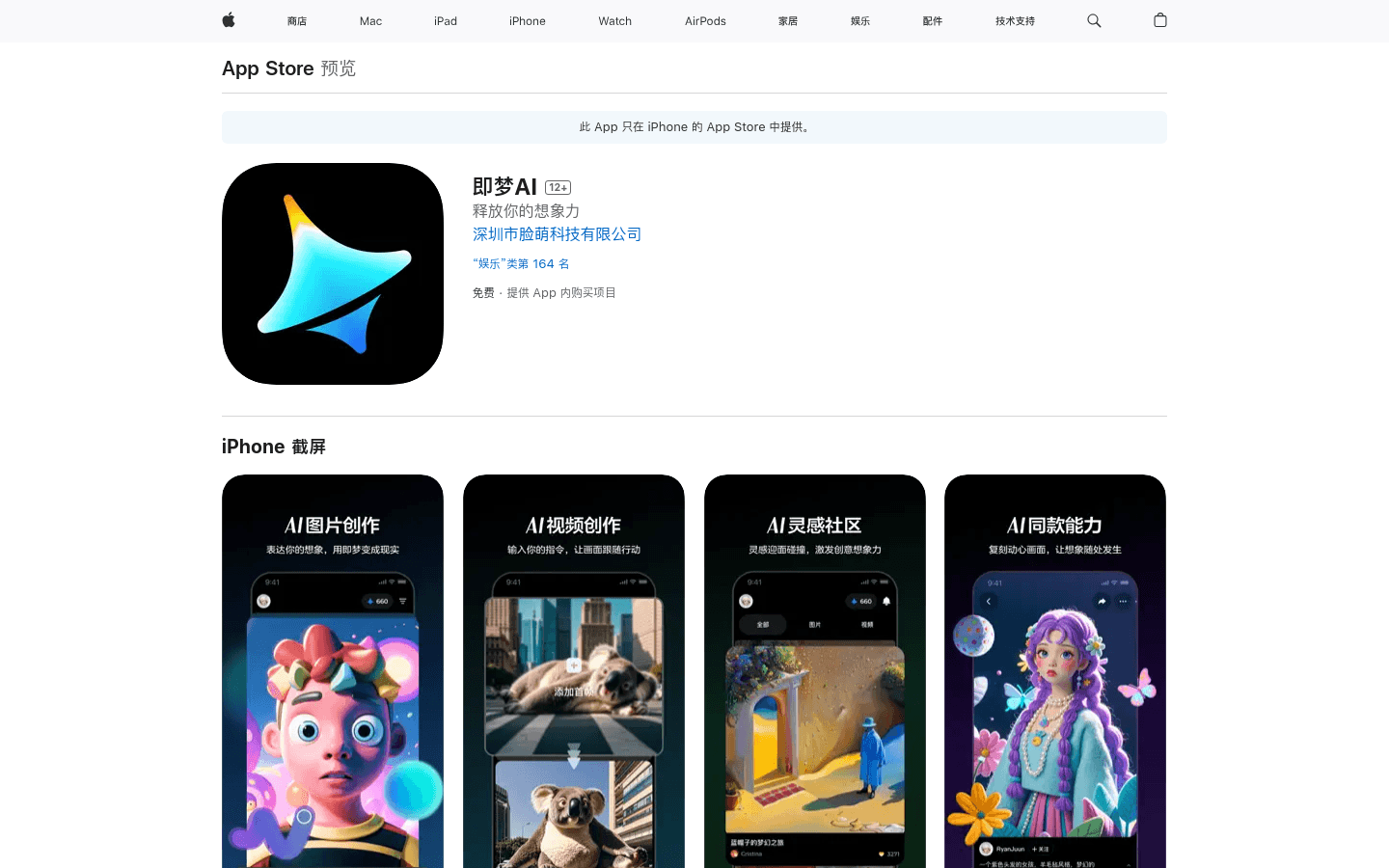

DreamAI is an AI-powered creative platform for creative enthusiasts. It generates original images and videos from natural language descriptions, and provides editing and sharing features so users can fully showcase their creativity. Developed by Shenzhen Lianmeng Technology Co., Ltd., DreamAI also offers a paid DreamAI Pro subscription with additional privileges.

Target Users

DreamAI suits creative enthusiasts and artists who want to quickly turn their ideas into visual works, whether for everyday entertainment or for exploring new technology.

Use Cases

Designers use DreamAI to create unique design elements.

Artists generate personalized artworks with DreamAI.

Everyday users share fun images and videos created with DreamAI on social media.

Features

AI Image Creation: Generate images based on users' natural language descriptions.

Video Creation: Describe an idea to generate a video, then refine it over multiple iterations.

Work Sharing: Share creations via links or save them locally.

Explore Creativity: Browse other users' works for inspiration.

Replicate Styles: Reuse other users' prompts to create works in a similar style.

Membership Privileges: Subscribe to DreamAI Pro for points and extended video features.

How to Use

1. Download and install the DreamAI app.

2. Register for an account and log in.

3. Choose the AI image creation or video creation feature.

4. Describe the desired image or video content using natural language.

5. Edit the generated results until satisfied.

6. Share the final work with friends or save it locally.

7. Browse works by other users, appreciate creativity, and provide feedback.

8. If needed, subscribe to DreamAI Pro for additional services.
