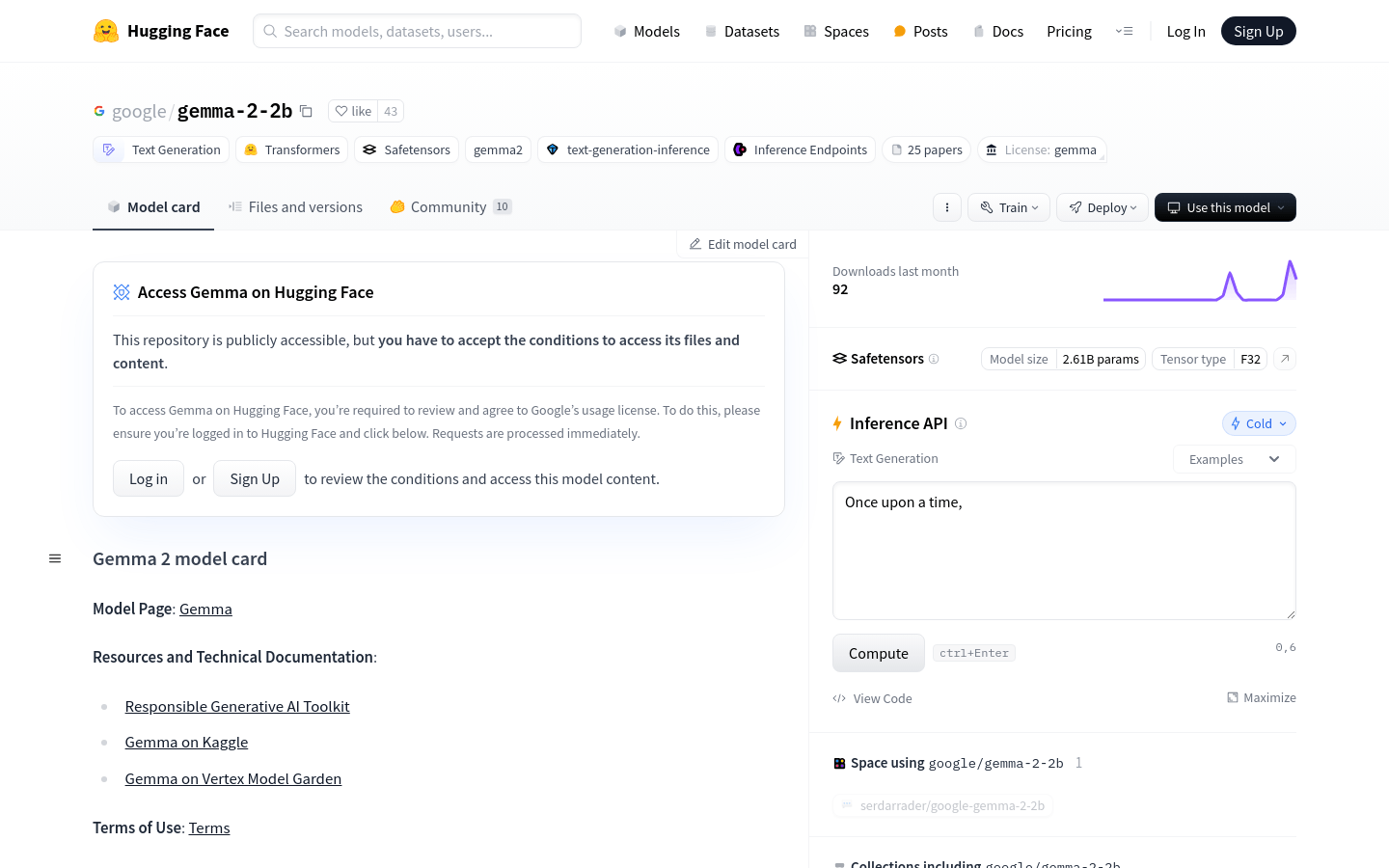

Gemma 2 2B

Overview

Gemma 2 2B is a lightweight, advanced text generation model from Google and part of the Gemma model family. Built from the same research and technology used to create the Gemini models, it is a text-to-text, decoder-only large language model available in English. It is well suited to text generation tasks such as question answering, summarization, and reasoning. Its small size lets it run in resource-constrained environments such as laptops or desktops, which broadens access to advanced AI models and fosters innovation.

Target Users

The Gemma 2 2B model is designed for developers and researchers who need to rapidly deploy text generation applications on local devices. It is particularly suitable for users with limited hardware resources, such as small businesses, independent developers, or academic researchers, who wish to leverage the latest AI technologies.

Use Cases

Developers create customized chatbots using the Gemma 2 2B model.

Researchers employ this model to automatically generate abstracts for scientific papers.

Content creators utilize the Gemma 2 2B model to assist in writing creative copy.

Features

Suitable for a variety of text generation tasks such as question answering, summarization, and reasoning.

Small model size, making it easier to deploy on resource-constrained devices.

Supports instruction tuning to optimize performance for specific tasks.

Supports 8-bit and 4-bit quantization to reduce memory use and run more efficiently on limited hardware.

Can be run quickly from the command line interface.

Supports torch.compile acceleration, which can significantly improve inference speed.
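The quantization feature above can be sketched with the Transformers `BitsAndBytesConfig` class. This is a minimal sketch, not an official recipe: it assumes the `transformers`, `accelerate`, and `bitsandbytes` packages are installed, a CUDA GPU is available, and you have access to the gated `google/gemma-2-2b` checkpoint on Hugging Face. The helper names `bnb_kwargs` and `load_quantized` are hypothetical.

```python
# Sketch: load Gemma 2 2B with 8-bit or 4-bit weights via bitsandbytes.
# Assumes `pip install -U transformers accelerate bitsandbytes`, a CUDA GPU,
# and access to the gated "google/gemma-2-2b" checkpoint.
# bnb_kwargs / load_quantized are hypothetical helper names.


def bnb_kwargs(bits: int) -> dict:
    """Return keyword arguments for transformers.BitsAndBytesConfig."""
    if bits == 8:
        return {"load_in_8bit": True}
    if bits == 4:
        return {"load_in_4bit": True}
    raise ValueError("bits must be 4 or 8")


def load_quantized(bits: int = 8, model_id: str = "google/gemma-2-2b"):
    # Heavy imports are deferred so bnb_kwargs() works without them installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    config = BitsAndBytesConfig(**bnb_kwargs(bits))
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=config,
        device_map="auto",  # let accelerate place the quantized weights
    )
    return tokenizer, model


if __name__ == "__main__":
    tokenizer, model = load_quantized(bits=4)
    inputs = tokenizer("Write me a poem about Machine Learning.", return_tensors="pt").to(model.device)
    outputs = model.generate(**inputs, max_new_tokens=32)
    print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```

4-bit loading roughly halves weight memory again compared with 8-bit, at some cost in output quality; which trade-off is acceptable depends on the task.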

How to Use

First, install the Hugging Face Transformers library.

Choose the appropriate execution method based on your needs, such as the pipeline API or CLI.

Specify the model in your code as 'google/gemma-2-2b'.

Prepare the input text and set generation parameters, such as the maximum number of new tokens.

Run the model to generate the output text.

Decode and post-process the output text.

Advanced users can apply quantization or Torch compile techniques to optimize performance.
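The steps above can be sketched with the Transformers pipeline API. This is a minimal, illustrative example under stated assumptions: `transformers`, `torch`, and `accelerate` are installed, and you have accepted the Gemma license on Hugging Face and logged in. The names `strip_prompt`, `_load_pipeline`, and `generate` are hypothetical helpers. Text-generation pipelines return the prompt together with the continuation by default, so `strip_prompt` stands in for the post-processing step.

```python
# Sketch of the "How to Use" steps with the Transformers pipeline API.
# Assumes `pip install -U transformers torch accelerate` and access to the
# gated "google/gemma-2-2b" checkpoint. Helper names are hypothetical.
from functools import lru_cache


def strip_prompt(prompt: str, generated: str) -> str:
    """Post-process: drop the echoed prompt that the pipeline returns by default."""
    if generated.startswith(prompt):
        return generated[len(prompt):].lstrip()
    return generated


@lru_cache(maxsize=1)
def _load_pipeline(model_id: str = "google/gemma-2-2b"):
    # Imported lazily so strip_prompt() can be used without torch/transformers.
    import torch
    from transformers import pipeline

    return pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.bfloat16,  # half the memory of float32
        device_map="auto",           # requires the accelerate package
    )


def generate(prompt: str, max_new_tokens: int = 64) -> str:
    pipe = _load_pipeline()  # cached, so weights load only once
    outputs = pipe(prompt, max_new_tokens=max_new_tokens)
    return strip_prompt(prompt, outputs[0]["generated_text"])


if __name__ == "__main__":
    print(generate("Once upon a time,"))
```

Caching the pipeline with `lru_cache` keeps repeated calls to `generate` from reloading the model weights each time.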
