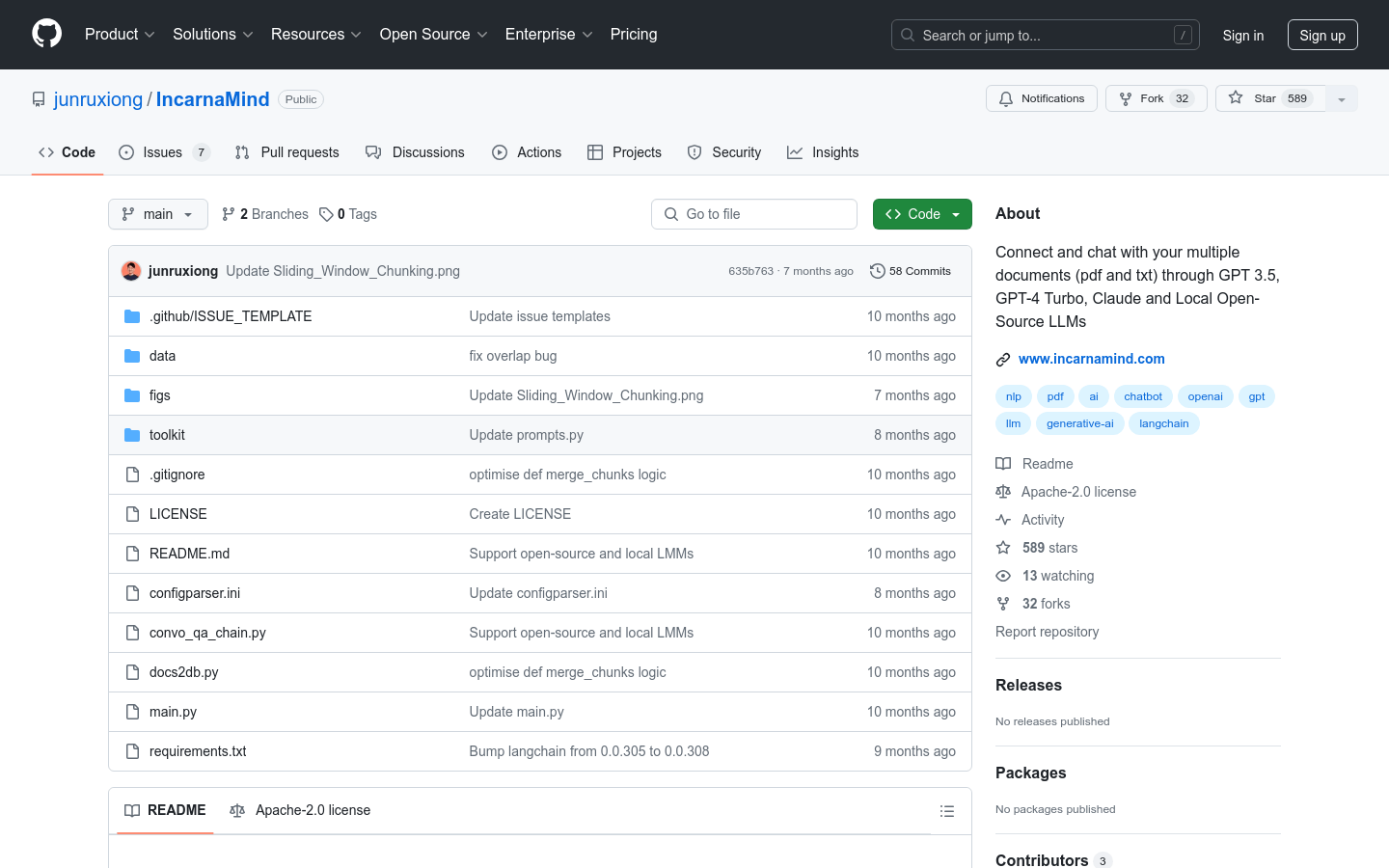

IncarnaMind

Overview

IncarnaMind is an open-source project for chatting with personal documents (PDF, TXT) through large language models (LLMs) such as GPT, Claude, and locally hosted open-source models. It combines a sliding window chunking mechanism with integrated retrievers to make queries more efficient and LLM answers more accurate. It supports conversational Q&A across multiple documents rather than one document at a time, and it works with several file formats and LLMs.
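To make the sliding window idea concrete, here is a minimal sketch of fixed-size overlapping chunking. It is an illustration only, not IncarnaMind's actual implementation: the function name `sliding_window_chunks` and the `window` and `stride` parameters are assumptions chosen for this example, and the project's own mechanism adjusts the window dynamically rather than using fixed values.

```python
from typing import List


def sliding_window_chunks(text: str, window: int = 200, stride: int = 100) -> List[str]:
    """Split text into overlapping chunks of `window` words, advancing `stride` words each step.

    Hypothetical helper for illustration; not part of IncarnaMind's API.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    words = text.split()
    chunks: List[str] = []
    for start in range(0, len(words), stride):
        chunks.append(" ".join(words[start:start + window]))
        if start + window >= len(words):
            break  # this window already reaches the end of the text
    return chunks
```

For instance, `sliding_window_chunks("a b c d e f", window=4, stride=2)` yields two chunks, `"a b c d"` and `"c d e f"`. The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, which is the usual motivation for a stride smaller than the window.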

Target Users

The target audience includes researchers, developers, and anyone who needs to work with large volumes of documents. IncarnaMind's querying and conversational features help them find and use the information in those documents more efficiently.

Use Cases

Researchers can use IncarnaMind to converse with their research papers, quickly obtaining the information they need.

Developers can interact with technical documentation through IncarnaMind to resolve programming issues.

Enterprise users can utilize IncarnaMind for knowledge management, enhancing team collaboration efficiency.

Features

Adaptive Chunking: Dynamically adjusts the size and position of the chunking window, balancing fine-grained and coarse-grained data access.

Multi-Document Conversational Q&A: Supports simple and multi-hop queries across multiple documents.

File Compatibility: Supports PDF and TXT file formats.

LLM Model Compatibility: Compatible with OpenAI GPT, Anthropic Claude, Llama2, and other open-source LLMs.

Open-Source and Local LLM Support: Recommends the llama2-70b-chat model and supports experimenting with other LLMs.

System Requirements: Running the quantized GGUF version of the recommended local model requires more than 35GB of GPU RAM.

Upcoming Release: Planning to launch smaller, cost-effective fine-tuned models.
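The 35GB figure in the system requirements is consistent with a back-of-the-envelope estimate for a 70-billion-parameter model quantized to roughly 4 bits per weight. The sketch below shows that arithmetic. The parameter count and bit width are assumptions made for illustration; real GGUF quantization levels vary, and inference needs extra memory beyond the weights (for example, the KV cache), which is why the requirement reads "more than" 35GB.

```python
def weights_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Assumed values: 70 billion parameters, about 4 bits per weight.
estimate = weights_size_gb(70e9, 4)
print(f"{estimate:.1f} GB")  # prints "35.0 GB"
```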

How to Use

1. Installation: Clone the repository and set up the Python environment.

2. Create Environment: Use Conda to create and activate a virtual environment.

3. Install Dependencies: Install all the required dependencies.

4. Set API Key: Configure the API key in the configparser.ini file.

5. Upload Files: Place files in the /data directory and run the command to process them.

6. Run: Start the conversation and wait for the script to prompt for input.

7. Chat: Interact with the system by asking questions and receiving answers.

8. Log Management: The system automatically generates an IncarnaMind.log file, which can be edited as needed.
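As an illustration of step 4, the following sketch reads an API key from INI-formatted text using Python's standard `configparser` module. The section name `tokens` and the option name `OPENAI_API_KEY` are assumptions made for this example; check the project's own configparser.ini for the names it actually uses.

```python
import configparser

# Hypothetical file contents; the real configparser.ini may use
# different section and option names.
SAMPLE_INI = """
[tokens]
OPENAI_API_KEY = sk-your-key-here
"""


def load_api_key(ini_text: str, section: str = "tokens",
                 option: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored under [section] option, or raise if it is missing."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    # fallback="" avoids an exception when the section or option is absent.
    key = parser.get(section, option, fallback="").strip()
    if not key:
        raise ValueError(f"No value found for [{section}] {option}")
    return key


print(load_api_key(SAMPLE_INI))
```

To read from the file on disk instead of a string, replace `parser.read_string(ini_text)` with `parser.read("configparser.ini")`. Failing early on an empty key tends to give a clearer error than letting an API call fail later with an authentication message.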
