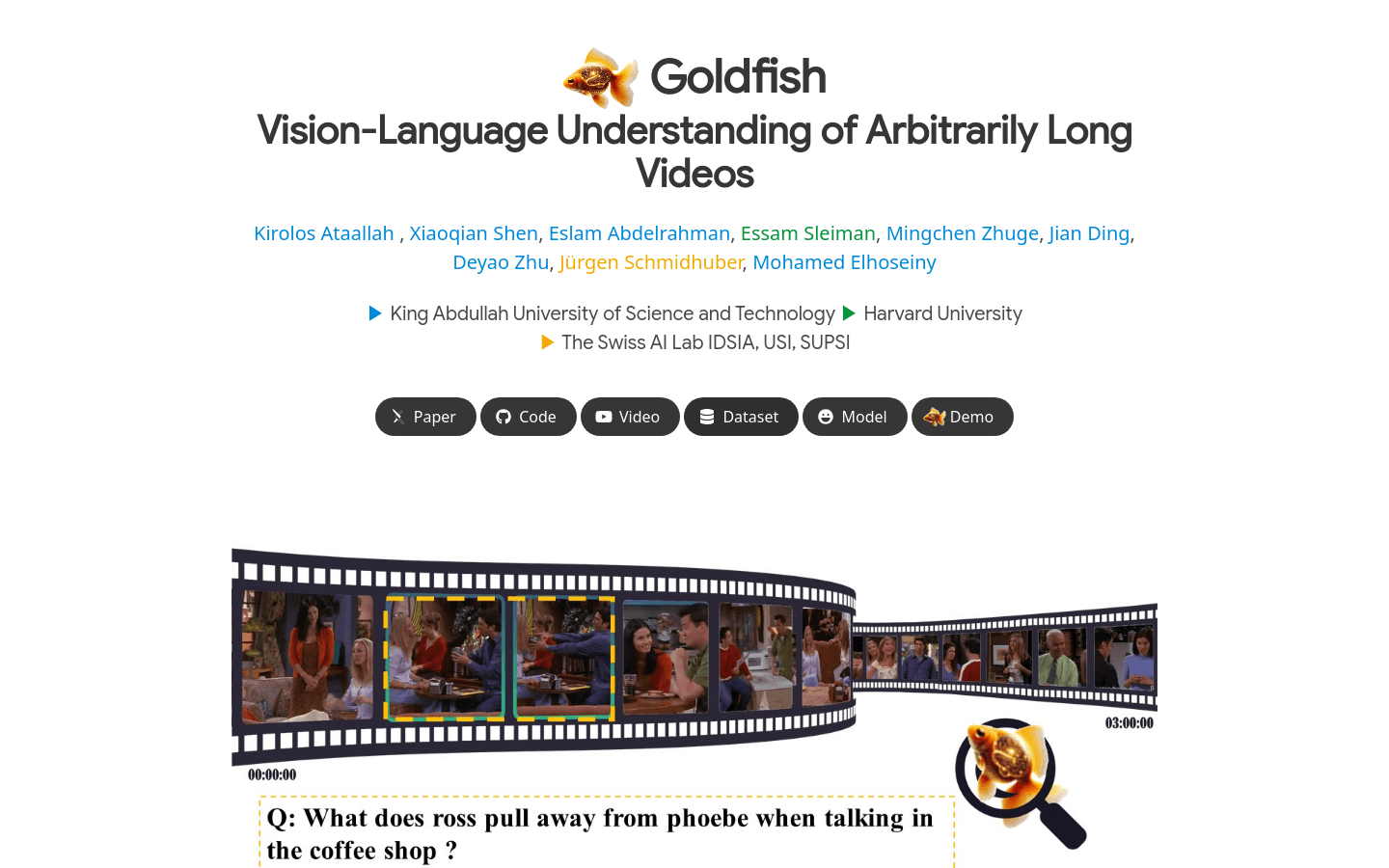

Goldfish

Overview

Goldfish is a methodology for understanding videos of arbitrary length. It first uses an efficient retrieval mechanism to collect the top-k video segments related to the instruction, then generates the response from those segments. This design lets Goldfish handle arbitrarily long video sequences, which makes it suitable for content such as movies or TV series. To support retrieval, MiniGPT4-Video was developed to generate detailed descriptions of the video segments. Goldfish reaches 41.78% accuracy on the TVQA-long long-video benchmark, exceeding previous methods by 14.94%. MiniGPT4-Video also performs well on short videos, exceeding the previous best methods by 3.23%, 2.03%, 16.5%, and 23.59% on the MSVD, MSRVTT, TGIF, and TVQA short-video benchmarks, respectively. These results indicate that Goldfish improves on prior methods in both long and short video understanding.
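The retrieval step described above can be illustrated with a minimal sketch. This is not Goldfish's actual implementation: the function names (`embed`, `cosine`, `top_k_segments`) are hypothetical, and a simple bag-of-words similarity stands in for whatever text embedding the real system uses. It only shows the general idea of ranking segment descriptions against an instruction and keeping the top k.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for a text embedding: a bag-of-words count vector.
    (Assumption: a real system would use a learned embedding model.)"""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_segments(instruction, descriptions, k=3):
    """Return the indices of the k segment descriptions most similar
    to the instruction, best match first (ties broken by index)."""
    query = embed(instruction)
    scored = [(cosine(query, embed(d)), i) for i, d in enumerate(descriptions)]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [i for _, i in scored[:k]]


# Hypothetical per-segment descriptions, as a captioning model might produce.
descriptions = [
    "Two friends argue about rent in the apartment kitchen.",
    "A detective examines a broken window at the crime scene.",
    "The friends make up and order pizza in the apartment.",
    "A car chase through the city at night.",
]
selected = top_k_segments(
    "What do the friends do in the apartment?", descriptions, k=2
)
print(selected)  # indices of the two best-matching segments
```

In a full pipeline, only the selected segments (rather than the whole video) would be passed to the answering model, which is what keeps the cost independent of total video length.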

Target Users

The Goldfish model is designed for researchers and developers who need to process and understand long video content, as well as practitioners such as filmmakers, TV editors, and video content analysts. With Goldfish, they can analyze and understand video content more efficiently, which speeds up video content creation and analysis.

Use Cases

Filmmakers use the Goldfish model to analyze film clips and extract key plot points.

TV editors use the Goldfish model to follow storyline progression and optimize their edits.

Video content analysts use the Goldfish model to review video content for compliance.

Features

Efficient retrieval mechanism: Processes long videos by collecting the top k video segments related to the instruction.

MiniGPT4-Video: Generates detailed descriptions for video segments, facilitating the retrieval process.

Long video benchmark: Achieves an accuracy of 41.78% on the TVQA-long benchmark.

Short video benchmark: Exceeds the previous best methods by 3.23%, 2.03%, 16.5%, and 23.59% on the MSVD, MSRVTT, TGIF, and TVQA short video benchmarks, respectively.

Video description generation: Uses EVA-CLIP to obtain visual tokens, which are then converted into the language model space.

Subtitle and video frame combination: Improves model performance by combining video frames with aligned subtitles.

Adaptability: Can handle long video sequences such as movies or TV series.
