Crawlee

Overview

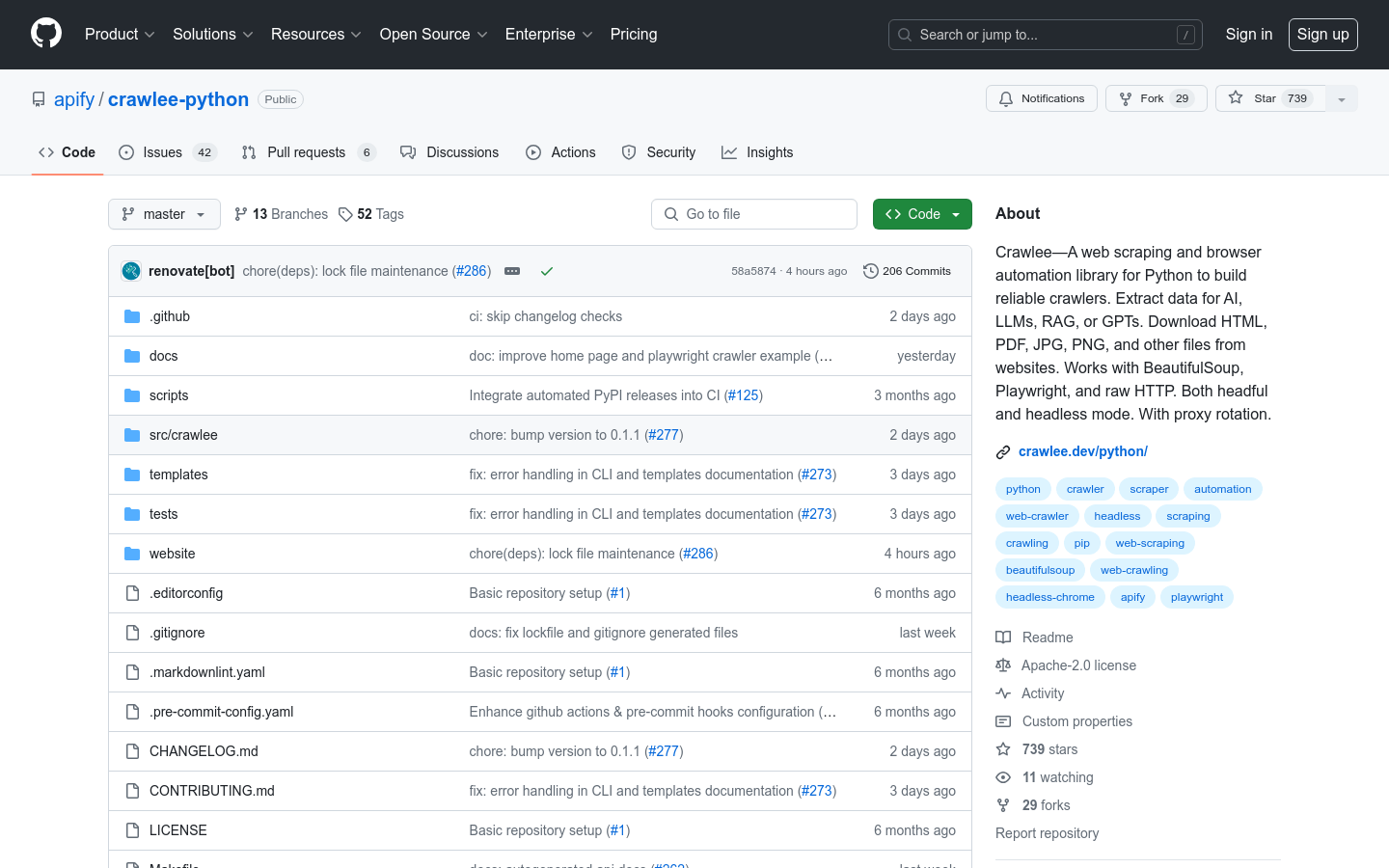

Crawlee is a Python library for building reliable web crawlers, for example to collect data for AI, LLM, RAG, or GPT applications. It provides a unified interface for both HTTP and headless browser crawling, scales parallelism automatically based on available system resources, and exposes a clean API built on Python's standard asyncio. Unlike Scrapy, Crawlee supports headless browser crawling natively. Its code includes type hints, which improves editor support and helps catch errors early. Other features include automatic retries, integrated proxy rotation and session management, configurable request routing, a persistent URL queue, and pluggable storage options.

Target Users

Crawlee is aimed at developers who build data scraping and web automation tools. It handles both static HTML pages and dynamic websites that rely on client-side JavaScript to generate content. Its ease of use and flexibility make it a good fit for data scientists, machine learning engineers, and web developers.

Use Cases

Extract data from static HTML pages with BeautifulSoupCrawler.

Scrape JavaScript-heavy websites with PlaywrightCrawler.

Scaffold and configure new crawler projects with the Crawlee CLI.
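To make the JavaScript-heavy use case concrete, here is a minimal sketch of a PlaywrightCrawler handler. It assumes a recent Crawlee release that exposes the `crawlee.crawlers` module (older releases used a different import path) and that the Playwright extra and browsers are installed. The `summarize` helper, the `h2` selector, and the start URL are illustrative choices, not part of Crawlee.

```python
import asyncio
from typing import Any, Dict, List


def summarize(url: str, title: str, headings: List[str]) -> Dict[str, Any]:
    """Hypothetical helper: tidy the scraped values into one record."""
    cleaned = [h.strip() for h in headings if h.strip()]
    return {"url": url, "title": title.strip(), "headings": cleaned}


async def main() -> None:
    # Imported lazily so the helper above works without Crawlee installed.
    # Assumption: a release that provides `crawlee.crawlers`.
    from crawlee.crawlers import PlaywrightCrawler, PlaywrightCrawlingContext

    # Keep the run small; both limits are arbitrary values for this sketch.
    crawler = PlaywrightCrawler(headless=True, max_requests_per_crawl=5)

    @crawler.router.default_handler
    async def handle_page(context: PlaywrightCrawlingContext) -> None:
        # context.page is a Playwright Page, so the DOM reflects executed JavaScript.
        title = await context.page.title()
        headings = await context.page.locator("h2").all_inner_texts()
        await context.push_data(summarize(context.request.url, title, headings))

    # Placeholder start URL; replace with the site you want to crawl.
    await crawler.run(["https://crawlee.dev"])


# To run the crawl: asyncio.run(main())
```

The handler has the same shape as one written for BeautifulSoupCrawler; only the context object differs (a live browser page instead of parsed HTML).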

Features

Unified HTTP and headless browser crawling interface

Automatic parallel crawling scaled to available system resources

Python type hints for a better development experience

Automatic error retries and anti-blocking functionality

Integrated proxy rotation and session management

Configurable request routing and persistent URL queues

Support for various data and file storage methods

Robust error handling mechanisms
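As a rough illustration of what automatic retries combined with proxy rotation mean in practice, the following standard-library sketch retries a failed fetch through the next proxy in a round-robin list. It is not Crawlee's implementation; `ProxyRotator`, `fetch_with_retries`, and the proxy URLs are hypothetical names made up for this example, and the `fetch` callable stands in for a real HTTP client.

```python
import itertools
import time
from typing import Callable, Iterable, Optional


class ProxyRotator:
    """Hands out proxies in round-robin order (a simple stand-in for rotation)."""

    def __init__(self, proxies: Iterable[str]) -> None:
        proxy_list = list(proxies)
        if not proxy_list:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxy_list)

    def next_proxy(self) -> str:
        return next(self._cycle)


def fetch_with_retries(
    url: str,
    fetch: Callable[[str, str], str],
    rotator: ProxyRotator,
    max_retries: int = 3,
    base_delay: float = 0.5,
) -> str:
    """Call fetch(url, proxy); on failure, switch proxy and back off exponentially."""
    last_error: Optional[Exception] = None
    for attempt in range(max_retries + 1):
        proxy = rotator.next_proxy()
        try:
            return fetch(url, proxy)
        except Exception as error:  # a real crawler would catch narrower errors
            last_error = error
            if attempt < max_retries:
                time.sleep(base_delay * (2 ** attempt))
    raise RuntimeError(f"{url} failed after {max_retries + 1} attempts") from last_error


def flaky_fetch(url: str, proxy: str) -> str:
    """Fake fetcher for the demo: the first proxy is 'blocked', the second works."""
    if proxy == "http://proxy-a:8000":
        raise ConnectionError("blocked")
    return f"fetched {url} via {proxy}"


rotator = ProxyRotator(["http://proxy-a:8000", "http://proxy-b:8000"])
result = fetch_with_retries("https://example.com", flaky_fetch, rotator, base_delay=0)
```

With a library like Crawlee this logic is configured rather than hand-written, which is the point of the feature list above.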

How to Use

Install Crawlee: pip install crawlee

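Install optional extras as needed, for example pip install 'crawlee[beautifulsoup]' or pip install 'crawlee[playwright]'; the Playwright extra also requires running playwright install to download the browsers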

Create a new crawler project using the Crawlee CLI: pipx run crawlee create my-crawler

Choose a template and configure it according to your project's requirements

Write your crawler logic, including data extraction and link crawling

Run the crawler and observe the results
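The "write your crawler logic" step might look roughly like the sketch below, which uses BeautifulSoupCrawler for data extraction and link crawling. It assumes a recent Crawlee release exposing `crawlee.crawlers` with the BeautifulSoup extra installed; the `build_record` helper, the request limit, and the start URL are illustrative assumptions rather than anything the project template prescribes.

```python
import asyncio
from typing import Any, Dict, Optional


def build_record(url: str, title: Optional[str]) -> Dict[str, Any]:
    """Hypothetical helper: shape one scraped page into a plain dict."""
    return {"url": url, "title": title.strip() if title else None}


async def main() -> None:
    # Imported lazily so the helper above works without Crawlee installed.
    # Assumption: a release that provides `crawlee.crawlers`; older releases
    # used `crawlee.beautifulsoup_crawler` instead.
    from crawlee.crawlers import BeautifulSoupCrawler, BeautifulSoupCrawlingContext

    # Cap the number of requests so the sketch finishes quickly.
    crawler = BeautifulSoupCrawler(max_requests_per_crawl=10)

    @crawler.router.default_handler
    async def handle_page(context: BeautifulSoupCrawlingContext) -> None:
        title_tag = context.soup.title
        title = title_tag.get_text() if title_tag else None
        # Data extraction: store one record per page in the default dataset.
        await context.push_data(build_record(context.request.url, title))
        # Link crawling: add links found on this page to the request queue.
        await context.enqueue_links()

    # Placeholder start URL; replace with the site you want to crawl.
    await crawler.run(["https://crawlee.dev"])


# To run the crawl: asyncio.run(main())
```

Keeping the record-building step in a plain function makes the extraction logic easy to unit-test without starting a crawl.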

Featured AI Tools

Crawl4AI

Crawl4AI is a free, open-source web crawling tool that extracts information from web pages and makes it accessible to large language models (LLMs) and AI applications. It produces LLM-friendly output formats such as JSON, cleaned HTML, and Markdown, and supports crawling multiple URLs simultaneously.

x-crawl

x-crawl is an AI-assisted crawling library for Node.js. It supports crawling dynamic pages, static pages, API data, and file data, and offers automated page control, keyboard input, and event operations. It also provides device fingerprinting, asynchronous and synchronous operation, interval crawling, retry after failure, proxy rotation, priority queuing, and crawl logging. x-crawl exposes fully typed interfaces with generics, is released under the MIT license, and is suitable for developers and companies engaged in data crawling.
