JailbreakZoo

Overview

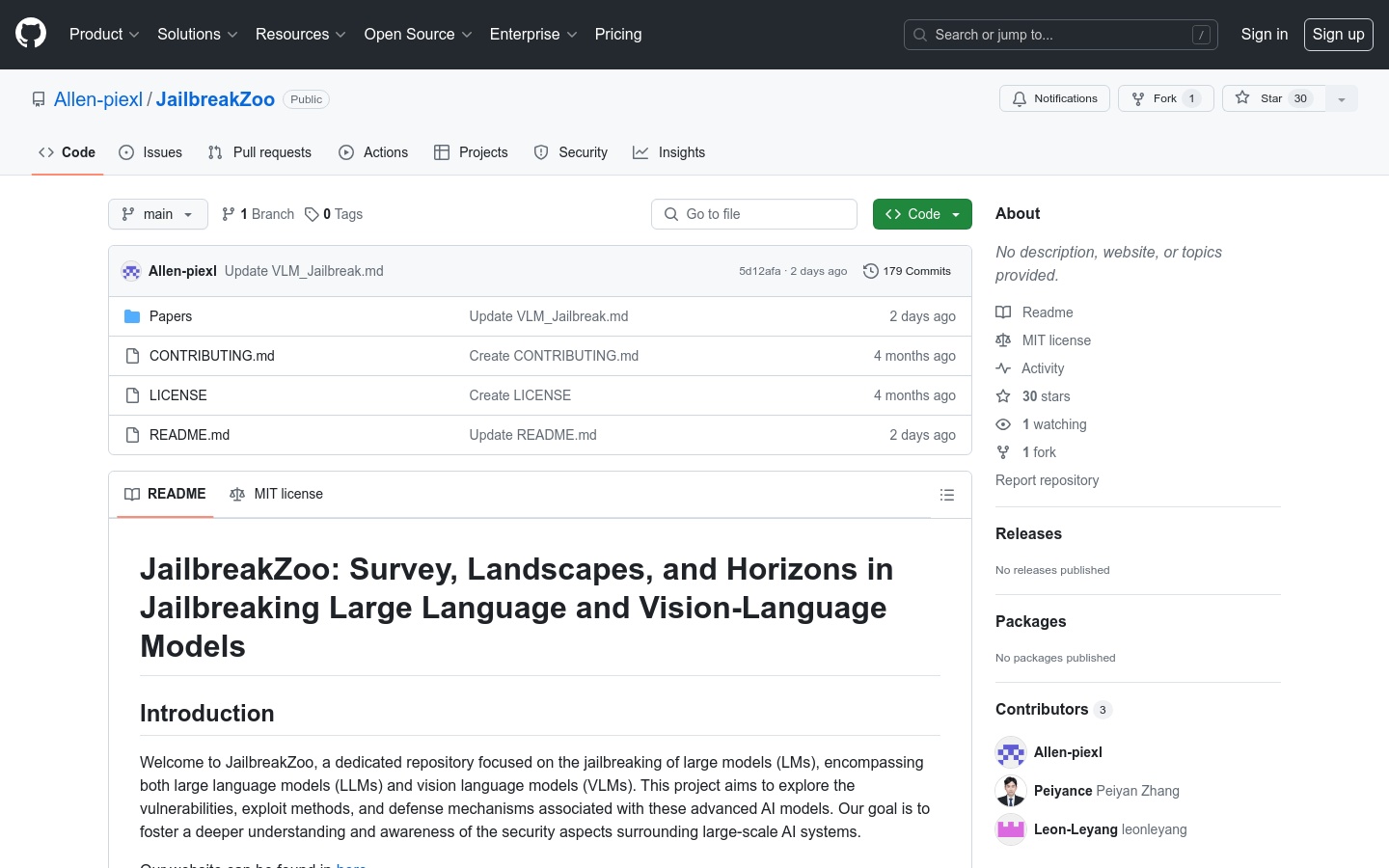

JailbreakZoo is a repository focused on jailbreaking large models, including large language models (LLMs) and vision-language models (VLMs). The project explores the vulnerabilities of these models, the methods used to exploit them, and the defense mechanisms proposed against such attacks, with the aim of deepening understanding and awareness of security in large-scale AI systems.

Target Users

JailbreakZoo targets AI security researchers, developers, and academics interested in the safety of large AI models. It gives these users a central resource for understanding and researching potential security issues in large AI models.

Use Cases

Researchers use JailbreakZoo to analyze vulnerabilities in the latest large language models

Developers leverage information from the repository to enhance the security of their products

Educators use JailbreakZoo to teach students about AI model security

Features

Systematically organizes large language model jailbreaking techniques by release date

Provides strategies and methods for defending large language models against jailbreak attacks

Introduces vulnerabilities and jailbreaking methods specific to vision-language models

Includes defense mechanisms for vision-language models, covering the latest advancements and strategies

Encourages community contributions, including adding new research, improving documentation, or sharing breaking and defense strategies

How to Use

Visit the JailbreakZoo GitHub page

Read the introduction section to understand the project's goals and background

Browse jailbreaking techniques and defense strategies, organized by publication date

Review specific model jailbreak cases or defense methods as needed

Follow the contribution guidelines to submit your own research findings or suggestions if desired

Adhere to the citation guidelines when referencing JailbreakZoo in research or publications
