RL4VLM

Overview

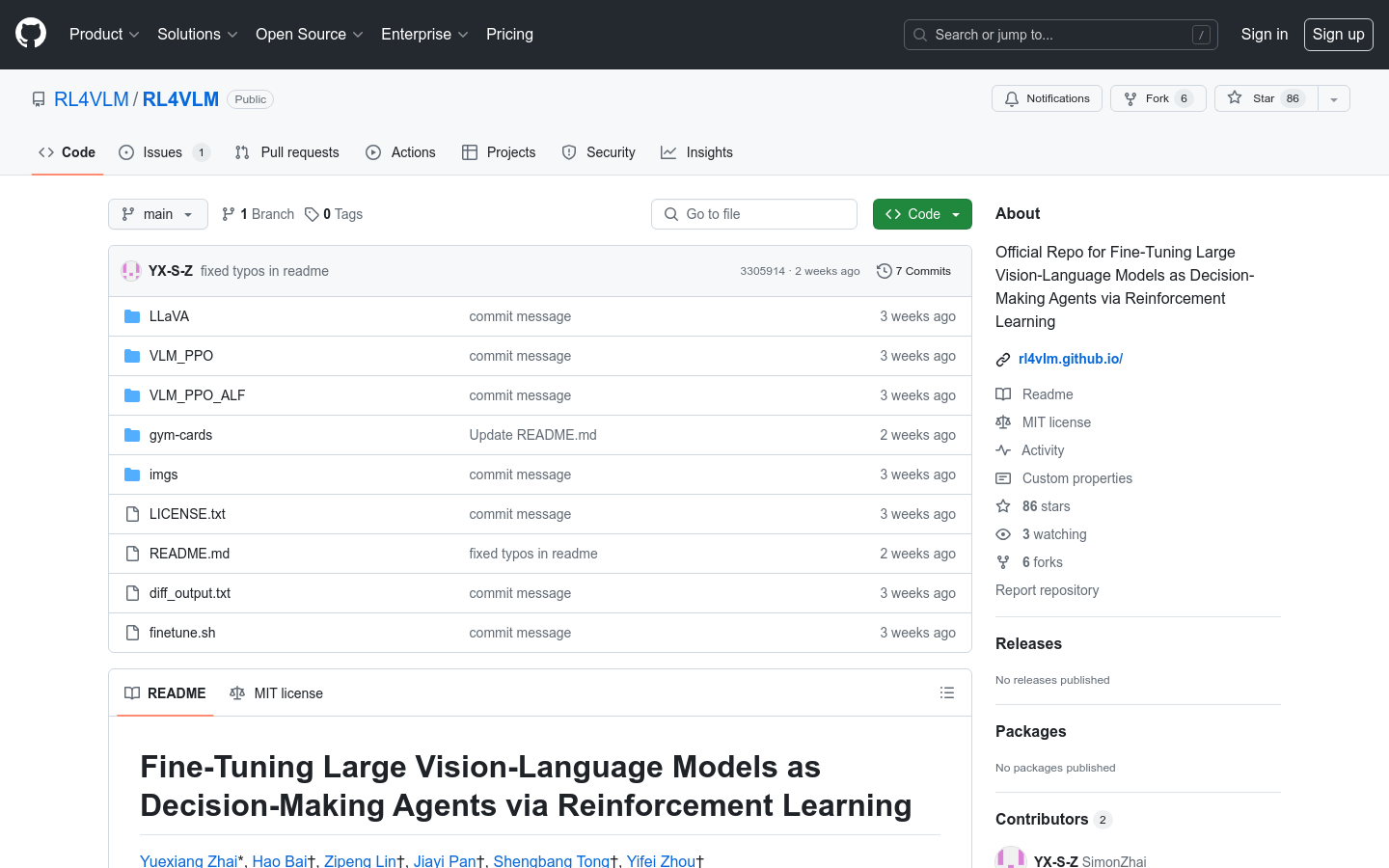

RL4VLM is an open-source project for fine-tuning large vision-language models (VLMs) with reinforcement learning (RL) so that they can act as decision-making agents. It was developed collaboratively by researchers including Yuexiang Zhai, Hao Bai, Zipeng Lin, Jiayi Pan, Shengbang Tong, Alane Suhr, Saining Xie, Yann LeCun, Yi Ma, and Sergey Levine. The project builds on the LLaVA model and uses the PPO algorithm for RL fine-tuning. The repository includes the codebase, installation guidelines, licensing information, and instructions for citing the research.
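For readers unfamiliar with PPO, the following is a minimal sketch of the standard clipped surrogate objective for a single sampled action. It illustrates the general algorithm only and is not taken from the RL4VLM codebase; the function name and the `clip_eps=0.2` default are assumptions chosen for illustration.

```python
import math


def ppo_clipped_objective(new_logp, old_logp, advantage, clip_eps=0.2):
    """Standard PPO clipped surrogate for one action (a value to maximize).

    new_logp / old_logp: log-probabilities of the action under the current
    and the data-collecting policy. clip_eps is an assumed, commonly used
    default, not a value confirmed by the RL4VLM project.
    """
    # Probability ratio between the current and the old policy.
    ratio = math.exp(new_logp - old_logp)
    # Keep the ratio inside [1 - clip_eps, 1 + clip_eps].
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    # Take the more pessimistic of the unclipped and clipped terms.
    return min(ratio * advantage, clipped_ratio * advantage)
```

The clipping limits how far a single update can move the policy away from the one that collected the data, which is the main reason PPO is a common choice for fine-tuning large models.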

Target Users

The target audience is primarily researchers and developers in machine learning and artificial intelligence who want to use vision-language models for decision-making and reinforcement learning research.

Use Cases

Researchers use RL4VLM to fine-tune vision-language models and improve their decision-making in vision-language tasks.

Developers leverage the project's codebase and environments to train customized vision-language models.

Educational institutions use RL4VLM as a teaching case study to show students how reinforcement learning can improve model performance.

Features

Provides a modified LLaVA model.

Includes the original GymCards environment.

Includes the RL4VLM codebase for the GymCards and ALFWorld environments.

Documents the training procedure in detail, including preparing SFT checkpoints and running RL from those checkpoints.

Offers two different conda environments to accommodate the diverse package requirements of GymCards and ALFWorld.

Provides detailed guidelines and template scripts for running algorithms.

Recommends specific checkpoints as starting points, while still allowing different initial models to be used.

How to Use

First, visit the RL4VLM GitHub page to access project information and the codebase.

Prepare the necessary SFT checkpoints according to the provided installation guidelines.

Set up the conda environment required for GymCards or ALFWorld.

Run the fine-tuning process for LLaVA following the guidelines, setting parameters like data paths and output directories.

Execute the RL algorithm using the provided template scripts, configuring GPU count and relevant parameters.

Adjust parameters within the configuration files, such as `num_processes`, based on experimental needs.

Monitor the training process and model performance while the RL run is in progress.

If you use RL4VLM in your research, cite the project according to the provided citation guidelines.
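The `num_processes` adjustment mentioned in the steps above can be sketched as a small helper that rewrites that key in a YAML-style configuration so it matches the number of GPUs in use. This is an illustrative sketch only: the helper name `set_num_processes` and the sample configuration text are hypothetical, and the actual configuration files and their other keys should be taken from the RL4VLM repository.

```python
import re


def set_num_processes(config_text: str, num_gpus: int) -> str:
    """Return config_text with the value of `num_processes` set to num_gpus.

    Hypothetical helper for illustration; it only edits a top-level or
    indented `num_processes: <int>` line and leaves everything else as-is.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    # Match the key and its separator, then the integer value to replace.
    pattern = re.compile(r"^([ \t]*num_processes[ \t]*:[ \t]*)\d+", re.MULTILINE)
    updated, count = pattern.subn(lambda m: f"{m.group(1)}{num_gpus}", config_text)
    if count == 0:
        raise KeyError("num_processes not found in configuration text")
    return updated


# Made-up sample configuration, not copied from the project.
sample_config = "mixed_precision: bf16\nnum_processes: 1\n"
updated_config = set_num_processes(sample_config, 4)
```

Editing the text directly keeps the sketch free of third-party dependencies; in practice, opening the file in an editor and changing the value by hand works just as well.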
