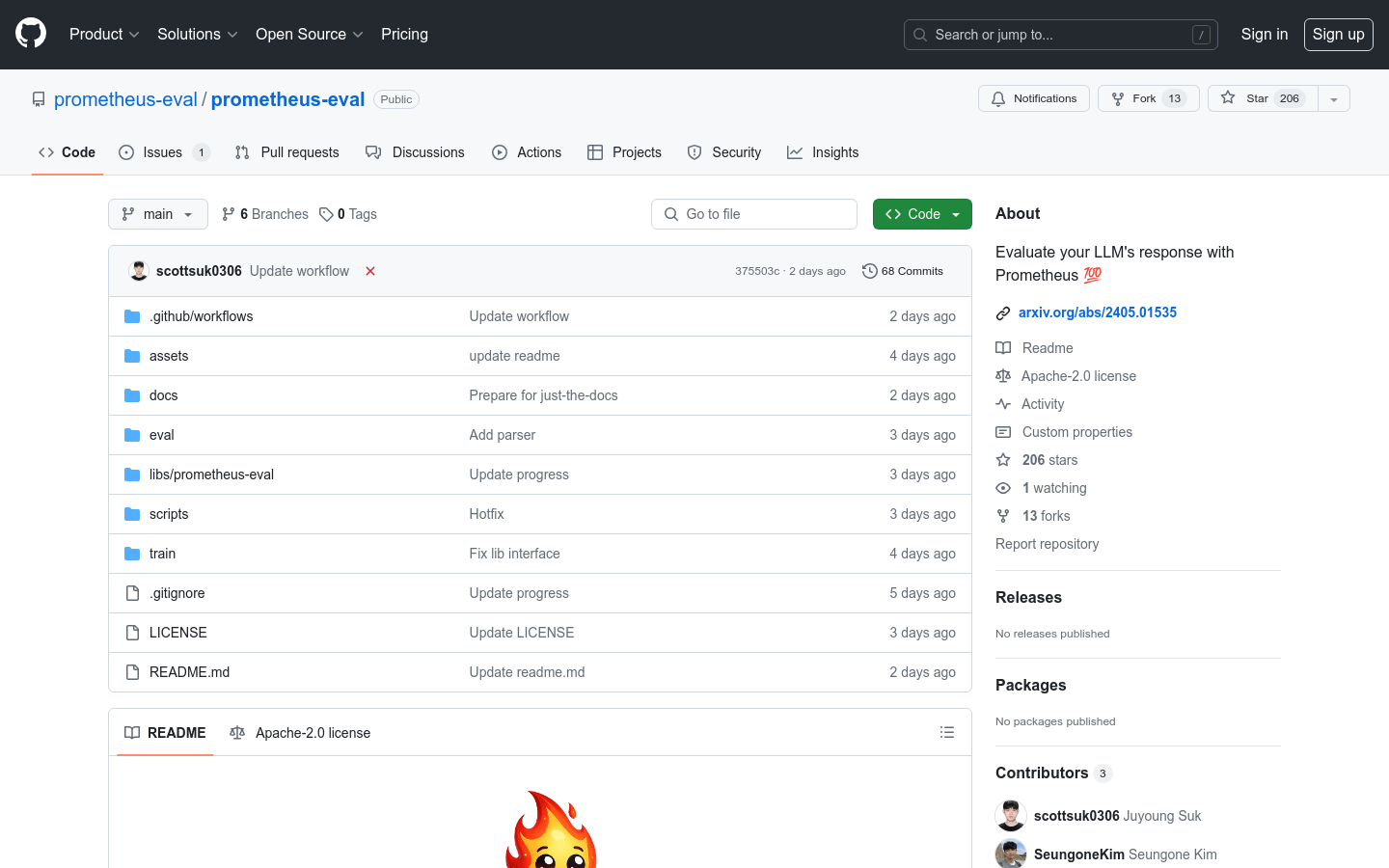

Prometheus-Eval

Overview

Prometheus-Eval is an open-source toolkit for evaluating large language models (LLMs) on generation tasks. It provides a simple interface for grading instructions and responses with the Prometheus judge model. The Prometheus 2 model supports both direct assessment (absolute scoring) and pairwise ranking (relative scoring). It is designed to approximate both human judgments and evaluations made by proprietary LLMs, offering an open alternative that addresses concerns about fairness, controllability, and affordability.

Target Users

Researchers and developers: For evaluating and optimizing their own language models

Educational institutions: As a teaching tool to help students understand the evaluation process of language models

Enterprises: Building internal evaluation processes without relying on proprietary models, protecting data privacy

Use Cases

Evaluate the performance of a language model in sentiment analysis tasks

Compare the advantages and disadvantages of two different models in text generation tasks

Serve as a benchmark for developing new language models
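When comparing two models with relative scoring, the per-item 'A'/'B' verdicts can be summarized as win rates. The sketch below is a minimal, hypothetical helper (`win_rate` is not part of prometheus-eval); it assumes the verdicts have already been collected from the judge, with 'A' meaning model A's response was preferred.

```python
from collections import Counter


def win_rate(verdicts: list[str]) -> dict[str, float]:
    """Return the share of pairwise verdicts won by each side.

    Hypothetical helper: assumes each verdict is 'A' (model A's response
    preferred) or 'B' (model B's response preferred).
    """
    if not verdicts:
        raise ValueError("no verdicts to summarize")
    counts = Counter(verdicts)
    # Reject anything other than the two expected labels.
    unexpected = set(counts) - {"A", "B"}
    if unexpected:
        raise ValueError(f"unexpected verdicts: {sorted(unexpected)}")
    total = len(verdicts)
    return {"A": counts["A"] / total, "B": counts["B"] / total}


print(win_rate(["A", "B", "A", "A"]))  # {'A': 0.75, 'B': 0.25}
```

Pairwise judges can favor whichever response is shown first, so a common precaution is to judge each pair twice with the order swapped and keep only consistent verdicts; that step is not shown here.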

Features

Absolute scoring: Outputs a score between 1 and 5 based on the given instructions, reference answers, and scoring criteria

Relative scoring: Evaluates two responses based on the given instructions and scoring criteria, outputting 'A' or 'B' to indicate the better response

Supports direct download of model weights from the Hugging Face Hub

Provides a Python package, prometheus-eval, to simplify the evaluation process

Includes scripts for training Prometheus models or fine-tuning on custom datasets

Supplies evaluation datasets for training and assessing Prometheus models

Can run on consumer-grade GPUs, lowering hardware requirements
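Whichever scoring mode is used, the verdict has to be pulled out of the judge's text. The sketch below assumes the judge writes its feedback and then ends with a `[RESULT]` marker followed by the score (1 to 5) or the letter (A or B), similar to the Prometheus prompt format; verify the actual output format of the model and package version you use. `parse_absolute` and `parse_relative` are hypothetical helpers, not prometheus-eval functions.

```python
import re

# Assumption: the judge output ends with "[RESULT] <verdict>", e.g.
# "Feedback: ... [RESULT] 4" (absolute) or "Feedback: ... [RESULT] B" (relative).
_RESULT = re.compile(r"\[RESULT\]\s*([1-5AB])\b")


def _last_result(output: str) -> str:
    """Return the verdict that follows the last [RESULT] marker."""
    matches = _RESULT.findall(output)
    if not matches:
        raise ValueError("no [RESULT] marker with a valid verdict found")
    return matches[-1]


def parse_absolute(output: str) -> int:
    """Extract the 1-5 score from an absolute-scoring judgment."""
    verdict = _last_result(output)
    # The regex only admits digits 1-5, so any digit here is in range.
    if not verdict.isdigit():
        raise ValueError(f"expected a score from 1 to 5, got {verdict!r}")
    return int(verdict)


def parse_relative(output: str) -> str:
    """Extract 'A' or 'B' from a relative-scoring judgment."""
    verdict = _last_result(output)
    if verdict not in ("A", "B"):
        raise ValueError(f"expected 'A' or 'B', got {verdict!r}")
    return verdict


print(parse_absolute("Feedback: Clear and accurate. [RESULT] 4"))  # 4
print(parse_relative("Feedback: Response B cites sources. [RESULT] B"))  # B
```

Taking the last marker rather than the first guards against feedback text that happens to quote the marker earlier.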

How to Use

Step 1: Install the Prometheus-Eval Python package

Step 2: Prepare the instructions, responses, and scoring criteria required for evaluation, plus reference answers when using absolute scoring

Step 3: Evaluate using the absolute scoring or relative scoring method

Step 4: Analyze the model's performance based on the output scores or rankings

Step 5: Adjust and optimize the language model based on the evaluation results
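The steps above can be sketched as a small absolute-scoring loop. Everything in it is hypothetical: the rubric layout, `format_rubric`, `evaluate`, and especially `stub_judge`, a toy stand-in for a real Prometheus model call so the sketch runs without a GPU. The prometheus-eval package provides its own judge classes and prompt templates; consult its documentation for their actual names and signatures.

```python
from typing import Callable

# Hypothetical rubric layout: one criterion plus a description for each score 1-5.
RUBRIC = {
    "criteria": "Is the response factually accurate and relevant to the instruction?",
    "score_descriptions": {
        1: "Mostly inaccurate or off-topic.",
        2: "Several inaccuracies or irrelevant parts.",
        3: "Partly accurate with some gaps.",
        4: "Accurate with minor issues.",
        5: "Fully accurate and relevant.",
    },
}

# Hypothetical judge signature: (instruction, response, rubric_text, reference) -> score.
Judge = Callable[[str, str, str, str], int]


def format_rubric(rubric: dict) -> str:
    """Render the rubric as plain text to include in the judge prompt."""
    lines = [f"[{rubric['criteria']}]"]
    for score in range(1, 6):
        lines.append(f"Score {score}: {rubric['score_descriptions'][score]}")
    return "\n".join(lines)


def evaluate(judge: Judge, items: list[dict], rubric: dict) -> dict:
    """Step 3 and 4: score every item, then summarize the scores."""
    rubric_text = format_rubric(rubric)
    scores = [
        judge(item["instruction"], item["response"], rubric_text, item["reference"])
        for item in items
    ]
    return {"scores": scores, "mean": sum(scores) / len(scores)}


def stub_judge(instruction: str, response: str, rubric_text: str, reference: str) -> int:
    # Toy stand-in: full marks on an exact match with the reference, else 3.
    return 5 if response.strip() == reference.strip() else 3


items = [
    {"instruction": "Capital of France?", "response": "Paris", "reference": "Paris"},
    {"instruction": "What is 2 + 2?", "response": "5", "reference": "4"},
]
print(evaluate(stub_judge, items, RUBRIC))  # {'scores': [5, 3], 'mean': 4.0}
```

Passing the judge in as a parameter means `stub_judge` can later be replaced by a function that prompts a Prometheus model and parses its score, without changing `evaluate`.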