ALMA-13B-R

Overview

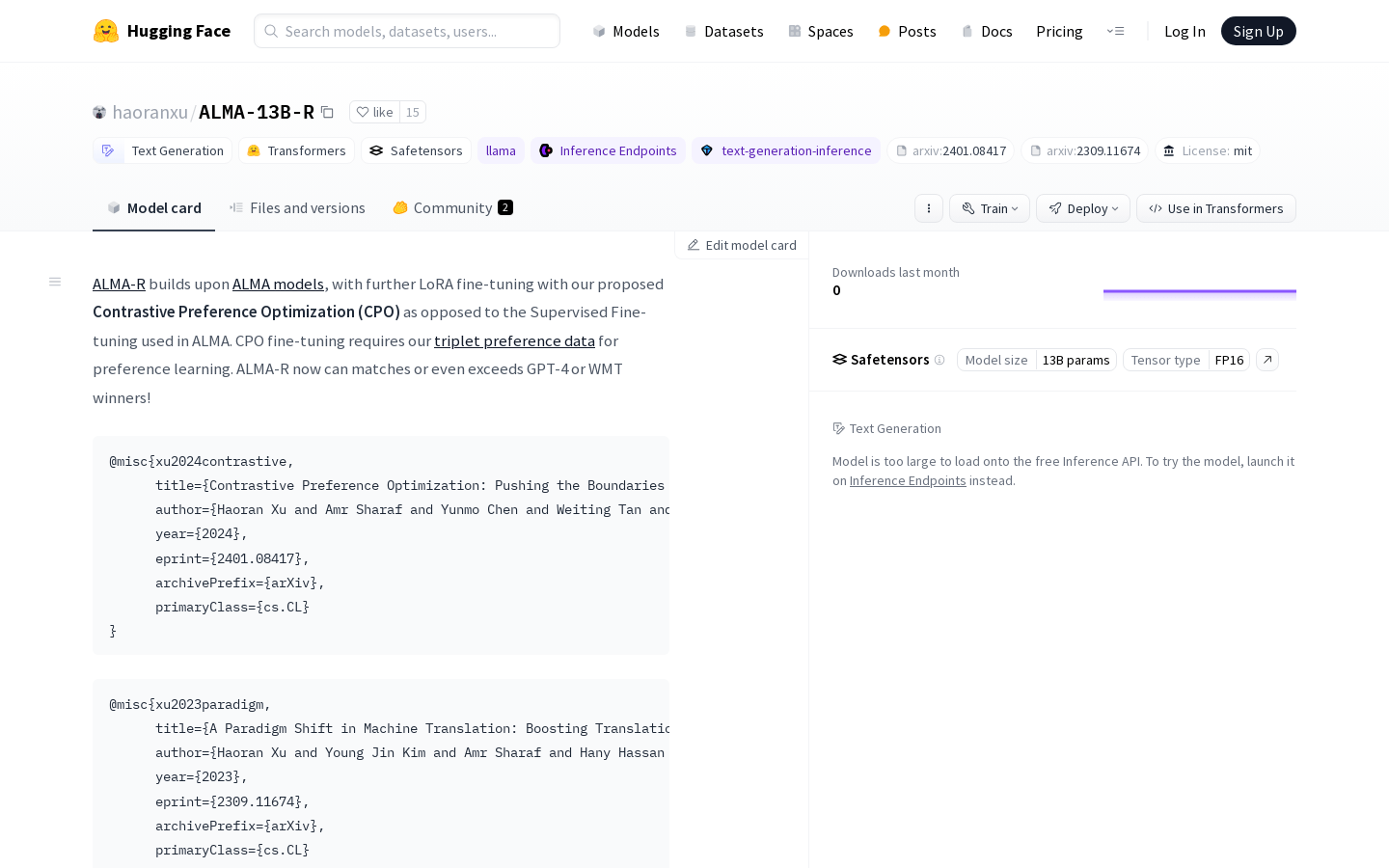

ALMA-R builds on the ALMA translation model. Instead of the supervised fine-tuning used for ALMA, it is further fine-tuned with Contrastive Preference Optimization (CPO), a method proposed by the same authors that learns from triplet preference data. With this additional training, ALMA-R matches or even surpasses GPT-4 and WMT award winners in translation performance. The ALMA(-R) models and datasets can be downloaded from the GitHub repository.
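To make the CPO step more concrete, here is a minimal Python sketch of a CPO-style loss, assuming the formulation from the CPO paper: a preference term, -log sigmoid(beta * (log p(y_w|x) - log p(y_l|x))), that pushes the model to score the preferred translation y_w above the dispreferred one y_l, plus a negative log-likelihood term on y_w. The function names, the use of sequence-level log-probabilities, and the default beta = 0.1 are illustrative assumptions; this is not the ALMA-R training code.

```python
import math
from typing import Sequence


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def cpo_loss(
    logp_chosen: Sequence[float],
    logp_rejected: Sequence[float],
    beta: float = 0.1,  # illustrative value, not taken from the ALMA-R setup
) -> float:
    """Batch-averaged CPO-style loss (sketch under stated assumptions).

    logp_chosen[i] and logp_rejected[i] are assumed to be sequence-level
    log-probabilities log p(y | x) of the preferred and dispreferred
    translations of the i-th source sentence under the model being trained.
    """
    if len(logp_chosen) != len(logp_rejected) or not logp_chosen:
        raise ValueError("need equal-length, non-empty batches")
    total = 0.0
    for lw, ll in zip(logp_chosen, logp_rejected):
        # Preference term: -log sigmoid(beta * (lw - ll)),
        # computed as softplus(-x) to avoid overflow.
        prefer = softplus(-beta * (lw - ll))
        # Likelihood term: negative log-likelihood of the preferred translation.
        nll = -lw
        total += prefer + nll
    return total / len(logp_chosen)
```

For example, `cpo_loss([-10.0], [-30.0])` is lower than `cpo_loss([-10.0], [-5.0])`, because only in the first case does the model already rank the preferred translation above the dispreferred one. Unlike DPO, no frozen reference model appears in this objective, which keeps it cheaper to train.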

Target Users

Users can use the ALMA-R model for machine translation, download the associated datasets to train and fine-tune their own models, or deploy the model in real-world applications.

Use Cases

Use the ALMA-R model for Chinese-to-English machine translation

Download the ALMA-R model for customized fine-tuning

Deploy the ALMA-R model for real-time translation services
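For the Chinese-to-English use case, the following sketch queries the model through Hugging Face `transformers`. It assumes the checkpoint is published on the Hugging Face Hub as `haoranxu/ALMA-13B-R` and that it expects the "Translate this from <source> to <target>:" prompt template used in the ALMA repository; confirm both against the repository before relying on them. The helper names are hypothetical, and `device_map="auto"` additionally requires the `accelerate` package.

```python
def build_prompt(src_text: str, src_lang: str = "Chinese", tgt_lang: str = "English") -> str:
    # Assumed ALMA-style prompt template; check the ALMA repository for the exact format.
    return f"Translate this from {src_lang} to {tgt_lang}:\n{src_lang}: {src_text}\n{tgt_lang}:"


def translate(src_text: str, model_id: str = "haoranxu/ALMA-13B-R") -> str:
    # Heavy dependencies are imported lazily so build_prompt works without them.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.float16, device_map="auto"
    )
    inputs = tokenizer(build_prompt(src_text), return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(**inputs, num_beams=5, max_new_tokens=256)
    # A causal LM returns prompt + continuation; keep only the newly generated tokens.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True).strip()
```

Keeping the prompt builder separate makes it easy to reuse for other language pairs the model supports, and a real-time service would load the model once rather than on every call as this sketch does.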

Features

Download ALMA(-R) model

Download datasets

Machine translation

Model fine-tuning

Model deployment
