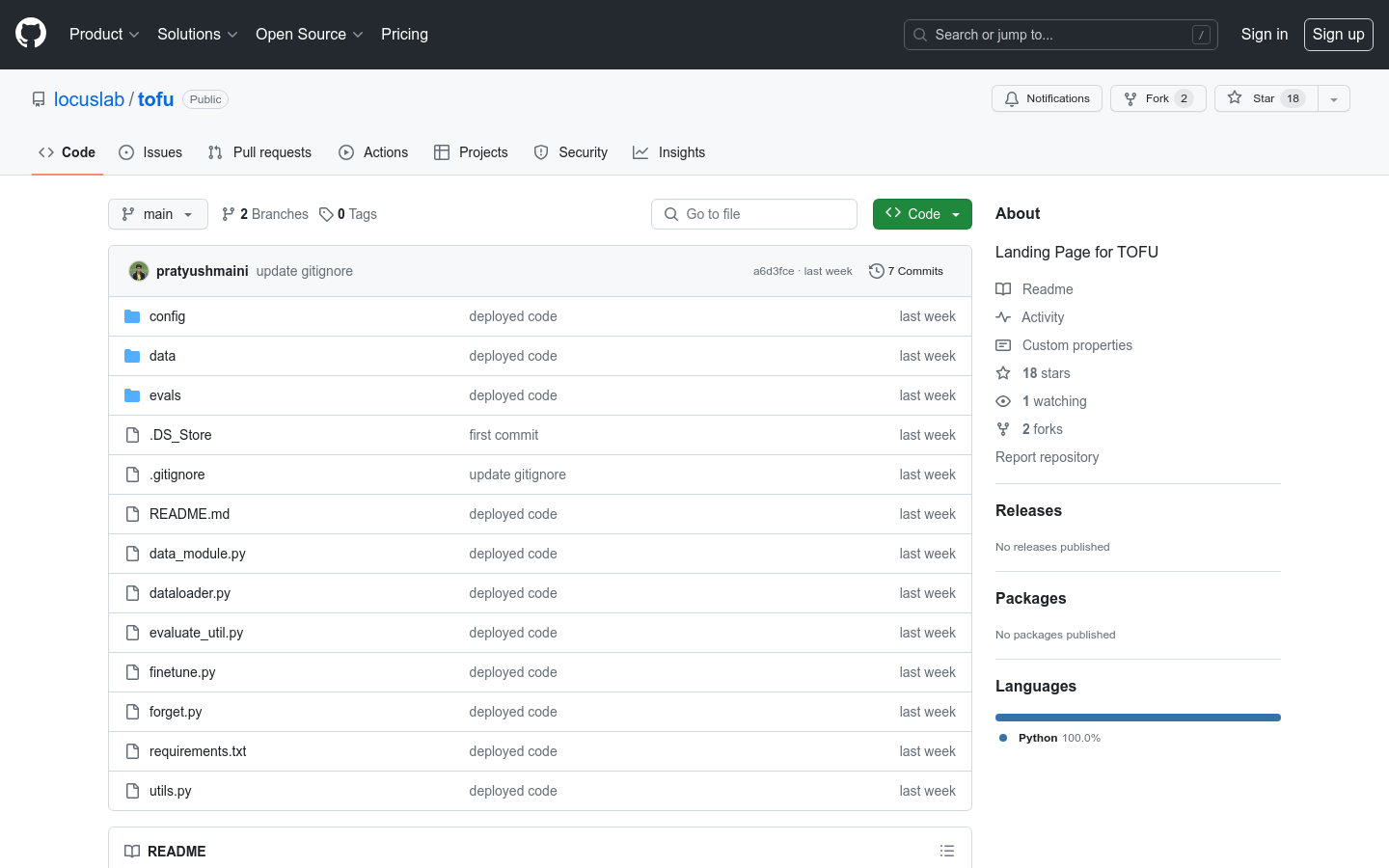

TOFU

Overview

The TOFU dataset contains question-answer pairs generated about 200 fictitious authors who do not exist. It serves as a benchmark for evaluating the forgetting (unlearning) performance of large language models on realistic tasks. The goal is to make a model that has been fine-tuned on this data forget various fractions of it, known as the forget set. Because the dataset uses a question-answer format, it suits popular chat models such as Llama2, Mistral, and Qwen, although it can be used with any other large language model. The accompanying codebase is written for the Llama2 chat model and Phi-1.5 but can be easily adapted to other models.
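The relationship between a forget ratio and the size of the resulting forget and retain sets can be sketched as below. This is a minimal illustration, not code from the TOFU codebase: it assumes 20 question-answer pairs per author and forget ratios of 1%, 5% and 10% (the values we understand the released splits to use), and `split_authors` is a hypothetical helper that simply places the last k authors in the forget set; the real splits may select authors differently.

```python
NUM_AUTHORS = 200
QA_PER_AUTHOR = 20  # assumption: not stated in the overview above


def split_authors(num_authors: int, forget_ratio: float) -> tuple[list[int], list[int]]:
    """Partition author IDs into (forget, retain) lists for a given ratio.

    Hypothetical selection rule: the last k authors form the forget set.
    """
    k = round(num_authors * forget_ratio)
    author_ids = list(range(num_authors))
    return author_ids[num_authors - k:], author_ids[: num_authors - k]


# Assumed forget ratios; print the resulting split sizes for each one.
for ratio in (0.01, 0.05, 0.10):
    forget, retain = split_authors(NUM_AUTHORS, ratio)
    print(
        f"forget {ratio:.0%}: {len(forget)} authors / {len(forget) * QA_PER_AUTHOR} QA pairs, "
        f"retain: {len(retain)} authors / {len(retain) * QA_PER_AUTHOR} QA pairs"
    )
```

Splitting by author rather than by individual question keeps every fact about a forgotten author out of the retain set, so the retain set cannot leak what the model is supposed to forget.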

Target Users

Researchers and developers who want to evaluate the forgetting ability of language models or train chatbot models that can forget specific information.

Use Cases

Fine-tune a Llama model on the TOFU dataset, then have it forget subsets of different sizes (the forget sets) and evaluate its forgetting performance.

Build a chatbot on the TOFU dataset and train it to forget, so that it does not retain or leak sensitive information.

Use the forgetting functionality in the TOFU codebase to compare how different models perform when forgetting specific information.
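A common family of forgetting methods updates the fine-tuned model by gradient ascent on the forget set, often combined with ordinary gradient descent on the retain set (sometimes called "gradient difference"). The sketch below illustrates that update rule on a deliberately tiny, hypothetical model in which each author's fact is stored in a single logit. It is not the TOFU codebase's implementation, and the names `gradient_difference_step`, `mean_nll` and the author keys are our own.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def mean_nll(logits: dict[str, float], authors: list[str]) -> float:
    """Mean negative log-likelihood of the model 'recalling' each author's fact."""
    return sum(-math.log(sigmoid(logits[a])) for a in authors) / len(authors)


def gradient_difference_step(logits, forget, retain, lr):
    """Ascend the forget loss and descend the retain loss by one step.

    For -log(sigmoid(w)), the gradient with respect to w is sigmoid(w) - 1.
    """
    updated = dict(logits)
    for a in forget:
        grad = (sigmoid(logits[a]) - 1.0) / len(forget)
        updated[a] += lr * grad  # gradient ascent: push the forget loss up
    for a in retain:
        grad = (sigmoid(logits[a]) - 1.0) / len(retain)
        updated[a] -= lr * grad  # gradient descent: keep the retain loss down
    return updated


# Hypothetical "fine-tuned" model: every author's fact is recalled with logit 3.0.
model = {"author_a": 3.0, "author_b": 3.0, "author_c": 3.0}
forget_set, retain_set = ["author_c"], ["author_a", "author_b"]

forget_before = mean_nll(model, forget_set)
retain_before = mean_nll(model, retain_set)
for _ in range(20):
    model = gradient_difference_step(model, forget_set, retain_set, lr=1.0)

print(f"forget loss: {forget_before:.3f} -> {mean_nll(model, forget_set):.3f}")
print(f"retain loss: {retain_before:.3f} -> {mean_nll(model, retain_set):.3f}")
```

Because every author has its own parameter here, the two terms never interact. In a real language model the parameters are shared, which is why the retain-set term is needed to limit collateral damage to knowledge the model should keep.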

Features

Provides a benchmark dataset for forgetting (unlearning)

Supports the evaluation of forgetting performance in large language models

Uses a question-answer format suitable for chatbot models

Codebase supports multiple language models
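To our understanding, the TOFU paper scores forgetting by comparing a per-question statistic computed on the forget set for the unlearned model against the same statistic for a model trained only on the retain set, using a two-sample Kolmogorov-Smirnov (KS) test. The sketch below computes only the KS statistic D (the largest gap between the two empirical CDFs), not the p-value; the input values are made up for illustration and `ks_statistic` is our own helper.

```python
def ks_statistic(sample_a: list[float], sample_b: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic D = max |F_a(x) - F_b(x)|."""
    a, b = sorted(sample_a), sorted(sample_b)
    n, m = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(a[i], b[j])
        # Step both empirical CDFs past every occurrence of x (handles ties).
        while i < n and a[i] == x:
            i += 1
        while j < m and b[j] == x:
            j += 1
        d = max(d, abs(i / n - j / m))
    return d


# Made-up per-question statistics for an unlearned model and a retain-only model.
unlearned = [0.62, 0.70, 0.55, 0.81]
retain_only = [0.60, 0.74, 0.58, 0.79]
print(ks_statistic(unlearned, retain_only))
```

A D close to 0 means the two distributions are hard to tell apart, which here is the desired outcome: the unlearned model behaves like one that never saw the forget set. If SciPy is available, `scipy.stats.ks_2samp` returns both the statistic and a p-value.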
