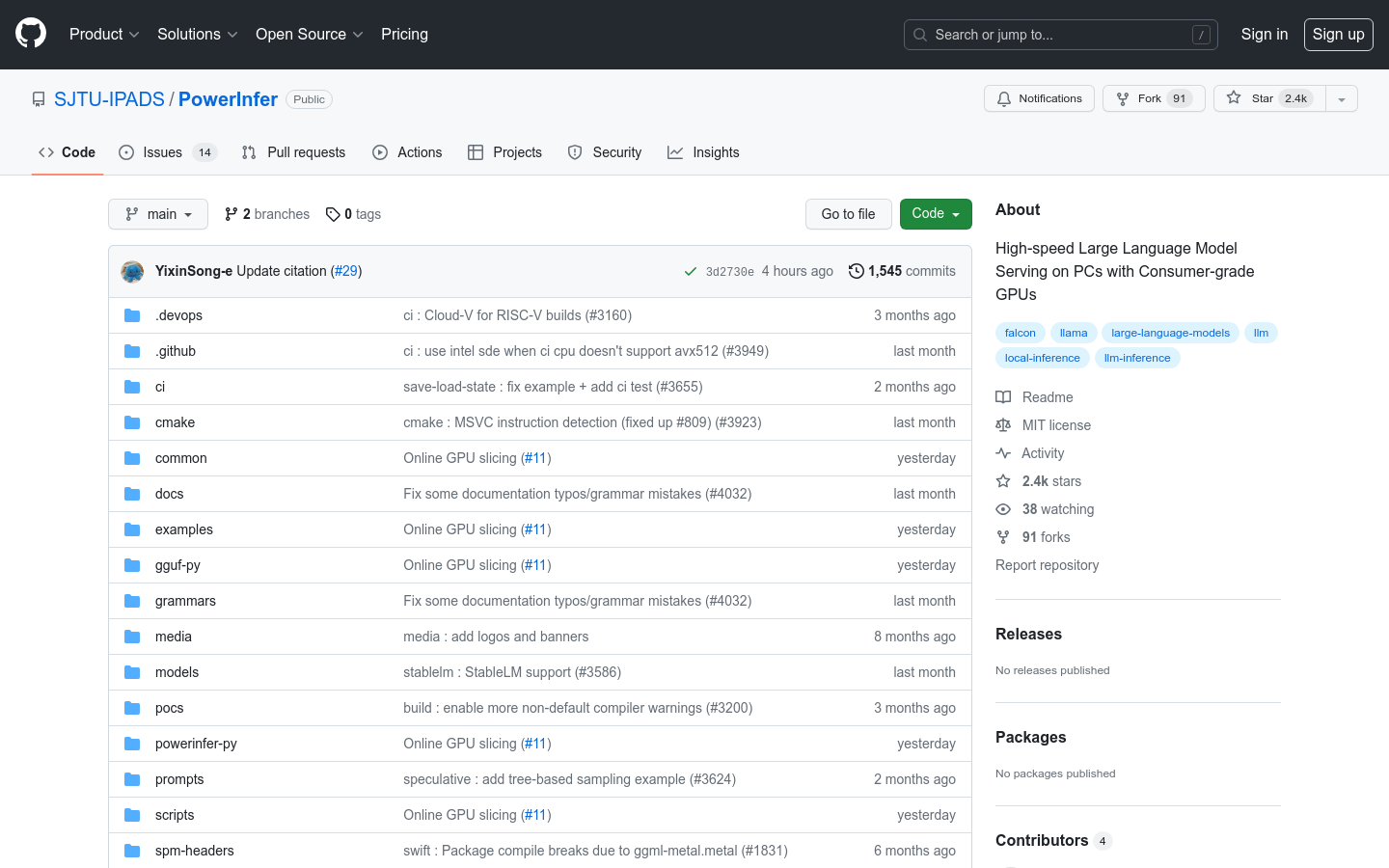

PowerInfer

Overview:

PowerInfer is an inference engine for running large language models (LLMs) at high speed on consumer-grade GPUs in personal computers. It exploits the high locality of LLM inference: a small set of frequently activated ("hot") neurons is preloaded onto the GPU, which significantly reduces GPU memory requirements and CPU-GPU data transfer. PowerInfer also integrates adaptive predictors and neuron-aware sparse operators to make activation prediction and sparse computation more efficient. On a single NVIDIA RTX 4090 GPU it reaches an average generation speed of 13.20 tokens per second, only 18% slower than a top-tier server-grade A100 GPU, while maintaining model accuracy.
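The hot-neuron preloading described above can be illustrated with a minimal sketch. It assumes that per-neuron activation counts are available from an offline profiling run and that GPU capacity is expressed as a number of neurons. The names `split_hot_cold`, `gpu_hit_rate`, and `gpu_neuron_budget` are hypothetical and are not part of PowerInfer's API. The actual placement policy may differ.

```python
from typing import Dict, List, Tuple


def split_hot_cold(
    activation_counts: Dict[int, int],
    gpu_neuron_budget: int,
) -> Tuple[List[int], List[int]]:
    """Split neuron ids into a hot set (kept on the GPU) and a cold set.

    Hypothetical helper: ranks neurons by how often they fired during
    profiling and keeps the top `gpu_neuron_budget` of them as "hot".
    """
    # Most frequently activated first; ties are broken by neuron id.
    ranked = sorted(activation_counts, key=lambda n: (-activation_counts[n], n))
    hot = ranked[:gpu_neuron_budget]
    cold = ranked[gpu_neuron_budget:]
    return hot, cold


def gpu_hit_rate(activation_counts: Dict[int, int], hot: List[int]) -> float:
    """Fraction of all profiled activations that fall on hot neurons."""
    total = sum(activation_counts.values())
    if total == 0:
        return 0.0
    return sum(activation_counts[n] for n in hot) / total


# Made-up profiling data: neuron id -> number of activations observed.
counts = {0: 950, 1: 12, 2: 870, 3: 5, 4: 40, 5: 910}
hot, cold = split_hot_cold(counts, gpu_neuron_budget=3)
rate = gpu_hit_rate(counts, hot)
print(hot, cold, round(rate, 3))
```

With skewed counts like these, half of the neurons cover most of the activations, which is the locality property the approach relies on.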

Target Users:

PowerInfer is aimed at users who need high-speed inference of large language models in local deployments.

Features

Efficient LLM inference using sparse activation and the 'hot'/'cold' neuron concept

Seamless integration of CPU and GPU memory/compute capabilities for load balancing and faster processing speeds

Compatibility with common ReLU sparse models

Designed and deeply optimized for local deployment on consumer-grade hardware, enabling low-latency LLM inference and service

Backward compatibility: it supports inference with the same model weights as llama.cpp, albeit without performance improvements
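To show why ReLU sparsity helps, here is a minimal sketch of a feed-forward layer that computes only the neurons predicted to be active. It assumes a plain two-matrix ReLU layer stored as Python lists, and the `predicted_active` argument stands in for the output of a predictor. All function names are hypothetical and do not reflect PowerInfer's actual operators.

```python
from typing import List, Sequence


def relu(value: float) -> float:
    return value if value > 0 else 0.0


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def dense_ffn(x, w_up, w_down) -> List[float]:
    """Reference computation: evaluate every neuron."""
    hidden = [relu(dot(row, x)) for row in w_up]
    d_out = len(w_down[0])
    return [
        sum(hidden[n] * w_down[n][j] for n in range(len(hidden)))
        for j in range(d_out)
    ]


def sparse_ffn(x, w_up, w_down, predicted_active) -> List[float]:
    """Evaluate only the neurons listed in `predicted_active`.

    Neurons that ReLU zeroes out contribute nothing to the output, so
    skipping them is exact as long as the prediction misses no active neuron.
    """
    d_out = len(w_down[0])
    out = [0.0] * d_out
    for n in predicted_active:
        h = relu(dot(w_up[n], x))
        if h == 0.0:
            continue  # predicted active but actually inactive: no contribution
        for j in range(d_out):
            out[j] += h * w_down[n][j]
    return out


# Toy layer: 2 inputs, 4 hidden neurons, 2 outputs (made-up weights).
x = [1.0, -2.0]
w_up = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
w_down = [[1.0, 0.0], [5.0, 5.0], [7.0, 7.0], [0.0, 0.5]]

dense_out = dense_ffn(x, w_up, w_down)
sparse_out = sparse_ffn(x, w_up, w_down, predicted_active=[0, 3])
print(dense_out, sparse_out)
```

Here only neurons 0 and 3 have positive pre-activations, so the sparse path does half the work and matches the dense result. If the active set omitted a neuron that really fires, the output would change, which is why prediction quality matters.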
