ReconFusion

Overview:

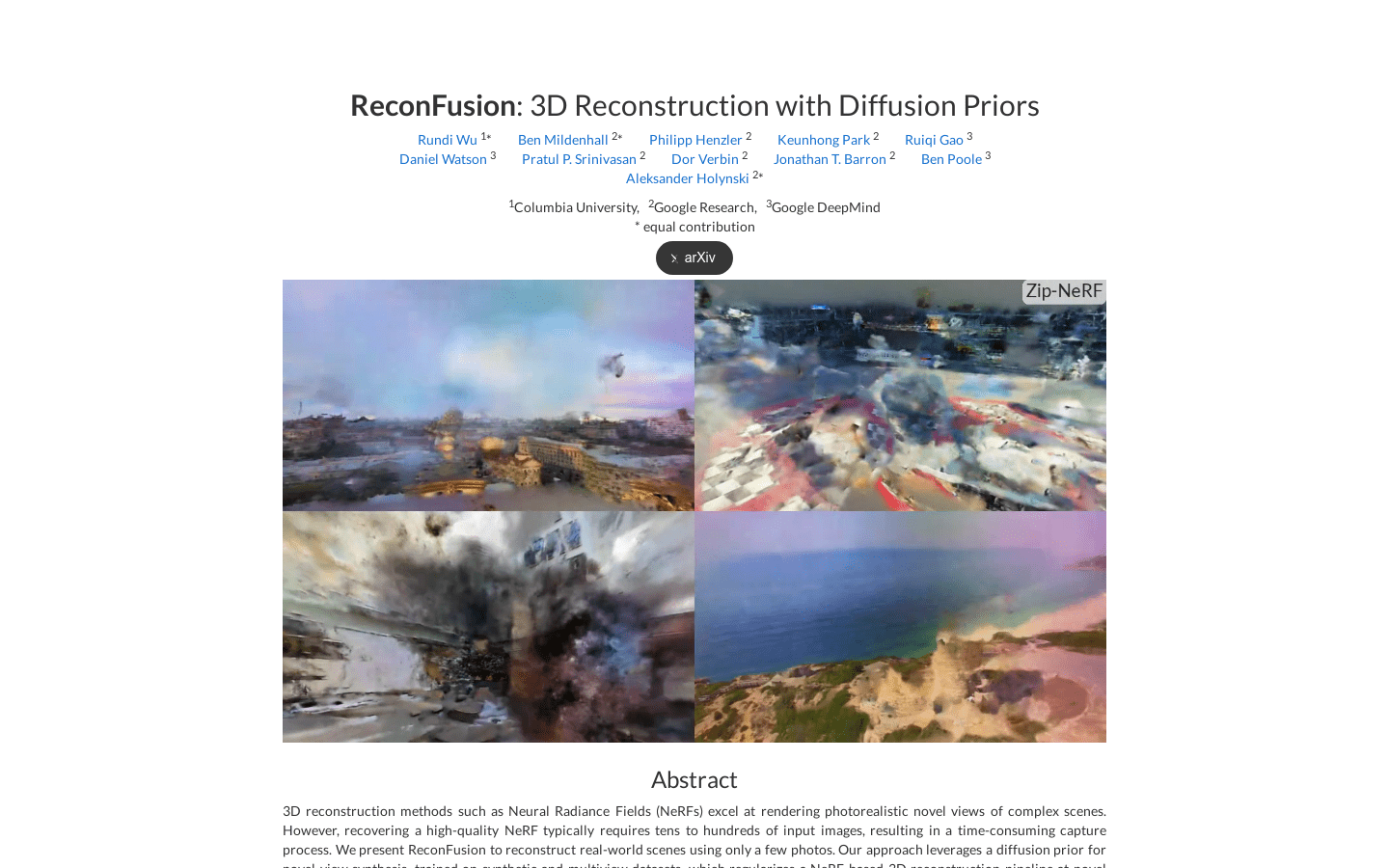

ReconFusion is a 3D reconstruction method that reconstructs real-world scenes from only a few photographs. It regularizes a Neural Radiance Field (NeRF) reconstruction with a diffusion prior for novel view synthesis, which is applied at camera poses beyond those covered by the input images. The diffusion prior is trained on synthetic and multi-view datasets. This lets ReconFusion synthesize realistic geometry and textures in underconstrained regions while preserving the appearance of the observed regions. ReconFusion has been evaluated extensively on real-world datasets, including forward-facing and 360-degree scenes, and shows significant performance improvements over previous few-view NeRF regularization techniques.
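The idea of regularizing a NeRF with a diffusion prior can be summarized as one objective over the NeRF parameters θ. It has a reconstruction term over the N input views and a weighted sample term at randomly drawn novel poses. This is a generic sketch in our own notation. The symbols below are labels we introduce, and this overview does not specify the exact form or weighting of each loss term in ReconFusion.

$$
\mathcal{L}(\theta) = \sum_{i=1}^{N} \mathcal{L}_{\text{recon}}\big(R_\theta(\pi_i),\, x_i\big) + \lambda\, \mathbb{E}_{\pi}\Big[\mathcal{L}_{\text{sample}}\big(R_\theta(\pi),\, \hat{x}_\pi\big)\Big]
$$

Here R_θ(π) is the NeRF rendering at camera pose π, and x_i is the i-th input photograph taken at pose π_i. The pose π is a novel pose sampled away from the inputs, x̂_π is the image the diffusion prior generates for that pose conditioned on the inputs, and λ is an assumed weight balancing the two terms.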

Target Users:

ReconFusion suits scenarios that require 3D reconstruction of real-world scenes from only a few views. It synthesizes realistic geometry and textures in underconstrained regions while preserving the appearance of observed regions.

Use Cases

Use Case 1: In medical imaging, ReconFusion could potentially be applied to reconstruct human organ models from a limited number of views.

Use Case 2: In architectural design, ReconFusion can be used to reconstruct realistic architectural scenes from photos taken from only a few viewpoints.

Use Case 3: In virtual reality applications, ReconFusion can be used to generate realistic virtual environments from a small number of input images.

Features

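Optimizes a NeRF by minimizing a reconstruction loss against the input images plus a sample loss against images that the diffusion prior generates at random novel poses

Uses a PixelNeRF-style model to fuse information from the input images into a feature map for the novel camera pose, which conditions the diffusion model

Denoises noisy latents with the conditioned diffusion model and decodes the result into output samples that supervise the NeRF rendering at that pose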
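The interplay of the reconstruction loss and the sample loss can be illustrated with a deliberately tiny toy. This is a sketch under strong assumptions, not ReconFusion's implementation. The "scene" is a 1-D list of values instead of a NeRF, and a camera pose is just the list of positions it sees. The hypothetical `toy_prior_sample` function stands in for the PixelNeRF-conditioned diffusion model: it copies what the input images show and fills unseen positions with their mean value. Plain gradient descent then minimizes the squared-error reconstruction loss on the input views, plus a `lam`-weighted squared-error sample loss at one randomly chosen novel pose per step. The names `render`, `toy_prior_sample`, `optimize` and the values of `lam`, `lr` and `steps` are all illustrative choices.

```python
import random


def render(theta, pose):
    """Toy 'rendering': read the scene values at the positions this pose sees."""
    return [theta[i] for i in pose]


def toy_prior_sample(pose, observed):
    """Hypothetical stand-in for the diffusion prior: keep what the input images
    show and fill positions no input image sees with the mean observed value."""
    fill = sum(observed.values()) / len(observed)
    return [observed.get(i, fill) for i in pose]


def optimize(inputs, novel_poses, n=6, lam=0.5, lr=0.1, steps=500, seed=0):
    """Gradient descent on reconstruction loss + lam * sample loss (squared errors)."""
    rng = random.Random(seed)
    # Positions covered by at least one input view, mapped to their observed value.
    observed = {i: v for pose, img in inputs for i, v in zip(pose, img)}
    theta = [0.0] * n
    for _ in range(steps):
        grad = [0.0] * n
        # Reconstruction loss: match every input view at its own pose.
        for pose, img in inputs:
            for i, pred, target in zip(pose, render(theta, pose), img):
                grad[i] += 2.0 * (pred - target)
        # Sample loss: match the prior's sample at one random novel pose.
        pose = rng.choice(novel_poses)
        sample = toy_prior_sample(pose, observed)
        for i, pred, target in zip(pose, render(theta, pose), sample):
            grad[i] += lam * 2.0 * (pred - target)
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta


# Two overlapping input views cover positions 0-3; the novel poses also see 4 and 5.
inputs = [([0, 1, 2], [0.2, 0.4, 0.6]), ([1, 2, 3], [0.4, 0.6, 0.8])]
novel_poses = [[2, 3, 4], [3, 4, 5]]
theta = optimize(inputs, novel_poses)
print([round(t, 3) for t in theta])  # [0.2, 0.4, 0.6, 0.8, 0.5, 0.5]
```

The observed positions keep their input values (0.2 to 0.8), and the unseen positions 4 and 5 settle on the toy prior's fill value of 0.5. In miniature, this mirrors how a prior can fill underconstrained regions without overwriting what the input photographs already pin down.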