4D-fy

Overview:

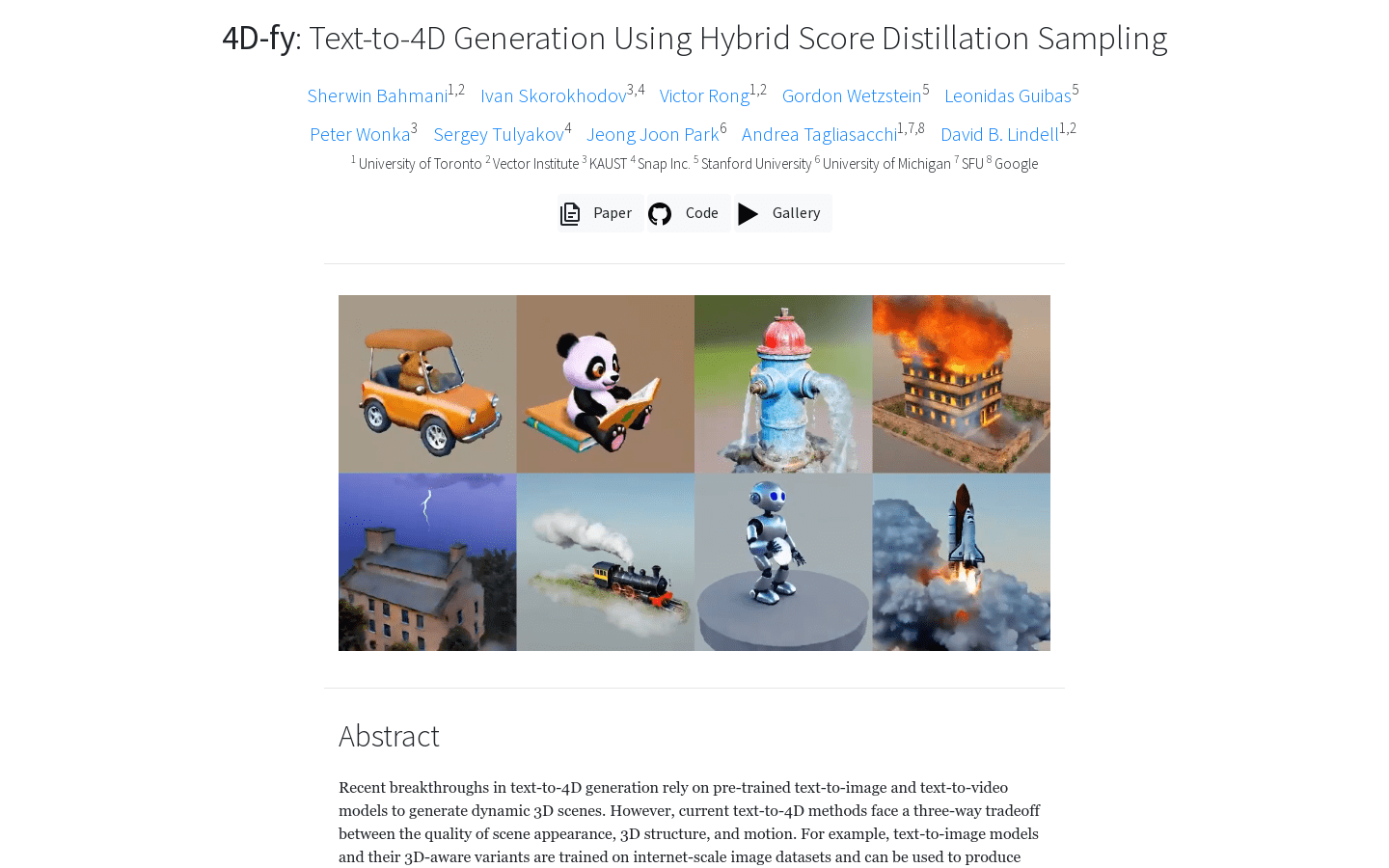

4D-fy is a text-to-4D generation method that uses hybrid score distillation sampling, combining supervision signals from several pre-trained diffusion models to generate high-fidelity 4D scenes from text. It parameterizes a 4D radiance field with a neural representation built on static and dynamic multi-scale hash table features, then renders images and videos from that representation using volume rendering.
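The volume rendering step mentioned above can be illustrated with the standard alpha-compositing quadrature along a single ray. This is a minimal sketch, not 4D-fy's implementation: the function name `composite_ray` and its arguments are hypothetical, and in a real system the densities and colors would come from the neural representation rather than being passed in by hand.

```python
import math


def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one ray (standard volume rendering quadrature).

    densities: non-negative density at each sample, ordered near to far
    colors:    (r, g, b) tuple at each sample
    deltas:    distance between consecutive samples
    All names are illustrative assumptions, not part of 4D-fy's code.
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution of this sample
        weights.append(weight)
        rgb = [acc + weight * c for acc, c in zip(rgb, color)]
        transmittance *= 1.0 - alpha
    return rgb, weights
```

Rendering a video frame amounts to repeating this compositing for every pixel's ray at a given timestamp; a static image is the same procedure with time held fixed.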

With hybrid score distillation sampling, 4D-fy first optimizes the representation using gradients from a 3D-aware text-to-image model (3D-T2I), then incorporates gradients from a text-to-image model (T2I) to improve appearance, and finally incorporates gradients from a text-to-video model (T2V) to add motion to the scene. The resulting 4D scenes combine compelling appearance, 3D structure, and motion.
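The staged optimization described above can be sketched as a schedule that decides which diffusion models contribute gradients at each step. This is only an illustration under stated assumptions: the step boundaries (`stage1_end`, `stage2_end`), the function names, and the plain weighted sum are hypothetical, and the source does not specify how the gradients are weighted or interleaved.

```python
def active_guidance(step, stage1_end=1000, stage2_end=2000):
    """Return which models supply gradients at a given optimization step.

    The boundaries are hypothetical defaults, not values from 4D-fy.
    """
    if step < 0:
        raise ValueError("step must be non-negative")
    if step < stage1_end:
        return ("3D-T2I",)                # stage 1: 3D structure
    if step < stage2_end:
        return ("3D-T2I", "T2I")          # stage 2: add appearance guidance
    return ("3D-T2I", "T2I", "T2V")       # stage 3: add motion guidance


def combine_gradients(gradients, weights):
    """Weighted sum of per-model gradients (equal-length lists of floats).

    A simple sum is an assumption made for illustration only.
    """
    names = list(gradients)
    total = [0.0] * len(gradients[names[0]])
    for name in names:
        for i, g in enumerate(gradients[name]):
            total[i] += weights[name] * g
    return total
```

For example, `active_guidance(1500)` returns `("3D-T2I", "T2I")`, so only those two models would be queried for gradients at that step.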

Target Users:

Teams that need high-fidelity 4D scenes generated from text descriptions, for example in film visual effects, virtual reality, and related fields.

Use Cases

A film effects company uses 4D-fy to generate a fire scene.

A virtual reality game developer uses 4D-fy to generate dynamic virtual environments.

An advertising agency uses 4D-fy to create product showcases with captivating appearance and motion effects.

Features

Achieves high-fidelity text-to-4D generation using hybrid score distillation sampling.

Parameterizes 4D radiance fields using neural representations, with static and dynamic multi-scale hash table features.

Renders images and videos with volume rendering, guided by supervision signals from several pre-trained diffusion models.
