DragNUWA

Overview:

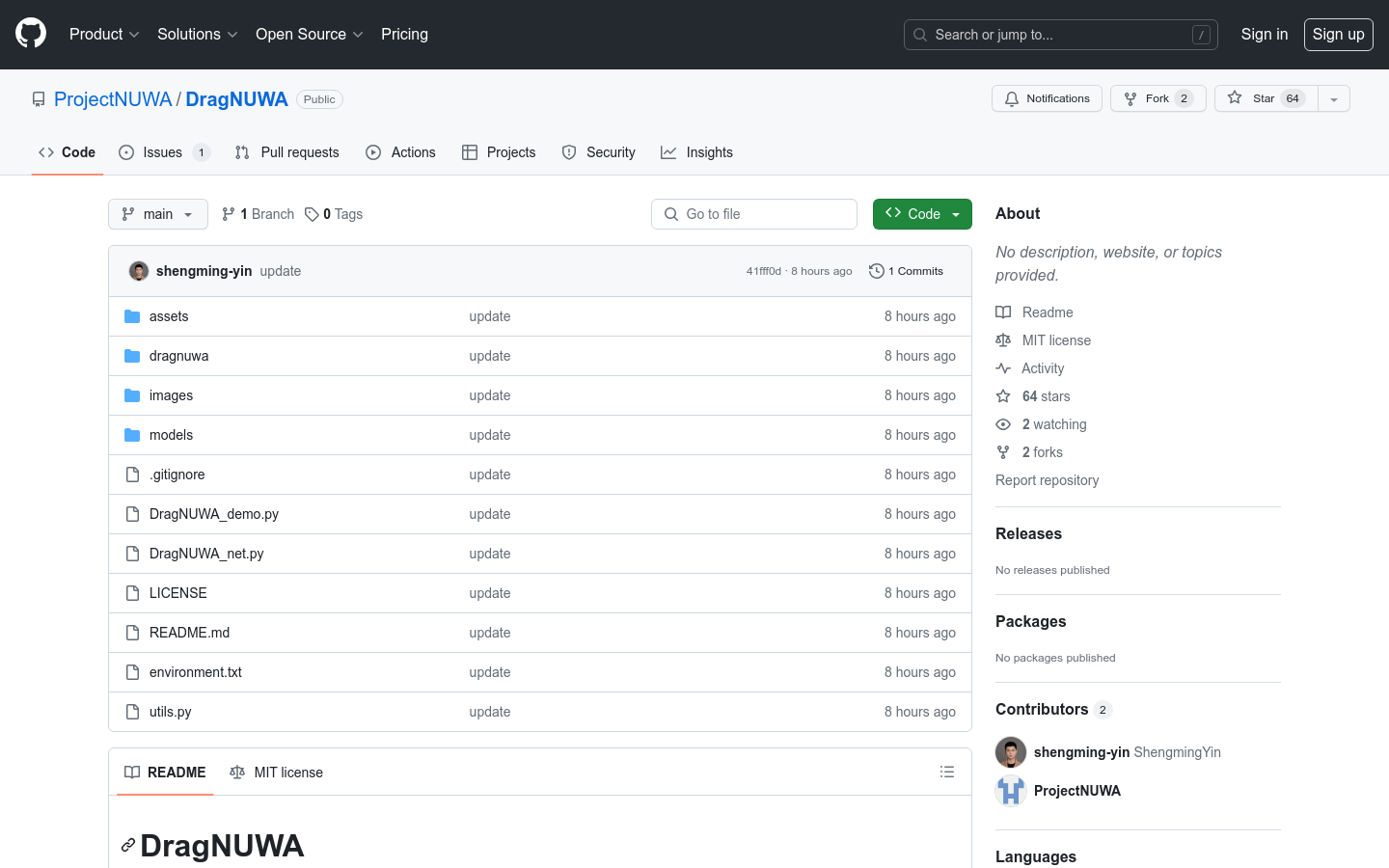

DragNUWA is a video generation tool that turns drag actions into camera or object motion. Users directly manipulate the background or objects in an image, and DragNUWA generates a corresponding video. DragNUWA 1.5 is built on Stable Video Diffusion and animates an image so that its content moves along a user-specified path. DragNUWA 1.0 uses text, image, and trajectory as three control inputs, giving fine-grained control over the generated video at the semantic, spatial, and temporal levels. To use it, clone the repository with Git, download the pre-trained models, and generate animations locally by dragging on images.
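The idea of moving image content "along a specific path" can be made concrete with a small sketch. It assumes a drag is recorded as a polyline of (x, y) pixel positions and that the video generator needs one position per output frame, so the path is resampled at evenly spaced arc lengths. The helper `resample_trajectory` and its parameters are hypothetical illustrations, not DragNUWA's actual interface or preprocessing.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def resample_trajectory(points: List[Point], num_frames: int) -> List[Point]:
    """Return num_frames positions spaced evenly (by arc length) along a drag path.

    Hypothetical helper for illustration only; not part of DragNUWA's code.
    """
    if not points:
        raise ValueError("trajectory needs at least one point")
    if num_frames < 2:
        raise ValueError("num_frames must be at least 2")

    # Cumulative arc length at each vertex of the polyline.
    cum = [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cum[-1]

    if total == 0.0:
        # Degenerate path (a click rather than a drag): the point stays put.
        return [points[0]] * num_frames

    out: List[Point] = []
    seg = 0
    for i in range(num_frames):
        # Distance along the path that frame i should reach.
        target = total * i / (num_frames - 1)
        # Advance to the segment that contains the target distance.
        while seg < len(points) - 2 and cum[seg + 1] < target:
            seg += 1
        seg_len = cum[seg + 1] - cum[seg]
        t = 0.0 if seg_len == 0 else (target - cum[seg]) / seg_len
        (x0, y0), (x1, y1) = points[seg], points[seg + 1]
        # Linear interpolation within the segment.
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out
```

For example, `resample_trajectory([(0, 0), (10, 0), (10, 10)], 5)` returns `[(0.0, 0.0), (5.0, 0.0), (10.0, 0.0), (10.0, 5.0), (10.0, 10.0)]`, five positions evenly spaced along the 20-pixel L-shaped path. Resampling by arc length rather than by vertex index keeps the apparent speed constant even when the recorded drag samples are unevenly spaced.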

Target Users:

Designed for image and video creators, particularly those who need precise control over video generation.

Use Cases

User A used DragNUWA 1.5, which is built on Stable Video Diffusion, to create an art video whose motion follows a specific path.

User B used DragNUWA 1.0's text, image, and trajectory controls to generate a creative video with a specific scene and timing.

User C turned still images into animated videos using DragNUWA's drag operations.

Features

Generate highly controllable videos through drag operations

Built on Stable Video Diffusion (DragNUWA 1.5)

Use text, images, and trajectories to control video generation (DragNUWA 1.0)
