AnimateDiff

Overview:

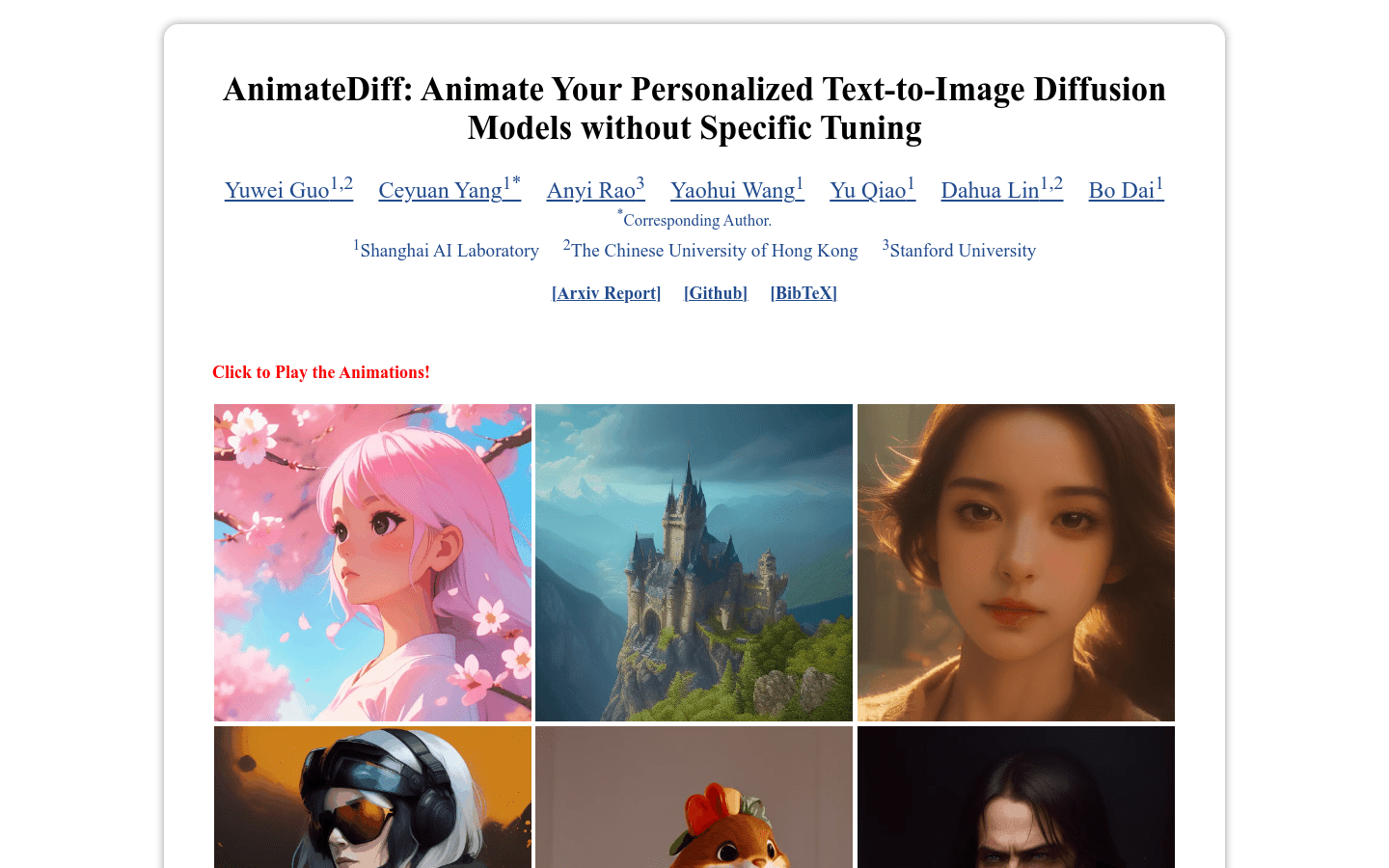

AnimateDiff is a framework for animating personalized text-to-image models. It inserts a newly initialized motion modeling module into a frozen base text-to-image model and trains that module on video clips so that it learns reasonable motion priors. Once trained, the module can be injected into any personalized model derived from the same base model, letting it generate diverse animated images in its own personalized style. Because the same module is reused across these models, no model-specific fine-tuning is needed to animate them.
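The mechanism described above can be illustrated with a toy NumPy sketch. It is not the AnimateDiff implementation: the function names (`spatial_layer`, `motion_module`, `init_motion_module`, `animate_block`), the single-head temporal self-attention design, the tiny tensor sizes, and the zero-initialized output projection are all illustrative assumptions. A per-frame "spatial" layer stands in for the frozen text-to-image model, and the motion module attends across frames at every pixel, so one set of motion parameters can be plugged in after either the base weights or a personalized variant of them.

```python
import numpy as np


def spatial_layer(frames, w_spatial):
    """Stand-in for a frozen text-to-image layer (hypothetical).

    frames: (num_frames, channels, height, width); w_spatial: (channels, channels).
    Each frame is processed independently, so this layer carries no motion.
    """
    return np.einsum("fchw,cd->fdhw", frames, w_spatial)


def motion_module(x, params):
    """Toy motion module: single-head self-attention along the frame axis."""
    f, c, h, w = x.shape
    # Every spatial location becomes a sequence of `f` frame tokens of size `c`.
    tokens = x.transpose(2, 3, 0, 1).reshape(h * w, f, c)
    q = tokens @ params["w_q"]
    k = tokens @ params["w_k"]
    v = tokens @ params["w_v"]
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(c)        # (h*w, f, f)
    scores -= scores.max(axis=-1, keepdims=True)          # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)              # softmax over frames
    out = (attn @ v) @ params["w_out"]                    # (h*w, f, c)
    out = out.reshape(h, w, f, c).transpose(2, 3, 0, 1)   # back to (f, c, h, w)
    return x + out                                        # residual connection


def init_motion_module(channels, rng):
    """Assumed initializer: a zero output projection makes the module an identity map."""
    scale = 1.0 / np.sqrt(channels)
    return {
        "w_q": rng.normal(0.0, scale, (channels, channels)),
        "w_k": rng.normal(0.0, scale, (channels, channels)),
        "w_v": rng.normal(0.0, scale, (channels, channels)),
        "w_out": np.zeros((channels, channels)),
    }


def animate_block(frames, w_spatial, motion_params):
    """Frozen (base or personalized) spatial layer followed by the shared motion module."""
    return motion_module(spatial_layer(frames, w_spatial), motion_params)


rng = np.random.default_rng(0)
channels = 4
frames = rng.normal(size=(8, channels, 3, 3))            # 8 frames of 3x3 features
w_base = np.eye(channels)                                # toy "base model" weights
w_personal = w_base + 0.1 * rng.normal(size=(channels, channels))  # toy personalized variant

motion = init_motion_module(channels, rng)
# Freshly inserted, the motion module leaves the frozen model's output unchanged.
assert np.allclose(animate_block(frames, w_base, motion), spatial_layer(frames, w_base))

# Stand-in for training: give the output projection nonzero weights.
motion["w_out"] = rng.normal(0.0, 0.5, (channels, channels))

# The same motion parameters are reused unchanged with both spatial weight sets.
base_video = animate_block(frames, w_base, motion)
personal_video = animate_block(frames, w_personal, motion)
```

Because the spatial layer handles each frame on its own, only the motion module moves information between frames; swapping `w_base` for `w_personal` changes the per-frame content while the motion parameters stay untouched.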

Target Users:

AnimateDiff suits scenarios that call for turning text prompts into animated images with personalized text-to-image models, such as creative work and entertainment.

Features

Animate personalized text-to-image models, turning text prompts into animated images

No model-specific fine-tuning required

Generate diverse and personalized animated images
